Implementing training logic

Scalable AI Models with PyTorch Lightning

Sergiy Tkachuk

Director, GenAI Productivity

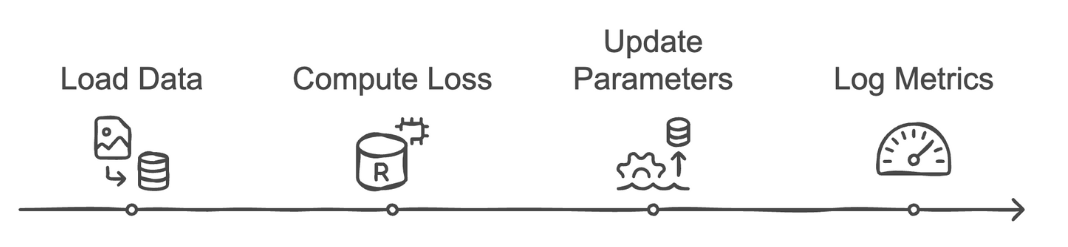

Defining the training step

- Unpack the batch into inputs and labels

- Compute predictions with forward pass

- Calculate cross entropy loss for classification

- Log training loss for monitoring

from torch.nn.functional import cross_entropy

def training_step(self, batch, batch_idx):
    x, y = batch  # unpack inputs and labels
    y_hat = self(x)  # forward pass
    loss = cross_entropy(y_hat, y)
    self.log("train_loss", loss)
    return loss
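To make the loss calculation concrete, here is a minimal pure-Python sketch of what a cross-entropy loss on raw class scores (logits) computes, assuming mean reduction over the batch. The function below is an illustrative re-implementation, not the PyTorch one, and the batch values are made up.

```python
import math


def cross_entropy(logits, targets):
    """Mean cross-entropy over a batch of raw class scores (logits)."""
    total = 0.0
    for row, target in zip(logits, targets):
        # Log-sum-exp with the max subtracted for numerical stability.
        peak = max(row)
        log_norm = peak + math.log(sum(math.exp(v - peak) for v in row))
        # Negative log-probability assigned to the true class.
        total += log_norm - row[target]
    return total / len(targets)


# Hypothetical batch: two samples, three classes.
y_hat = [[2.0, 0.5, -1.0], [0.0, 0.0, 0.0]]
y = [0, 2]
loss = cross_entropy(y_hat, y)
print(f"train_loss = {loss:.4f}")
```

A confident, correct prediction yields a loss near zero, while uniform scores over K classes yield log(K).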

Configuring optimizers

- Select an appropriate optimizer for updates

- Pass the model parameters to the optimizer so it can update them

- Set a suitable learning rate for convergence

- Return the optimizer instance for Lightning integration

def configure_optimizers(self):
    optimizer = torch.optim.Adam(self.parameters(), lr=1e-3)
    return optimizer
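To show how the learning rate scales each update, here is a minimal sketch of a single Adam step for one scalar parameter. The helper `adam_step` and its `state` dictionary are hypothetical names for illustration; the default values are assumed to match the usual Adam defaults (betas of 0.9 and 0.999, eps of 1e-8).

```python
import math


def adam_step(param, grad, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a single scalar parameter."""
    state["t"] += 1
    # Exponential moving averages of the gradient and its square.
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad * grad
    # Bias correction compensates for the zero-initialized averages.
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)


state = {"t": 0, "m": 0.0, "v": 0.0}
w = adam_step(1.0, grad=2.0, state=state)
print(w)
```

On the first step the update size is close to `lr` regardless of the gradient's magnitude, which is one reason 1e-3 is a common starting point.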

Training with Lightning Trainer

- Integrate training logic with Lightning Trainer

- Manage training loops and epochs automatically

- Monitor performance metrics in real time
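As a rough picture of what the Trainer automates, the sketch below writes the epoch and batch loops by hand for a toy one-parameter model. `TinyModule` and `fit` are hypothetical stand-ins, the gradient is derived by hand instead of by autograd, and plain SGD replaces the configured optimizer; this is a simplification, not Lightning's implementation.

```python
class TinyModule:
    """Hypothetical stand-in for a LightningModule: fits y = w * x."""

    def __init__(self):
        self.w = 0.0
        self.logged = []  # plays the role of self.log(...)

    def training_step(self, batch, batch_idx):
        x, y = batch
        error = self.w * x - y
        loss = error ** 2
        self.logged.append(loss)
        # Gradient of the squared error with respect to w, derived by hand.
        return loss, 2 * x * error


def fit(module, train_batches, max_epochs, lr=0.05):
    """Simplified view of the loops a trainer runs on the user's behalf."""
    for epoch in range(max_epochs):
        for batch_idx, batch in enumerate(train_batches):
            loss, grad = module.training_step(batch, batch_idx)
            module.w -= lr * grad  # optimizer step (plain SGD here)


model = TinyModule()
fit(model, train_batches=[(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)], max_epochs=5)
print(model.w, len(model.logged))
```

The module only defines what happens for one batch; the loops, the update, and the logging cadence live outside it.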

Using trainer.fit and trainer.validate

- Start training with trainer.fit method

- Validate model with trainer.validate method

trainer.fit(model, train_dataloader)
trainer.validate(model, val_dataloader)

- Automate training and validation cycles

- Monitor metrics during both phases
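In Lightning, trainer.validate relies on the module defining a validation_step. The pure-Python sketch below illustrates the kind of aggregation a validation pass performs: a metric is computed per batch and averaged over all samples, with no parameter updates. The helpers `accuracy` and `validate`, the metric name `val_acc`, and the batch values are hypothetical.

```python
def accuracy(logits, targets):
    """Fraction of samples whose highest-scoring class matches the label."""
    hits = 0
    for row, target in zip(logits, targets):
        predicted = max(range(len(row)), key=row.__getitem__)
        hits += predicted == target
    return hits / len(targets)


def validate(val_batches):
    """Average a metric over all validation batches; no parameters change."""
    correct, seen = 0.0, 0
    for logits, targets in val_batches:
        # Weight each batch by its size so uneven batches average correctly.
        correct += accuracy(logits, targets) * len(targets)
        seen += len(targets)
    return {"val_acc": correct / seen}


# Hypothetical precomputed logits and labels for two validation batches.
val_batches = [
    ([[2.0, 1.0], [0.0, 3.0]], [0, 1]),
    ([[1.0, 0.0]], [1]),
]
result = validate(val_batches)
print(result)
```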

Complete training logic example

- Define a custom LightningModule with a classifier

- Implement training_step to compute and log loss

- Configure optimizers to update model parameters

- Train and validate the model

import torch
import pytorch_lightning as pl  # assumed import behind the pl alias
from pytorch_lightning import Trainer

class LightClassifier(pl.LightningModule):
    def __init__(self):
        super().__init__()
        self.layer = torch.nn.Linear(28 * 28, 10)

    def forward(self, x):
        # Flatten each image to a vector before the linear layer
        return self.layer(x.view(x.size(0), -1))

    def training_step(self, batch, batch_idx):
        ...

    def configure_optimizers(self):
        params = self.parameters()
        optimizer = torch.optim.Adam(params, lr=1e-3)
        return optimizer

# train_dataloader and val_dataloader are assumed to be defined elsewhere
model = LightClassifier()  # Define classifier model
trainer = Trainer(max_epochs=5)  # Define trainer
trainer.fit(model, train_dataloader)
trainer.validate(model, val_dataloader)

Industry applications

Why does training logic matter?

- Ensure precise loss tracking for quality control

- Optimize training pipelines for scalable deployment

Real-world examples:

- Enhance image analysis in healthcare diagnostics

- Support fraud detection in financial services

Let's practice!
