Evaluating Graph RAG with RAGAS

Graph RAG with LangChain and Neo4j

Adam Cowley

Manager, Developer Education at Neo4j

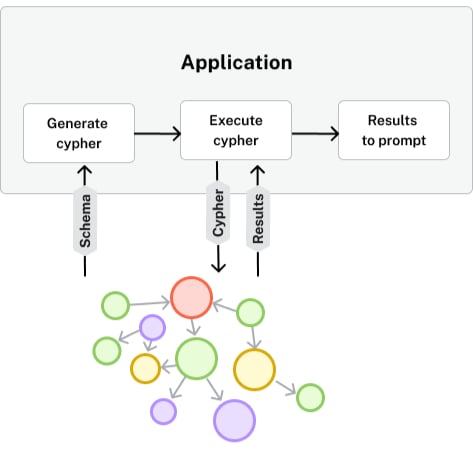

Text-to-Cypher

Vector search

Evaluating Graph RAG

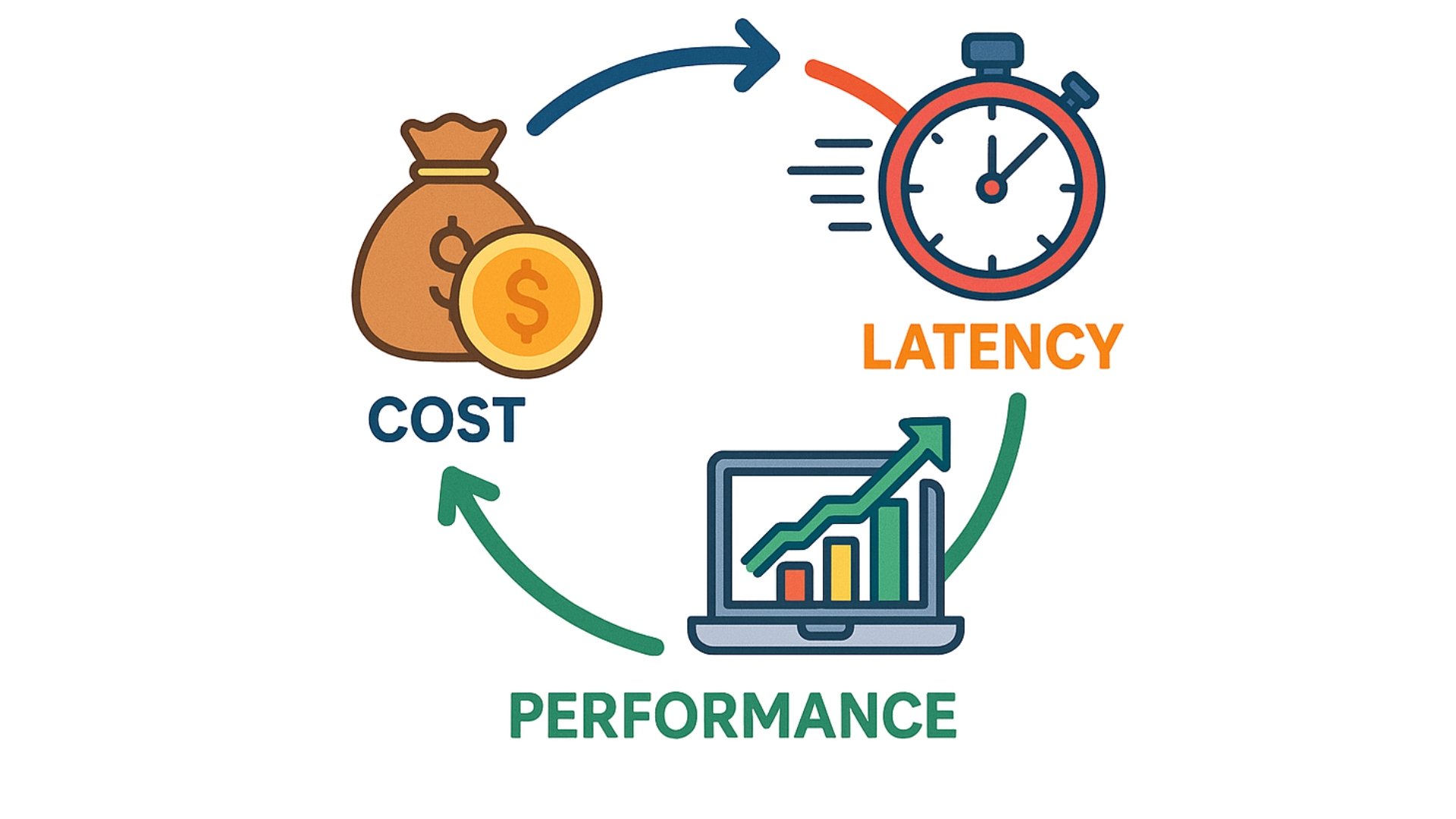

- Evaluating end-to-end generation time with time

- Evaluating token usage and costs with tiktoken

- Evaluating output quality with ragas

  - Context precision

  - Noise sensitivity

1 Image generated with GPT-4o
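A minimal sketch of the timing step, assuming the pipeline can be called as a single function. `answer_question` is a hypothetical stand-in, not part of LangChain or Neo4j; swap in the real chain call.

```python
import time


def answer_question(question: str) -> str:
    """Hypothetical stand-in for a retrieval + generation pipeline."""
    time.sleep(0.01)  # simulate retrieval and LLM latency
    return "Romeo loves Juliet"


def timed(func, *args, **kwargs):
    """Run func and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


response, seconds = timed(answer_question, "Who is Romeo's love?")
print(f"{response!r} generated in {seconds:.3f}s")
```

`time.perf_counter()` is used rather than `time.time()` because it is monotonic and intended for measuring short durations.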

Noise Sensitivity

- Measures amount of irrelevant information in retrieved documents

- Higher in vector search alone

- Returns a score between 0 and 1; lower is better

from ragas.metrics import NoiseSensitivity

metric = NoiseSensitivity(
    llm=evaluator_llm,
    mode="irrelevant"
)

1 https://docs.ragas.io/en/stable/concepts/metrics/available_metrics/noise_sensitivity/
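To make the score concrete, here is a rough sketch of the idea behind the "irrelevant" mode: the share of claims in the response that are wrong and can be traced to an irrelevant retrieved chunk. This is a simplification, not the ragas implementation. In ragas an LLM extracts and judges the claims, whereas the `ClaimVerdict` class and its fields below are hypothetical and filled in by hand.

```python
from dataclasses import dataclass


@dataclass
class ClaimVerdict:
    """Hypothetical per-claim judgement; in ragas an LLM produces these."""
    text: str
    correct: bool                  # does the claim agree with the reference?
    from_irrelevant_context: bool  # is it traced to an irrelevant chunk?


def noise_sensitivity_irrelevant(claims: list[ClaimVerdict]) -> float:
    """Fraction of claims that are wrong and stem from irrelevant context."""
    if not claims:
        return 0.0
    noisy = sum(
        1 for c in claims if not c.correct and c.from_irrelevant_context
    )
    return noisy / len(claims)


claims = [
    ClaimVerdict("Romeo loves Juliet", correct=True, from_irrelevant_context=False),
    ClaimVerdict("Juliet is a Montague", correct=False, from_irrelevant_context=True),
]
print(noise_sensitivity_irrelevant(claims))  # 0.5
```

A response with no incorrect claims scores 0.0, which matches the result shown for the Text-to-Cypher example later in the deck.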

Context Precision

- Measures the proportion of relevant chunks in retrieved documents

- Higher score means retrieved information is more relevant

from ragas.metrics import LLMContextPrecisionWithReference

metric = LLMContextPrecisionWithReference(
    llm=evaluator_llm,
)

1 https://docs.ragas.io/en/stable/concepts/metrics/available_metrics/context_precision/#llm-based-context-precision
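The arithmetic behind the score can be sketched in plain Python, assuming the relevance verdicts are already known. This follows the formula in the ragas documentation as I understand it: the mean of Precision@k over the ranks that hold a relevant chunk. In the real metric an LLM supplies the verdicts; here they are hard-coded, and `context_precision` is an illustrative name.

```python
def context_precision(verdicts: list[int]) -> float:
    """Mean of Precision@k over the ranks k that hold a relevant chunk.

    verdicts[i] is 1 if the chunk at rank i + 1 was judged relevant, else 0.
    """
    relevant_so_far = 0
    weighted_sum = 0.0
    for rank, verdict in enumerate(verdicts, start=1):
        if verdict:
            relevant_so_far += 1
            weighted_sum += relevant_so_far / rank  # Precision@rank
    return weighted_sum / relevant_so_far if relevant_so_far else 0.0


# Same two relevant chunks, different ranking
print(round(context_precision([1, 0, 1]), 3))  # 0.833
print(round(context_precision([0, 1, 1]), 3))  # 0.583
```

The two calls contain the same number of relevant chunks, but the score drops when an irrelevant chunk is ranked first, so the metric rewards retrievers that put relevant context at the top.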

Context Precision

from ragas.metrics import LLMContextPrecisionWithoutReference

metric = LLMContextPrecisionWithoutReference(
    llm=evaluator_llm,
)

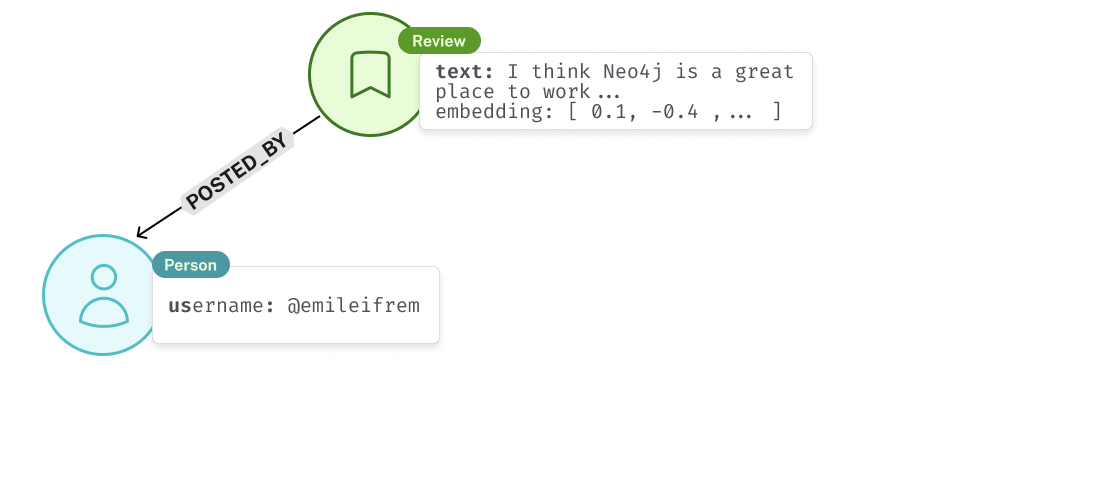

Text-to-Cypher result structure

cypher_result = {
    "user_input": "Who is Romeo's love?",
    "response": "Romeo loves Juliet",
    "retrieved_contexts": [
        {
            "source": "Romeo",
            "target": "Juliet",
            "relationship": "LOVES",
            "sentiment": 0.9837
        },
    ]
}

Text-to-Cypher result structure

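import json

cypher_result = {
    "user_input": "Who is Romeo's love?",
    "response": "Romeo loves Juliet",
    # retrieved_contexts must be a list of strings,
    # so each graph record is serialized with json.dumps
    "retrieved_contexts": [
        json.dumps({
            "source": "Romeo",
            "target": "Juliet",
            "relationship": "LOVES",
            "sentiment": 0.9837
        }),
    ]
}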
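When a Cypher query returns many rows, the serialization step can be wrapped in a small helper. This is a sketch, assuming each row is already a plain dict of JSON-serializable values; `to_ragas_sample` is a hypothetical name, not a ragas function.

```python
import json


def to_ragas_sample(question: str, answer: str, records: list[dict]) -> dict:
    """Build a sample dict, serializing each graph record to a JSON string."""
    return {
        "user_input": question,
        "response": answer,
        "retrieved_contexts": [json.dumps(record) for record in records],
    }


records = [
    {"source": "Romeo", "target": "Juliet",
     "relationship": "LOVES", "sentiment": 0.9837},
]
cypher_result = to_ragas_sample(
    "Who is Romeo's love?", "Romeo loves Juliet", records
)
print(cypher_result["retrieved_contexts"][0])
```

Values that `json.dumps` cannot handle directly, such as date or node objects returned by a database driver, would need converting to plain types first.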

Vector-only result structure

vector_result = {
    "user_input": "Who is Romeo's love?",
    "response": "Romeo loves Juliet",
    "retrieved_contexts": [
        "But, soft! what light through yonder window breaks?...",
        "O, she doth teach the torches to burn bright!"
    ]
}

Hybrid result structure

hybrid_result = {
    "user_input": "Who is Romeo's love?",
    "response": "Romeo loves Juliet",
    "retrieved_contexts": [
        json.dumps({
            "page_content": "But, soft! what light through yonder window breaks? ...",
            "metadata": {"act": 2, "scene": 2, "spoken_to": "Juliet"}
        }),
        # ...
    ]
}
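The hybrid structure keeps each chunk's text and its graph-derived metadata together in one string. A sketch of producing it from retrieved documents follows. `Doc` is a hypothetical stand-in for whatever document type the retriever returns, modelled only on the two fields shown above.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Hypothetical stand-in for a retrieved document (text plus metadata)."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def serialize_docs(docs: list[Doc]) -> list[str]:
    """Turn each document into a JSON string holding text and metadata."""
    return [
        json.dumps({"page_content": d.page_content, "metadata": d.metadata})
        for d in docs
    ]


docs = [
    Doc(
        page_content="But, soft! what light through yonder window breaks? ...",
        metadata={"act": 2, "scene": 2, "spoken_to": "Juliet"},
    ),
]
hybrid_contexts = serialize_docs(docs)
print(hybrid_contexts[0])
```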

Creating an evaluation dataset

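import json
from ragas import EvaluationDataset

cypher_result = {
    "user_input": "Who is Romeo's love?",
    # LLMContextPrecisionWithoutReference judges contexts against the response
    "response": "Romeo loves Juliet",
    "retrieved_contexts": [
        json.dumps({
            "source": "Romeo",
            "target": "Juliet",
            "relationship": "LOVES",
            "sentiment": 0.9837
        }),
    ]
}

cypher_dataset = EvaluationDataset.from_list([cypher_result])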

Choosing an LLM for evaluation

from langchain_openai import ChatOpenAI

# Choose an LLM to perform the evaluation
llm = ChatOpenAI(
    model="gpt-4o-mini",
    temperature=0
)

# Wrap in LangchainLLMWrapper
from ragas.llms import LangchainLLMWrapper

evaluator_llm = LangchainLLMWrapper(llm)

Evaluating responses

from ragas import evaluate, EvaluationDataset
from ragas.metrics import LLMContextPrecisionWithoutReference, NoiseSensitivity

cypher_scores = evaluate(
    dataset=cypher_dataset,
    metrics=[
        LLMContextPrecisionWithoutReference(llm=evaluator_llm),
        NoiseSensitivity(llm=evaluator_llm, mode="irrelevant")
    ]
)

{'llm_context_precision_without_reference': 1.0000,

'noise_sensitivity(mode=irrelevant)': 0.0000}

Let's practice!
