Memory graphs

Graph RAG with LangChain and Neo4j

Adam Cowley

Manager, Developer Education at Neo4j

Memory in RAG applications

Short-term memory

- Short-lived, scoped lifespan

- Conversation history

Long-term memory

- Semantic memory: facts about the world

- Useful for hyper-personalization

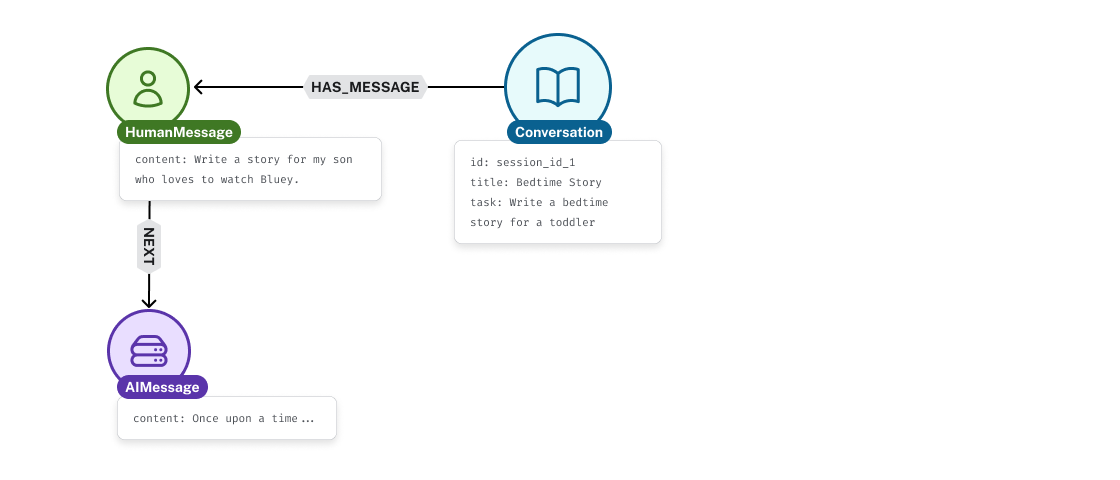

Short-term memory

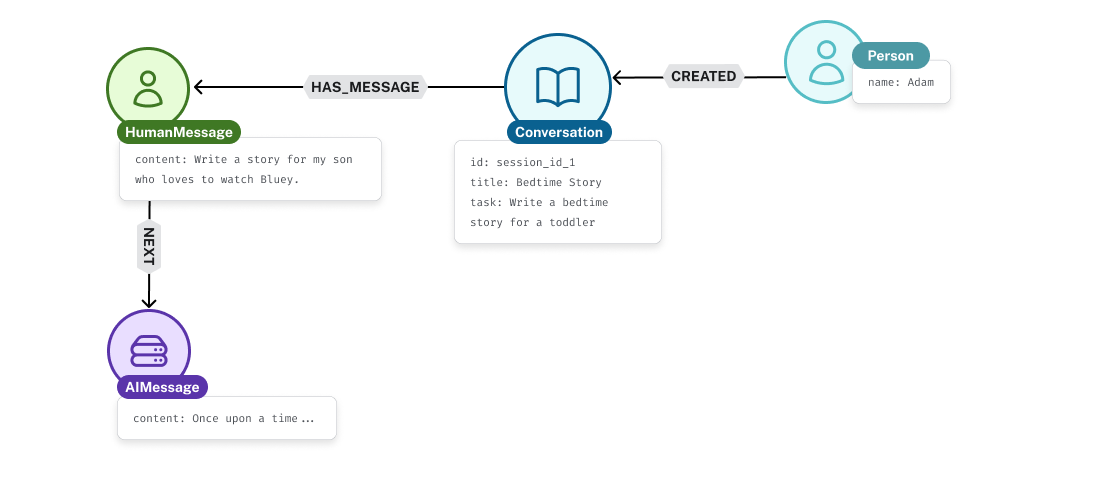

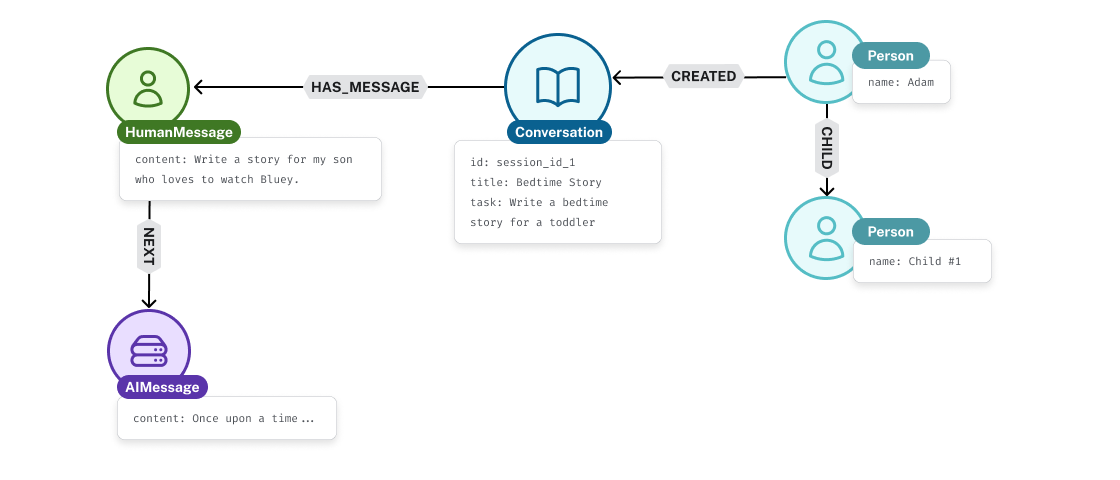

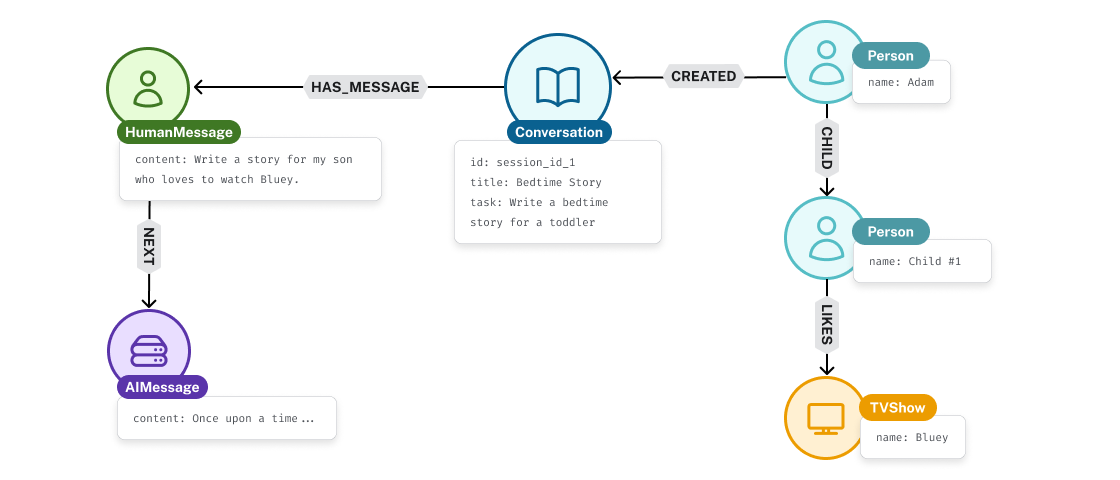

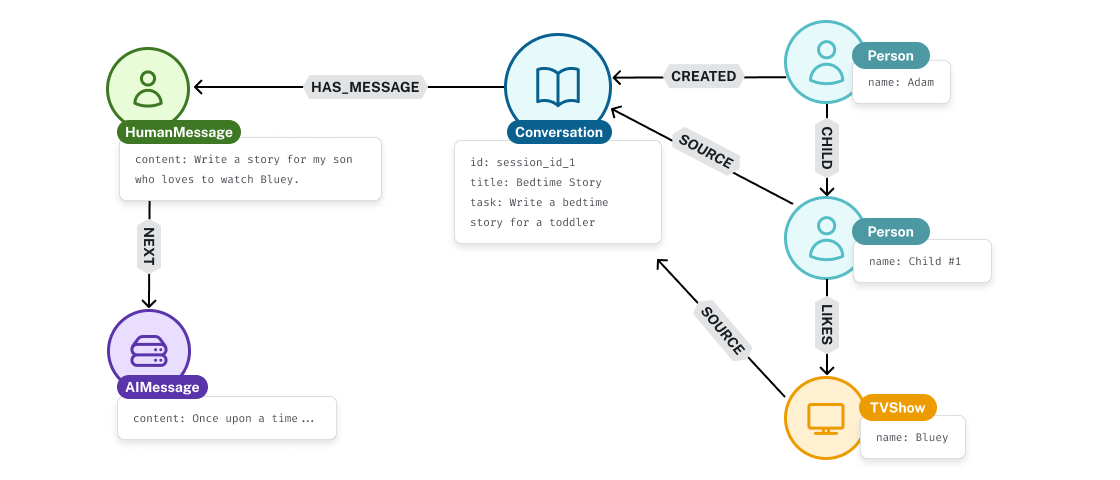

Short-term to long-term memory

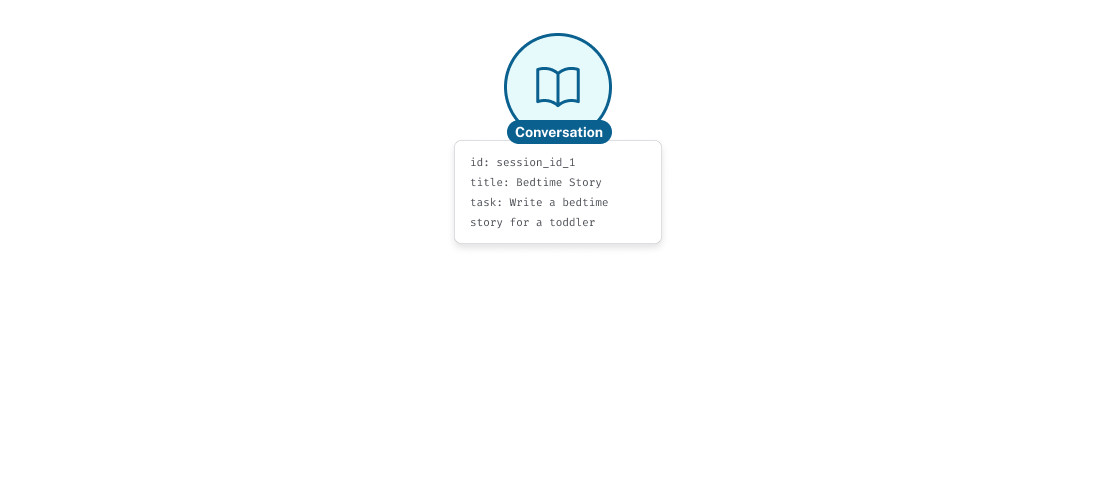

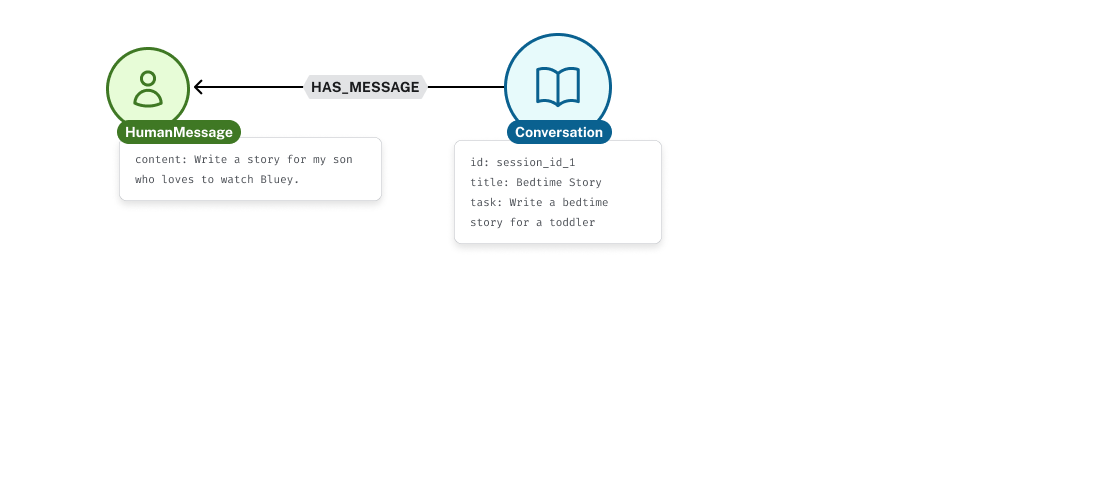

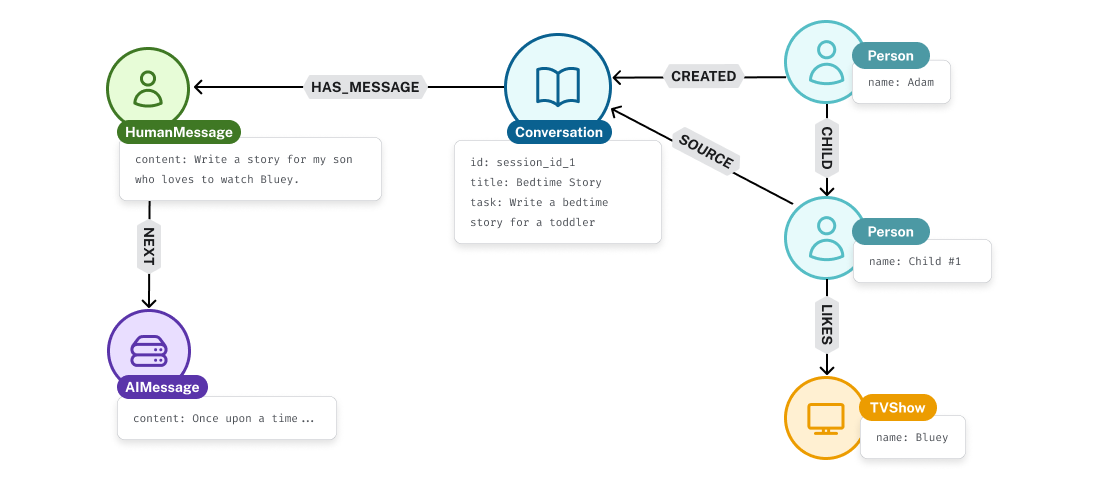

Neo4j chat message history

from langchain_neo4j import Neo4jChatMessageHistory

history = Neo4jChatMessageHistory(
    url=NEO4J_URI,
    username=NEO4J_USERNAME,
    password=NEO4J_PASSWORD,
    session_id="session_id_1",
)

# Add a human message
history.add_user_message("hi!")

# Add an AI message
history.add_ai_message("what's up?")

Problems with storing everything

Neo4j chat message history

from langchain_neo4j import Neo4jChatMessageHistory

history = Neo4jChatMessageHistory(
    url=NEO4J_URI,
    username=NEO4J_USERNAME,
    password=NEO4J_PASSWORD,
    session_id="session_id_1",
    window=10,  # defaults to 3 messages
)

# Get history
print(history.messages)

[HumanMessage(content='hi!', ...), AIMessage(content="what's up?", ...)]
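The effect of the `window` argument can be pictured as a sliding window over the stored conversation: only the most recent messages are returned, which keeps the prompt short. The following is a minimal sketch in plain Python, not the library's implementation. The `Message` class and `last_messages` function are hypothetical names used for illustration, and the library's exact counting of messages may differ.

```python
from dataclasses import dataclass


@dataclass
class Message:
    # Hypothetical stand-in for a chat message; not a LangChain class.
    role: str      # "human" or "ai"
    content: str


def last_messages(messages: list[Message], window: int) -> list[Message]:
    """Return at most the `window` most recent messages, oldest first."""
    if window <= 0:
        # Guard: messages[-0:] would return the whole list.
        return []
    return messages[-window:]


conversation = [Message("human", f"message {i}") for i in range(5)]
recent = last_messages(conversation, window=2)
print([m.content for m in recent])
```

Older messages stay in storage in this sketch; the window only limits what is read back for the next prompt.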

Summarizing conversations

from pydantic import BaseModel, Field

class ConversationFact(BaseModel):
    """
    A class that holds the facts from a conversation
    in a format of object, subject, predicate.
    """
    object: str = Field(description="The object of the fact. For example, 'Adam'")
    subject: str = Field(description="The subject of the fact. For example, 'Ice cream'")
    relationship: str = Field(description="The relationship between object and subject. Eg: 'LOVES'")

class ConversationFacts(BaseModel):
    """
    A class that holds a list of ConversationFact objects.
    """
    facts: list[ConversationFact] = Field(description="A list of ConversationFact objects.")

Summarizing conversations

from langchain_openai import ChatOpenAI

llm_with_output = (
    ChatOpenAI(model="gpt-4o-mini", openai_api_key=OPENAI_API_KEY)
    .with_structured_output(ConversationFacts)
)

Summarizing conversations

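from langchain_core.prompts import (
    ChatPromptTemplate,
    MessagesPlaceholder,
    SystemMessagePromptTemplate,
)

# from_messages expects a single list of message templates
prompt = ChatPromptTemplate.from_messages([
    SystemMessagePromptTemplate.from_template("Extract the facts from the conversation."),
    MessagesPlaceholder(variable_name="history"),
])

chain = prompt | llm_with_output

chain.invoke({"history": history.messages})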

ConversationFacts(facts=[
    ConversationFact(object='child', subject='Bluey', relationship='LOVES')
])
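One way to move extracted facts like these into long-term memory is to turn each one into a Cypher `MERGE` statement. The sketch below is an assumption, not something shown on the slides. The `Fact` dataclass mirrors the fields of `ConversationFact`, while the `fact_to_cypher` function and the `Entity` label are hypothetical names chosen for illustration. Because Cypher cannot take a relationship type as a query parameter, the type is sanitized and inlined, while node names are passed as parameters.

```python
import re
from dataclasses import dataclass


@dataclass
class Fact:
    # Mirrors the fields of ConversationFact; a plain dataclass
    # keeps this sketch free of third-party dependencies.
    object: str
    subject: str
    relationship: str


def fact_to_cypher(fact: Fact) -> tuple[str, dict[str, str]]:
    """Build a MERGE query and its parameters for one fact.

    The `Entity` label is a hypothetical choice for this sketch.
    """
    # Relationship types cannot be parameters, so restrict them to
    # letters, digits, and underscores before inlining.
    rel = re.sub(r"[^A-Za-z0-9_]", "_", fact.relationship.strip()).upper()
    if not rel or rel[0].isdigit():
        raise ValueError(f"Invalid relationship type: {fact.relationship!r}")

    query = (
        "MERGE (a:Entity {name: $object}) "
        "MERGE (b:Entity {name: $subject}) "
        f"MERGE (a)-[:{rel}]->(b)"
    )
    return query, {"object": fact.object, "subject": fact.subject}


query, params = fact_to_cypher(Fact("child", "Bluey", "LOVES"))
print(query)
print(params)
```

The query and parameters could then be passed to a Neo4j driver or graph wrapper. Using `MERGE` rather than `CREATE` means that storing the same fact twice does not duplicate nodes or relationships.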

Let's practice!
