Zero-shot video classification

Multi-Modal Models with Hugging Face

James Chapman

Curriculum Manager, DataCamp

Zero-shot video classification

Business case:

- A competitor's ads are driving more sales

- Do our competitor's ads convey more positive emotion?

1 https://repository.duke.edu/dc/adviews/dmbb37204

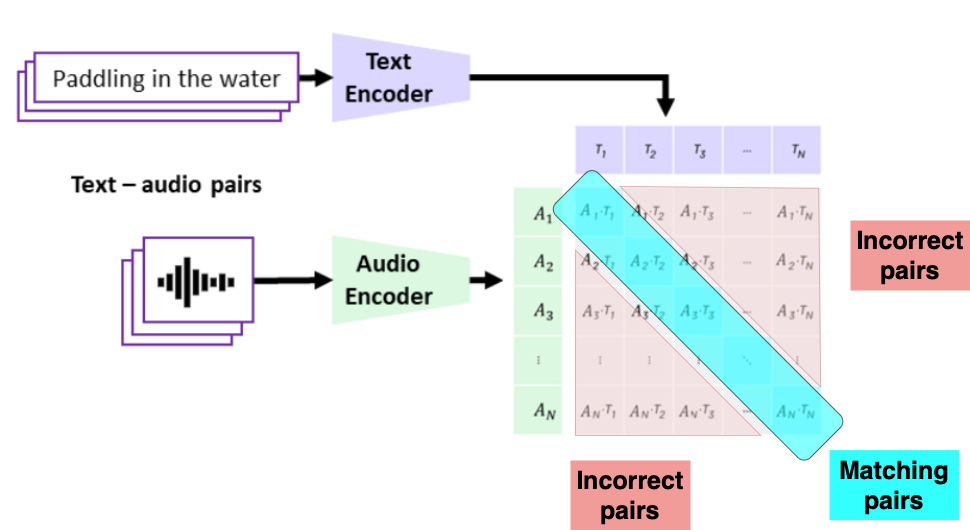

CLAP

- Contrastive Language Audio Pretraining

- Trained in a similar way to CLIP

- Separate text encoder and audio encoder

- Trained on 633k pairs of audio clips and matching text descriptions

- Contrastive pretraining aligns audio and text embeddings in a shared space
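Contrastive pretraining rewards a matching audio-caption pair for scoring higher than every mismatched pair in the same batch. A minimal NumPy sketch of a symmetric contrastive (InfoNCE-style) loss, assuming L2-normalized embeddings and a fixed temperature of 0.07 chosen for illustration. The helper names and the toy one-hot embeddings are hypothetical; this is not the CLAP training code.

```python
import numpy as np

def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along one axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_loss(audio_emb: np.ndarray, text_emb: np.ndarray, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE-style loss; row i of audio_emb is paired with row i of text_emb."""
    a, t = l2_normalize(audio_emb), l2_normalize(text_emb)
    logits = a @ t.T / temperature      # (batch, batch) similarity matrix
    idx = np.arange(logits.shape[0])    # true pairs sit on the diagonal
    audio_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()  # right caption for each clip
    text_to_audio = -log_softmax(logits, axis=0)[idx, idx].mean()  # right clip for each caption
    return float((audio_to_text + text_to_audio) / 2)

# Toy batch of 4 pairs with one-hot embeddings (hypothetical data)
text_emb = np.eye(4)
aligned_loss = contrastive_loss(np.eye(4), text_emb)                   # each clip matches its caption
mismatched_loss = contrastive_loss(np.eye(4)[[1, 2, 3, 0]], text_emb)  # clips shifted by one position
print(aligned_loss, mismatched_loss)
```

With these toy inputs the aligned batch gives a loss close to 0, while the shifted batch gives roughly 14.3 (about 1 / temperature), which is the signal that pulls matching audio and text embeddings together during pretraining.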

1 https://arxiv.org/html/2211.06687v4

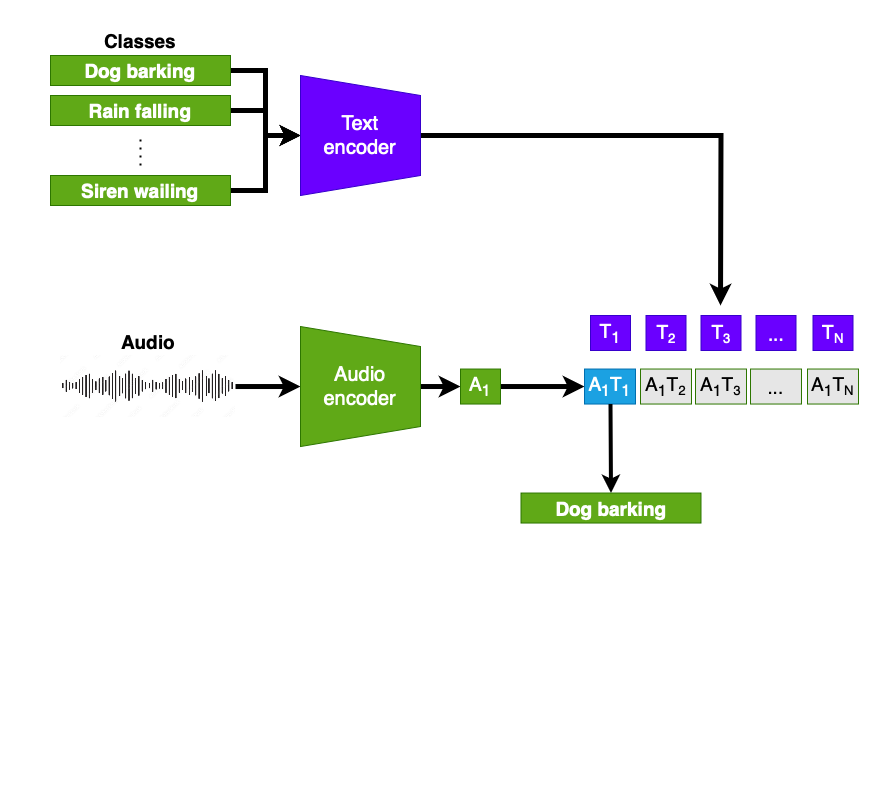

Approach: multi-modal ZSL

Zero-shot learning with CLAP

1 https://arxiv.org/html/2310.02298v3

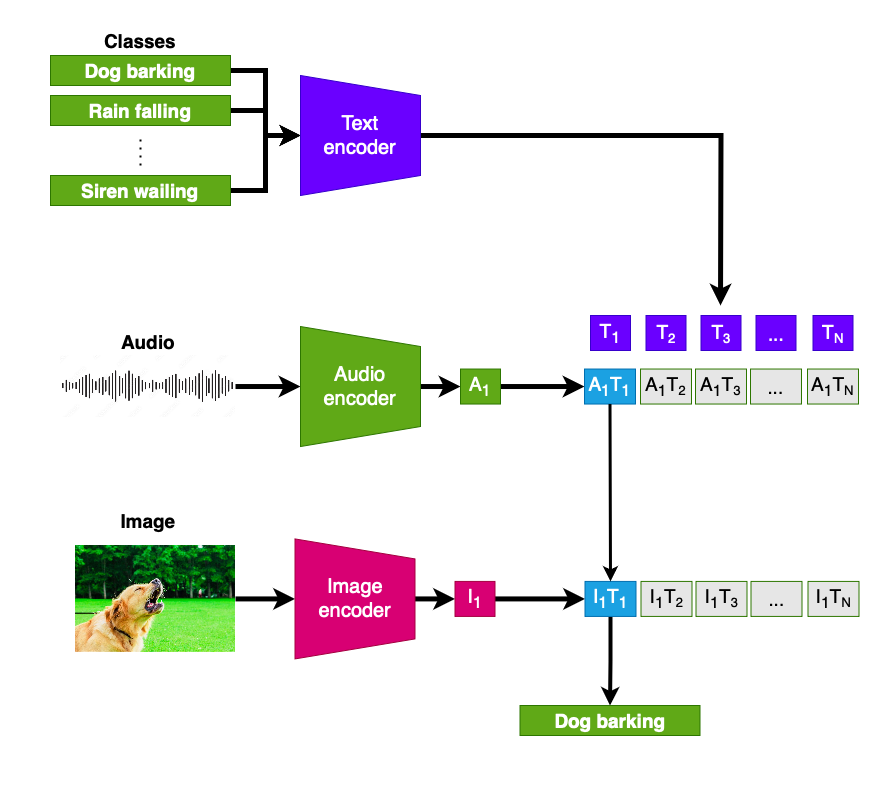
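Zero-shot classification with a contrastive model such as CLAP (or CLIP) compares one media embedding against the text embedding of each candidate label, then turns the similarities into probabilities with a softmax. A toy NumPy sketch, assuming the embeddings are already computed; the 3-dimensional vectors, the helper name `zero_shot_probs`, and the scale of 100 are made-up values for illustration.

```python
import numpy as np

def zero_shot_probs(media_emb: np.ndarray, label_embs: np.ndarray, logit_scale: float = 100.0) -> np.ndarray:
    """Softmax over scaled cosine similarities between one media embedding and each label embedding."""
    media = media_emb / np.linalg.norm(media_emb)
    label_dirs = label_embs / np.linalg.norm(label_embs, axis=1, keepdims=True)
    logits = logit_scale * (label_dirs @ media)  # one similarity score per label
    logits -= logits.max()                       # stabilize the exponentials
    exp = np.exp(logits)
    return exp / exp.sum()

label_names = ["joy", "fear", "neutral"]
label_embs = np.eye(3)                  # hypothetical text embeddings, one per label
audio_emb = np.array([0.9, 0.1, 0.3])   # hypothetical audio embedding, closest to "joy"
probs = zero_shot_probs(audio_emb, label_embs)
print(label_names[int(np.argmax(probs))])  # joy
```

Because the labels are only text, swapping in a new set of classes requires no retraining, which is what makes the approach zero-shot.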

Approach: multi-modal ZSL

Zero-shot learning with CLAP + zero-shot learning with CLIP:

- A common set of text class labels for both models

- Combined probabilities from CLIP and CLAP
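Combining the two models only makes sense when both score the same labels. A minimal late-fusion sketch, assuming each model returns a `{label: probability}` dict; the `fuse_scores` helper, its `image_weight` parameter, and the input scores are hypothetical, and a weight of 0.5 reduces to a plain average.

```python
def fuse_scores(image_scores: dict, audio_scores: dict, image_weight: float = 0.5) -> dict:
    """Weighted average of two {label: probability} dicts over the same labels."""
    if image_scores.keys() != audio_scores.keys():
        raise ValueError("Both models must score the same set of labels")
    audio_weight = 1.0 - image_weight
    return {
        label: image_weight * image_scores[label] + audio_weight * audio_scores[label]
        for label in image_scores
    }

image_scores = {"joy": 0.2, "fear": 0.5, "neutral": 0.3}  # hypothetical CLIP output
audio_scores = {"joy": 0.6, "fear": 0.1, "neutral": 0.3}  # hypothetical CLAP output
fused = fuse_scores(image_scores, audio_scores)
print(max(fused, key=fused.get))  # joy
```

Here neither model alone is decisive ("fear" wins on images, "joy" on audio), but the fused scores favor "joy". Raising or lowering `image_weight` is one way to trust one modality more than the other.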

Video and audio

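Trimming an .mp4 and extracting its audio stream with moviepy:

```python
from moviepy.editor import VideoFileClip
from moviepy.video.io.ffmpeg_tools import ffmpeg_extract_subclip

# Cut the first 5 seconds (t=0 to t=5) of the advert into a new file
ffmpeg_extract_subclip("advert.mp4", 0, 5, "advert_5s.mp4")

# Load the trimmed clip and write its audio track to a separate .mp3 file
video = VideoFileClip("advert_5s.mp4")
audio = video.audio
audio.write_audiofile("advert_5s.mp3")
```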

Preparing the audio and video

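```python
from decord import VideoReader
from PIL import Image
from datasets import Dataset, Audio

# Files created in the previous step
video_path = "advert_5s.mp4"
audio_path = "advert_5s.mp3"

# Read the first 20 frames as a (20, height, width, 3) uint8 array;
# decord returns frames in RGB order, which is what PIL expects
video_reader = VideoReader(video_path)
video = video_reader.get_batch(list(range(20))).asnumpy()
video = [Image.fromarray(frame) for frame in video]

# Load the audio resampled to 48 kHz, the rate CLAP's feature extractor expects
audio_dataset = Dataset.from_dict({"audio": [audio_path]}).cast_column("audio", Audio(sampling_rate=48_000))
audio_sample = audio_dataset[0]["audio"]["array"]
```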
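Reading `range(20)` takes only the first 20 frames, which at a typical 25-30 fps covers less than a second of the 5-second clip. One option is to sample frame indices evenly across the whole clip. A small sketch; `evenly_spaced_indices` is a hypothetical helper, not part of decord.

```python
import numpy as np

def evenly_spaced_indices(num_frames: int, num_samples: int) -> list[int]:
    """Pick num_samples frame indices spread evenly from the first to the last frame."""
    if num_samples >= num_frames:
        return list(range(num_frames))
    return np.linspace(0, num_frames - 1, num_samples).round().astype(int).tolist()

print(evenly_spaced_indices(150, 5))  # [0, 37, 74, 112, 149]
```

The result can be passed to the reader in place of the fixed range, for example `video_reader.get_batch(evenly_spaced_indices(len(video_reader), 20))`, so the averaged CLIP scores reflect the whole advert rather than its opening moment.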

Video predictions

```python
from transformers import pipeline

emotions = ["joy", "fear", "anger", "sadness", "disgust", "surprise", "neutral"]

# CLIP scores every frame against the emotion labels
image_classifier = pipeline(model="openai/clip-vit-large-patch14", task="zero-shot-image-classification")
predictions = image_classifier(video, candidate_labels=emotions)

# One {label: score} dict per frame
scores = [{l["label"]: l["score"] for l in prediction} for prediction in predictions]

# Average each emotion's score across all frames
avg_image_scores = {emotion: sum(s[emotion] for s in scores) / len(scores) for emotion in emotions}
print(f"Average scores: {avg_image_scores}")
```

Average scores: {'joy': 0.10063326267991216, 'fear': 0.0868348691612482, ...}

Audio predictions and combination

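```python
from transformers import pipeline

# CLAP scores the audio track against the same emotion labels
audio_classifier = pipeline(model="laion/clap-htsat-unfused", task="zero-shot-audio-classification")
audio_scores = audio_classifier(audio_sample, candidate_labels=emotions)
audio_scores = {l["label"]: l["score"] for l in audio_scores}

# Fuse the two modalities with an equal-weight average per emotion
multimodal_scores = {emotion: (avg_image_scores[emotion] + audio_scores[emotion]) / 2 for emotion in emotions}
print(f"Multimodal scores: {multimodal_scores}")
```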

Multimodal scores: {'joy': 0.3109628591220826, 'fear': 0.09013736313208938, 'anger': 0.011454355076421053, 'sadness': 0.06018101833760738, 'disgust': 0.07207315033301712, 'surprise': 0.252118631079793, 'neutral': 0.2030726027674973}
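To answer the business question, the fused scores can be ranked. A short sketch using the multimodal scores printed above; since each model outputs a probability distribution over the same labels, their average should also sum to about 1, which makes a handy sanity check.

```python
import math

# Fused scores copied from the output above
multimodal_scores = {
    "joy": 0.3109628591220826, "fear": 0.09013736313208938, "anger": 0.011454355076421053,
    "sadness": 0.06018101833760738, "disgust": 0.07207315033301712,
    "surprise": 0.252118631079793, "neutral": 0.2030726027674973,
}

# The average of two probability distributions is still a distribution
print(math.isclose(sum(multimodal_scores.values()), 1.0, abs_tol=1e-6))  # True

# Rank emotions from most to least likely
ranking = sorted(multimodal_scores, key=multimodal_scores.get, reverse=True)
print(ranking[:3])  # ['joy', 'surprise', 'neutral']
```

For this 5-second excerpt, "joy" and "surprise" dominate, while negative emotions such as "anger" and "sadness" receive little weight.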

Let's practice!
