Speech recognition and audio generation

Multi-Modal Models with Hugging Face

James Chapman

Curriculum Manager, DataCamp

Speech

What are the important parts of speech waveforms?

- Pitch: typical fundamental frequency of 85-180 Hz for adult male voices and 165-255 Hz for adult female voices

- Stress: language-, accent-, and emotion-specific

- Rhythm: influenced by context, emotion, and language

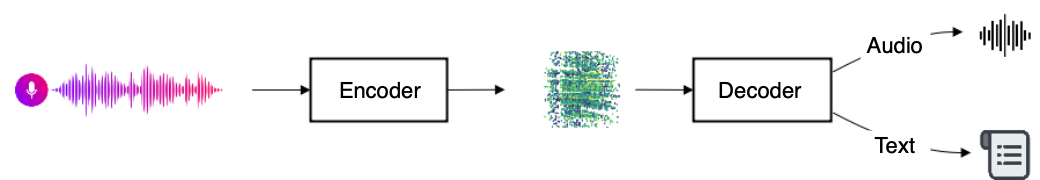
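As a rough illustration of the pitch ranges above, the sketch below estimates the fundamental frequency of a synthetic tone by picking the strongest autocorrelation lag. The 125 Hz test tone, the 8 kHz sample rate, and the helper name `estimate_pitch` are assumptions chosen for the example, not part of the course material. Real speech would also need windowing and voiced/unvoiced detection.

```python
import math

def estimate_pitch(samples, sample_rate, fmin=85.0, fmax=255.0):
    """Estimate the fundamental frequency (Hz) from the autocorrelation peak.

    Only lags that correspond to frequencies between fmin and fmax are searched,
    which matches the speech pitch ranges quoted on the slide.
    """
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the signal and a copy of itself shifted by `lag`
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

sample_rate = 8000  # assumption: low rate keeps the pure-Python loop fast
tone = [math.sin(2 * math.pi * 125.0 * n / sample_rate) for n in range(1600)]
pitch = estimate_pitch(tone, sample_rate)
print(round(pitch, 1))
```

A pure tone repeats exactly once per period, so the best lag is the period in samples and the estimate falls out as `sample_rate / best_lag`.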

Automatic speech recognition

- Text and audio are both sequential

- Examples: transcription, translation, text-to-speech

Automatic speech recognition

- Whisper tiny model: 39M parameters, 150MB model size

- Training data: 680k hours (labeled)

from transformers import WhisperProcessor, WhisperForConditionalGeneration

processor = WhisperProcessor.from_pretrained("openai/whisper-tiny")

model = WhisperForConditionalGeneration.from_pretrained("openai/whisper-tiny")
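The figures quoted for the tiny model can be sanity-checked with a little arithmetic. This sketch assumes each parameter is stored as a 32-bit float, which the slide does not state, and converts the training-data duration to years purely for scale.

```python
params = 39_000_000          # parameter count quoted on the slide
bytes_per_param = 4          # assumption: weights stored as float32

size_mb = params * bytes_per_param / 1e6
print(f"approx. model size: {size_mb:.0f} MB")

training_hours = 680_000     # labeled audio quoted on the slide
training_years = training_hours / (24 * 365)
print(f"training audio: about {training_years:.1f} years of continuous speech")
```

Under that assumption the estimate lands close to the 150MB on the slide; the small gap is expected, since the exact figure depends on how the checkpoint is serialized.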

1 https://github.com/openai/whisper

Automatic speech recognition

from datasets import load_dataset, Audio

dataset = load_dataset("CSTR-Edinburgh/vctk")["train"]

dataset = dataset.cast_column("audio", Audio(sampling_rate=16_000))

sample = dataset[0]["audio"]

input_preprocessed = processor(sample["array"], sampling_rate=sample["sampling_rate"], return_tensors="pt")

predicted_ids = model.generate(input_preprocessed.input_features)

transcription = processor.batch_decode(predicted_ids, skip_special_tokens=True)

print(transcription)

['Please cool Stella.']

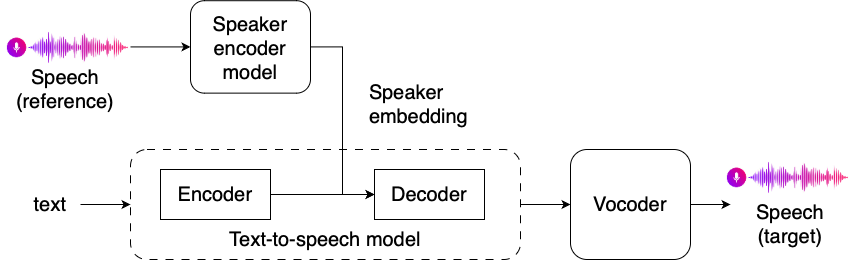
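The `cast_column("audio", Audio(sampling_rate=16_000))` step matters because Whisper expects 16 kHz input. To show what resampling does to the array, here is a deliberately naive sketch using linear interpolation. The helper `resample_linear` and the 48 kHz source rate are assumptions for illustration; the `datasets` library uses a proper resampler with anti-aliasing, which this sketch omits.

```python
import math

def resample_linear(samples, orig_rate, target_rate):
    """Naive resampler: linear interpolation, no anti-aliasing filter."""
    n_out = int(len(samples) * target_rate / orig_rate)
    last = len(samples) - 1
    out = []
    for k in range(n_out):
        pos = k * orig_rate / target_rate   # fractional index into the input
        i = int(pos)
        frac = pos - i
        nxt = samples[min(i + 1, last)]
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
    return out

orig_rate, target_rate = 48_000, 16_000     # assumption: 48 kHz source audio
one_second = [math.sin(2 * math.pi * 220.0 * n / orig_rate) for n in range(orig_rate)]
resampled = resample_linear(one_second, orig_rate, target_rate)
print(len(one_second), "->", len(resampled))
```

One second of audio stays one second long; only the number of samples used to represent it changes.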

Audio generation

Three components to generate audio:

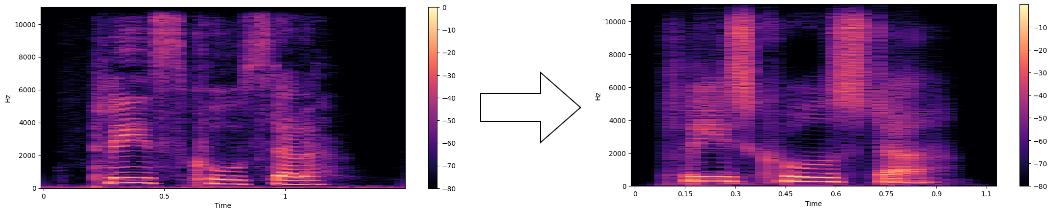

- Preprocessor: resampling and feature extraction

- Model: feature transformation

- Vocoder: a separate generative model for audio waveforms

from transformers import SpeechT5Processor, SpeechT5ForSpeechToSpeech, SpeechT5HifiGan

processor = SpeechT5Processor.from_pretrained("microsoft/speecht5_vc")

model = SpeechT5ForSpeechToSpeech.from_pretrained("microsoft/speecht5_vc")

vocoder = SpeechT5HifiGan.from_pretrained("microsoft/speecht5_hifigan")
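The three components form a chain: waveform in, features, transformed features, waveform out. The toy sketch below shows only that data flow. All three functions are hypothetical stand-ins with made-up logic and bear no relation to how SpeechT5 or HiFi-GAN actually compute their outputs.

```python
import math

def preprocess(waveform):
    """Stand-in preprocessor: zero-mean, unit-variance normalization."""
    mean = sum(waveform) / len(waveform)
    var = sum((x - mean) ** 2 for x in waveform) / len(waveform)
    std = math.sqrt(var) or 1.0   # avoid dividing by zero on silent input
    return [(x - mean) / std for x in waveform]

def toy_model(features, frame_size=4):
    """Stand-in model: reduces the input to one value per frame."""
    return [sum(features[i:i + frame_size]) / frame_size
            for i in range(0, len(features) - frame_size + 1, frame_size)]

def toy_vocoder(frames, frame_size=4):
    """Stand-in vocoder: expands each frame back into waveform samples."""
    return [value for value in frames for _ in range(frame_size)]

waveform = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]
features = preprocess(waveform)
frames = toy_model(features)
audio_out = toy_vocoder(frames)
print(len(waveform), len(frames), len(audio_out))
```

The point of the separation is that the model works on a compact frame-level representation, and a dedicated vocoder is responsible for turning those frames back into audible samples.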

Speech embeddings

Generating speaker embeddings

- Pre-trained encoder: audio waveform array → encoded array (typically 512 dimensions)

from speechbrain.inference.speaker import EncoderClassifier

speaker_model = EncoderClassifier.from_hparams(source="speechbrain/spkrec-xvect-voxceleb")

import torch

speaker_embeddings = speaker_model.encode_batch(torch.tensor(dataset[0]["audio"]["array"]))

speaker_embeddings = torch.nn.functional.normalize(speaker_embeddings, dim=2).squeeze().unsqueeze(0)  # shape: (1, 512)
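The `normalize` call scales each embedding to unit length, so that only its direction carries speaker information. A minimal sketch of that step, together with a cosine-similarity comparison, might look like the following. The 4-dimensional vectors are made-up values standing in for real 512-dimensional embeddings, and the helper names are hypothetical.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def cosine_similarity(a, b):
    """Dot product of the two unit-length vectors."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))

# Hypothetical embeddings: emb_b points the same way as emb_a, emb_c does not
emb_a = [0.3, -1.2, 0.8, 2.0]
emb_b = [0.6, -2.4, 1.6, 4.0]
emb_c = [-2.0, 0.1, 0.5, -0.3]

unit_a = l2_normalize(emb_a)
print(math.sqrt(sum(x * x for x in unit_a)))
print(cosine_similarity(emb_a, emb_b), cosine_similarity(emb_a, emb_c))
```

After normalization, two embeddings of the same voice at different loudness or scale compare as nearly identical, which is the property a speaker-conditioned model relies on.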

Audio generation

inputs = processor(audio=dataset[0]["audio"]["array"], sampling_rate=dataset[0]["audio"]["sampling_rate"], return_tensors="pt")

speech = model.generate_speech(inputs["input_values"], speaker_embeddings, vocoder=vocoder)
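Once `speech` has been generated, a natural next step is to save it for listening. The sketch below writes a list of float samples to a 16-bit mono WAV file using only the standard library. The 16 kHz sample rate, the output file name, and the helper `save_wav` are assumptions, and a synthetic tone stands in for `speech.tolist()` so the example runs without the model.

```python
import math
import os
import struct
import tempfile
import wave

def save_wav(path, samples, sample_rate=16_000):
    """Write float samples in [-1, 1] as 16-bit mono PCM."""
    clipped = [max(-1.0, min(1.0, s)) for s in samples]
    pcm = struct.pack(f"<{len(clipped)}h", *(int(s * 32767) for s in clipped))
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)      # mono
        wav_file.setsampwidth(2)      # 2 bytes = 16-bit samples
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(pcm)

# Stand-in for speech.tolist(): half a second of a 220 Hz tone
fake_speech = [0.5 * math.sin(2 * math.pi * 220.0 * n / 16_000) for n in range(8_000)]
out_path = os.path.join(tempfile.gettempdir(), "speech_sketch.wav")
save_wav(out_path, fake_speech)

# Read the file back to confirm what was written
with wave.open(out_path, "rb") as wav_file:
    n_frames, rate = wav_file.getnframes(), wav_file.getframerate()
print(n_frames, rate)
```

With the real model output, `fake_speech` would be replaced by the generated waveform converted to a Python list.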

Let's practice!
