Pipeline tasks and evaluations

Multi-Modal Models with Hugging Face

James Chapman

Curriculum Manager, DataCamp

Pipelines vs. model components

Current approach

from transformers import BlipProcessor, BlipForConditionalGeneration

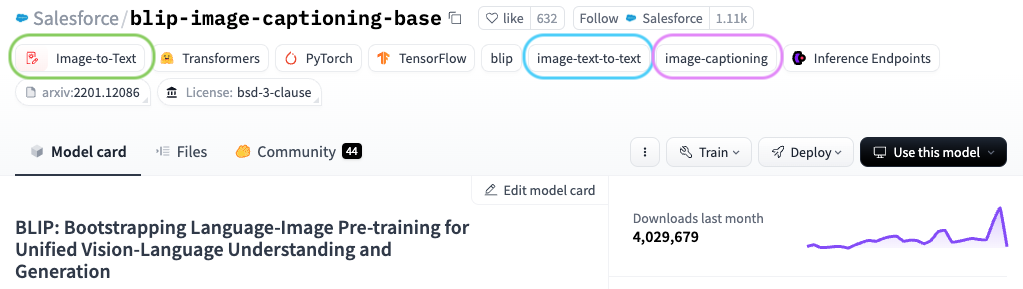

checkpoint = "Salesforce/blip-image-captioning-base"

processor = BlipProcessor.from_pretrained(checkpoint)

model = BlipForConditionalGeneration.from_pretrained(checkpoint)

Pipelines

from transformers import pipeline

pipe = pipeline("image-to-text", model=checkpoint)

Example comparison

Preprocessor and model directly

inputs = processor(images=image, return_tensors="pt")

gen = model.generate(**inputs)

processor.decode(gen[0], skip_special_tokens=True)

Pipeline

pipe(image)

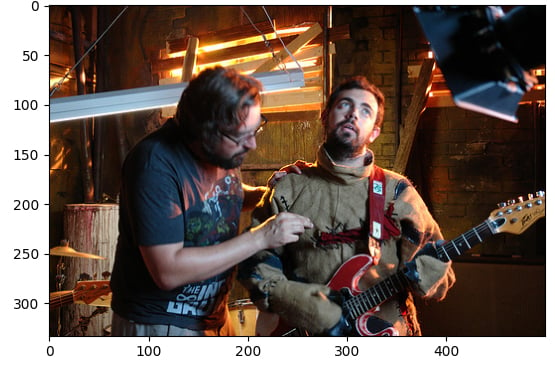

[{'generated_text': 'a man wearing a black shirt'}]
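To make the comparison concrete, here is a minimal sketch of the three stages a pipeline chains together: preprocess, generate, decode. `ToyPipeline`, `VOCAB`, and the lambda components are hypothetical stand-ins invented for illustration. They are not part of `transformers`, and no real model is loaded.

```python
from typing import Callable, Dict, List


class ToyPipeline:
    """Hypothetical stand-in for the stages a pipeline wraps (not the transformers API)."""

    def __init__(self, preprocess: Callable, generate: Callable, decode: Callable):
        self.preprocess = preprocess  # raw input -> model inputs
        self.generate = generate      # model inputs -> output token ids
        self.decode = decode          # token ids -> text

    def __call__(self, raw_input) -> List[Dict[str, str]]:
        inputs = self.preprocess(raw_input)
        token_ids = self.generate(inputs)
        text = self.decode(token_ids)
        # Mirror the list-of-dicts shape of the pipeline output shown above.
        return [{"generated_text": text}]


# Made-up vocabulary and components standing in for a real processor and model.
VOCAB = {0: "a", 1: "man", 2: "wearing", 3: "a", 4: "black", 5: "shirt"}

toy_pipe = ToyPipeline(
    preprocess=lambda image: {"pixel_values": image},
    generate=lambda inputs: list(range(len(VOCAB))),
    decode=lambda ids: " ".join(VOCAB[i] for i in ids),
)

result = toy_pipe([[0.0, 1.0], [1.0, 0.0]])  # a fake 2x2 "image"
print(result)
```

The point of the sketch is the call shape: one call on raw input replaces three explicit steps, at the cost of less control over each stage.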

Finding models and tasks

Find models for a pipeline via the API:

from huggingface_hub import HfApi

api = HfApi()

models = list(api.list_models(task="text-to-image", limit=5))

pipe = pipeline("text-to-image", models[0].id)

Passing options to models

MusicgenForConditionalGeneration under the hood

pipe = pipeline(task="text-to-audio", model="facebook/musicgen-small", framework="pt")

generate_kwargs = {"temperature": 0.8, "max_new_tokens": 20}

outputs = pipe("Classic rock riff", generate_kwargs=generate_kwargs)

- temperature (typically 0-1): controls randomness and creativity

- max_new_tokens: limits the number of generated tokens
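As a rough illustration of why lower temperatures give less random output, the sketch below applies temperature scaling to a softmax over made-up token scores. This is the general technique, written in plain Python. It is not MusicGen's internal code, and the function name `softmax_with_temperature` and the `logits` values are assumptions chosen for the example.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Divide logits by the temperature, then normalize them into probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens

sharp = softmax_with_temperature(logits, temperature=0.2)  # near-deterministic
flat = softmax_with_temperature(logits, temperature=1.0)   # more spread out

print([round(p, 3) for p in sharp])
print([round(p, 3) for p in flat])
```

With the lower temperature, almost all probability mass lands on the highest-scoring token, so sampling becomes more predictable. Higher values spread the mass across candidates.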

Evaluating pipeline performance

- Accuracy: total proportion of correct classifications

- Precision: how often class predictions are correct

- Recall: how many actual classes were correctly identified

- F1 Score: combines precision and recall
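The four metrics above can be sketched in plain Python for a single positive class. This is a minimal illustration with made-up labels, not the implementation used by the `evaluate` library. The helper `binary_metrics` and the sample data are hypothetical.

```python
from typing import Dict, List


def binary_metrics(references: List[int], predictions: List[int],
                   positive: int = 1) -> Dict[str, float]:
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(references, predictions))
    tp = sum(1 for r, p in pairs if r == positive and p == positive)
    fp = sum(1 for r, p in pairs if r != positive and p == positive)
    fn = sum(1 for r, p in pairs if r == positive and p != positive)
    correct = sum(1 for r, p in pairs if r == p)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up labels: 1 = positive class, 0 = negative class.
references = [1, 1, 1, 0, 0, 0, 0, 0]
predictions = [1, 1, 0, 1, 0, 0, 0, 0]

scores = binary_metrics(references, predictions)
print(scores)
```

Here two of the three positive predictions are correct (precision 2/3) and two of the three actual positives are found (recall 2/3), while six of eight labels match overall (accuracy 0.75).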

import evaluate

from evaluate import evaluator

task_evaluator = evaluator("image-classification")

metrics_dict = {"precision": "precision", "recall": "recall", "f1": "f1"}

label_map = pipe.model.config.label2id


eval_results = task_evaluator.compute(model_or_pipeline=pipe, data=dataset, metric=evaluate.combine(metrics_dict), label_mapping=label_map)

print(eval_results)

{'precision': 0.999001923076923,

'recall': 0.999,

'f1': 0.9989999609405906, ...}
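The `label_mapping` argument is needed because an image-classification pipeline returns label strings, while the dataset's references are typically integer ids. The sketch below approximates that conversion step in plain Python. The label names, scores, and the helper `to_prediction_ids` are made up for illustration, and the evaluator's actual internals may differ.

```python
from typing import Dict, List

# Hypothetical mapping in the shape of pipe.model.config.label2id.
label2id: Dict[str, int] = {"real": 0, "ai_generated": 1}

# Made-up pipeline outputs: one list of {"label", "score"} candidates per image.
pipeline_outputs: List[List[Dict[str, object]]] = [
    [{"label": "ai_generated", "score": 0.97}, {"label": "real", "score": 0.03}],
    [{"label": "real", "score": 0.88}, {"label": "ai_generated", "score": 0.12}],
    [{"label": "ai_generated", "score": 0.61}, {"label": "real", "score": 0.39}],
]


def to_prediction_ids(outputs: List[List[Dict[str, object]]],
                      mapping: Dict[str, int]) -> List[int]:
    """Pick the top-scoring label for each image and convert it to its integer id."""
    ids = []
    for candidates in outputs:
        best = max(candidates, key=lambda c: c["score"])
        ids.append(mapping[best["label"]])
    return ids


prediction_ids = to_prediction_ids(pipeline_outputs, label2id)
print(prediction_ids)
```

Once predictions are integer ids, they can be compared directly against the dataset's reference labels to compute precision, recall, and F1.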

Pipeline and dataset used above

from datasets import load_dataset

pipe = pipeline(task="image-classification", model="ideepankarsharma2003/AI_ImageClassification_MidjourneyV6_SDXL")

dataset = load_dataset("ideepankarsharma2003/Midjourney_v6_Classification_small_shuffled")

Let's practice!
