Zero-shot image classification

Multi-Modal Models with Hugging Face

James Chapman

Curriculum Manager, DataCamp

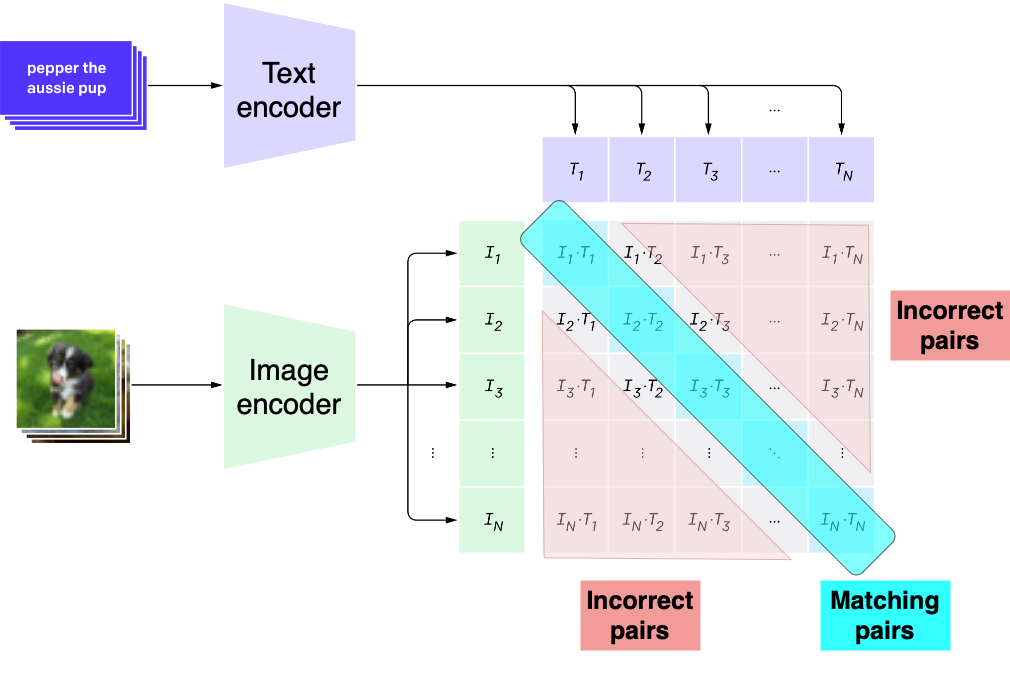

CLIP

- Contrastive Language-Image Pre-training

- Score similarity between images and text

- Trained on 400M image-text pairs

- Two encoders:

  - Vision encoder

  - Text encoder

- Well-matched image-text pairs produce similar embeddings (arrays)
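To make "similar arrays" concrete, here is a minimal sketch of how similarity between an image embedding and several text embeddings could be scored with cosine similarity. The 4-dimensional vectors are made-up stand-ins for encoder outputs (real CLIP embeddings are much larger), and `cosine_similarity` is an illustrative helper, not part of any library.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings standing in for the vision and text encoder outputs
image_embedding = [0.9, 0.1, 0.3, 0.0]
text_embeddings = {
    "a photo of a shirt": [0.8, 0.2, 0.4, 0.1],
    "a photo of a watch": [0.0, 0.9, 0.1, 0.7],
}

# Score the image against every text and keep the closest match
similarities = {
    text: cosine_similarity(image_embedding, embedding)
    for text, embedding in text_embeddings.items()
}
best_match = max(similarities, key=similarities.get)
print(best_match)
```

The text whose embedding points in nearly the same direction as the image embedding gets the highest score.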

1 https://openai.com/index/clip/

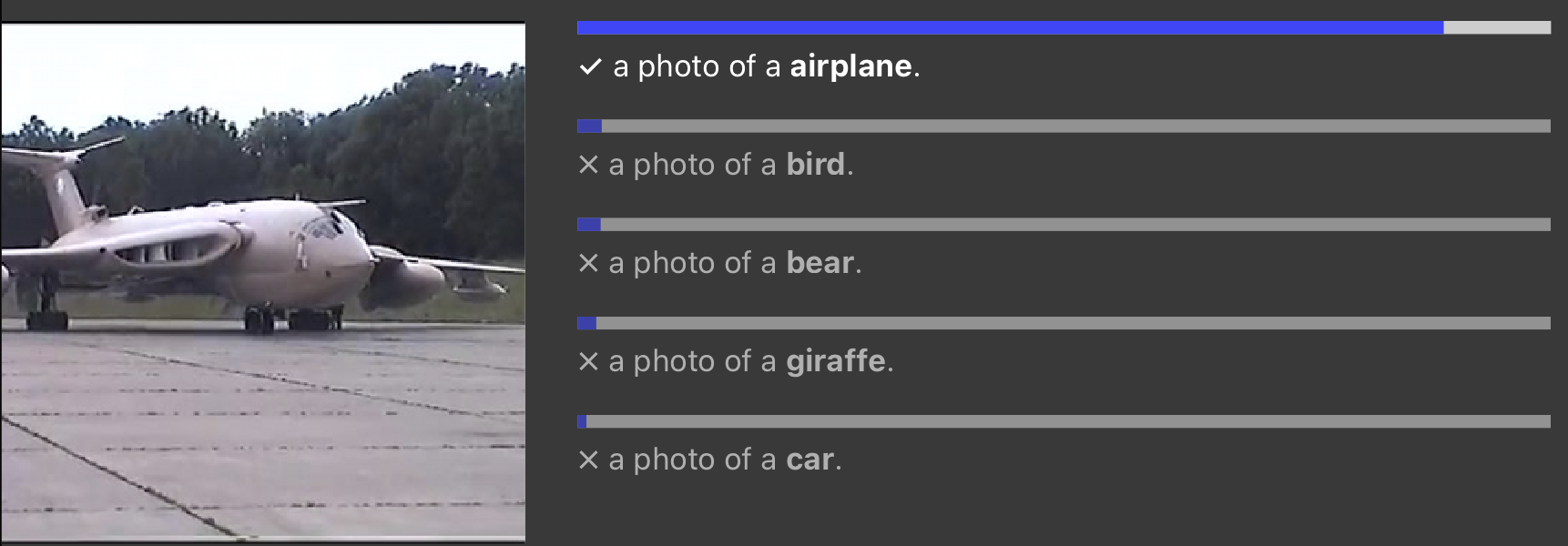

Zero-shot learning

- Perform tasks that the model wasn't trained for


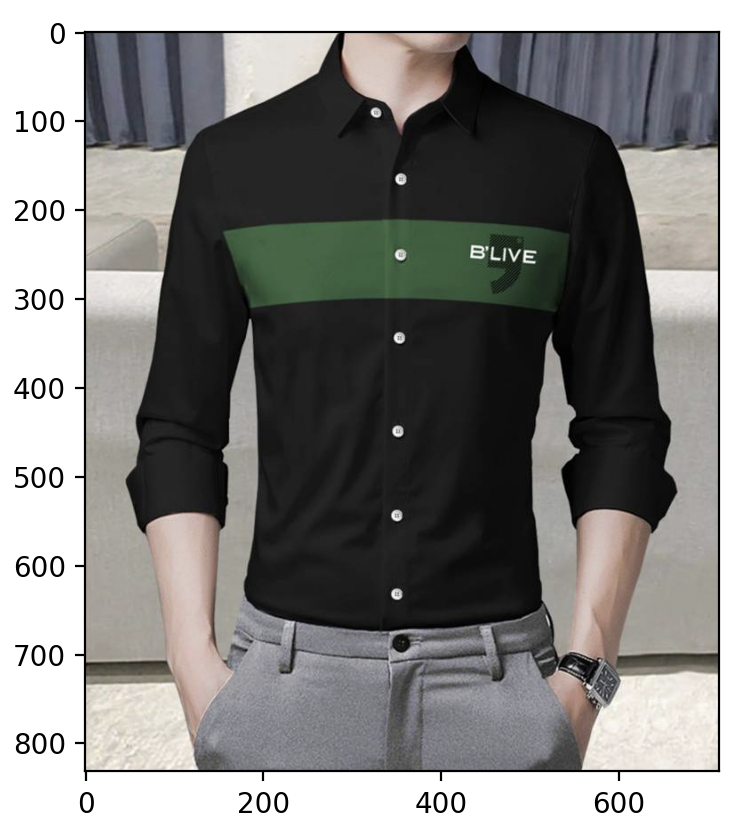

Use case: product categorization

```python
from datasets import load_dataset
import matplotlib.pyplot as plt

dset = "rajuptvs/ecommerce_products_clip"
dataset = load_dataset(dset)

print(dataset["train"][0]["Description"])

plt.imshow(dataset["train"][0]["image"])
plt.show()
```

```
Blive High quality premium Full sleeves printed Shirt direct from the manufacturers.Gives you a clean and classy look while also making you feel comfortable.Trusted brand online and no compromise on quality.
```

Zero-shot learning with CLIP

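```python
from transformers import CLIPModel, CLIPProcessor

model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

categories = ["shirt", "trousers", "shoes", "dress", "hat", "bag", "watch"]

# Tokenize the candidate labels and preprocess the image in one call
inputs = processor(text=categories, images=dataset["train"][0]["image"],
                   return_tensors="pt", padding=True)
outputs = model(**inputs)

# Softmax across the labels turns similarity logits into probabilities
probs = outputs.logits_per_image.softmax(dim=1)
print(categories[probs.argmax().item()])
```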

shirt
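The final step of the code above, softmax followed by argmax, can be sketched in plain Python. The logits below are made-up illustrative values, not actual model output, and `softmax` is a hand-written helper rather than the PyTorch method.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - largest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

categories = ["shirt", "trousers", "shoes", "dress", "hat", "bag", "watch"]

# Hypothetical image-to-text similarity logits, one per category
logits = [27.1, 21.4, 19.8, 23.0, 20.2, 20.9, 18.5]

probs = softmax(logits)

# argmax: index of the most probable category
predicted_index = max(range(len(probs)), key=probs.__getitem__)
predicted = categories[predicted_index]
print(predicted)
```

Because softmax preserves the ordering of the logits, the predicted label is simply the category with the highest image-text similarity; the probabilities only make the scores easier to compare.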

The CLIP score

- Similarity of encoded image and encoded description

- Ranges from 100 (perfect agreement) to 0 (no agreement)

```python
from torchmetrics.functional.multimodal import clip_score
from torchvision.transforms import ToTensor

image = dataset["train"][0]["image"]
description = dataset["train"][0]["Description"]

# ToTensor scales pixels to [0, 1]; multiply by 255 to restore the 0-255 range
image = ToTensor()(image) * 255

score = clip_score(image, description, "openai/clip-vit-base-patch32")
print(f"CLIP score: {score}")
```

CLIP score: 28.495952606201172
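The 0 to 100 range follows from how the score is defined: the cosine similarity between the image embedding and the text embedding, multiplied by 100 and clipped at 0. The sketch below reproduces that arithmetic on toy vectors; `clip_score_from_embeddings` is a hypothetical helper for illustration, and the vectors are not real CLIP embeddings.

```python
import math

def clip_score_from_embeddings(image_embedding, text_embedding):
    """Illustrative CLIP score: max(100 * cosine similarity, 0)."""
    dot = sum(x * y for x, y in zip(image_embedding, text_embedding))
    norm_image = math.sqrt(sum(x * x for x in image_embedding))
    norm_text = math.sqrt(sum(y * y for y in text_embedding))
    cosine = dot / (norm_image * norm_text)
    return max(100.0 * cosine, 0.0)

# Toy vectors chosen to hit the extremes of the range
same_direction = clip_score_from_embeddings([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
orthogonal = clip_score_from_embeddings([1.0, 0.0], [0.0, 1.0])
opposite = clip_score_from_embeddings([1.0, 2.0], [-1.0, -2.0])

print(same_direction, orthogonal, opposite)
```

Under this definition, the score of about 28.5 shown above corresponds to a cosine similarity of about 0.285 between the image and its description.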

Let's practice!
