Preprocessing different modalities

Multi-Modal Models with Hugging Face

James Chapman

Curriculum Manager, DataCamp

Preprocessing text

- Tokenizer: maps text → model input

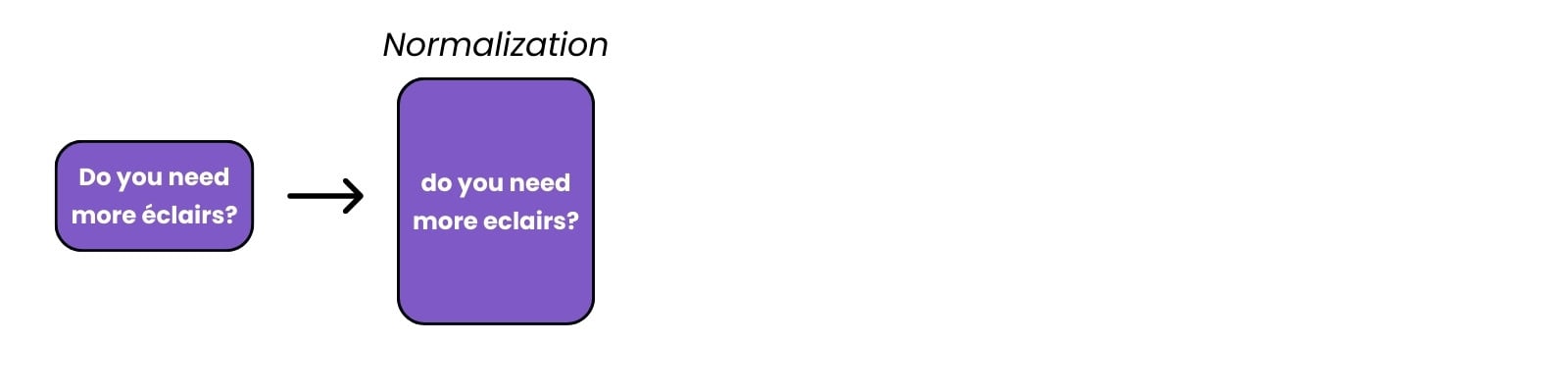

Preprocessing text

- Tokenizer: maps text → model input

- Normalization: lowercasing, removing special characters, removing extra whitespace

Preprocessing text

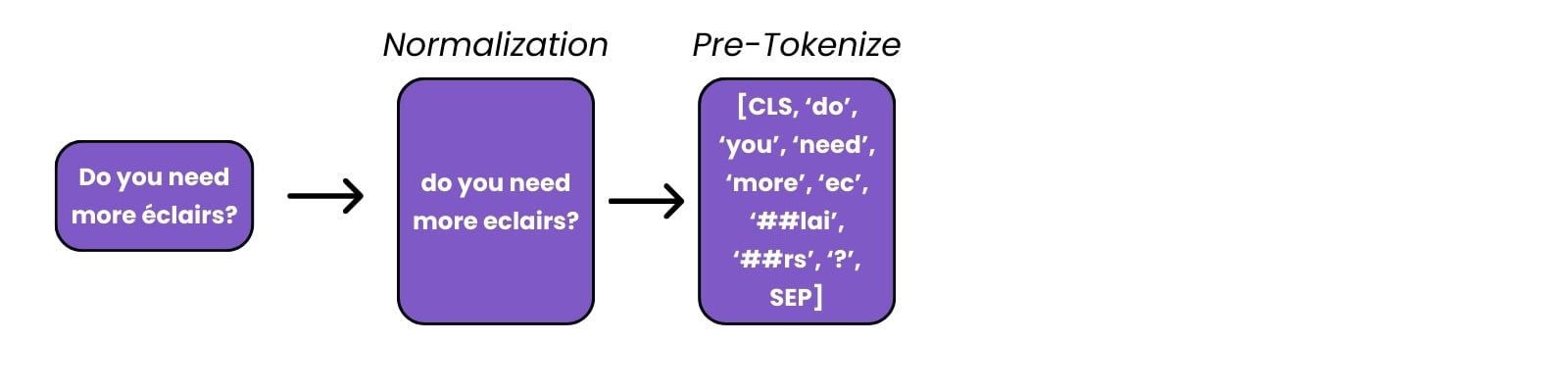

- Tokenizer: maps text → model input

- Normalization: lowercasing, removing special characters, removing extra whitespace

- (Pre-)tokenization: splitting text into words/subwords

Preprocessing text

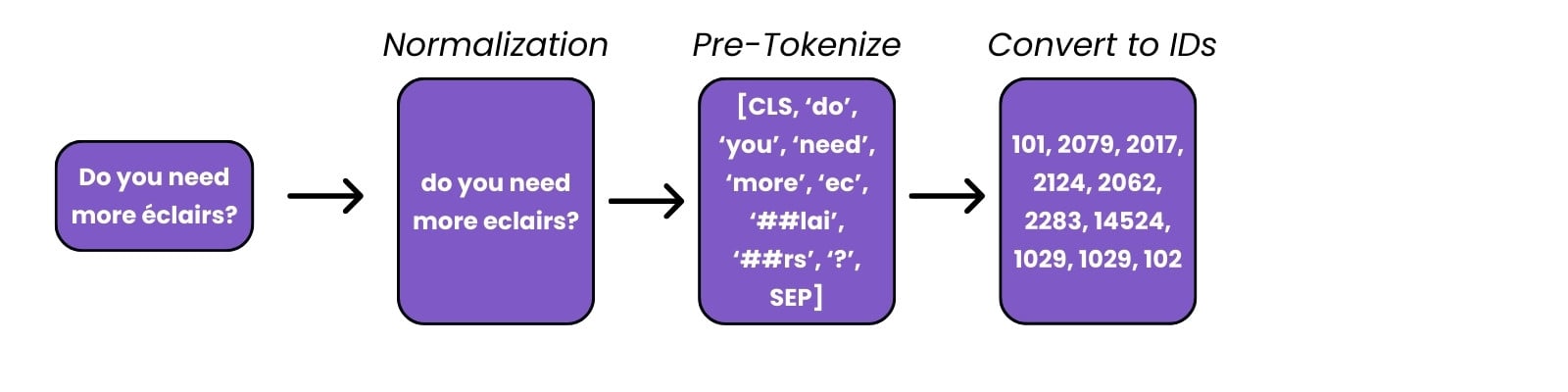

- Tokenizer: maps text → model input

- Normalization: lowercasing, removing special characters, whitespace

- (Pre-)tokenization: splitting text into words/subwords

- ID conversion: Mapping of tokens to integers using a vocabulary

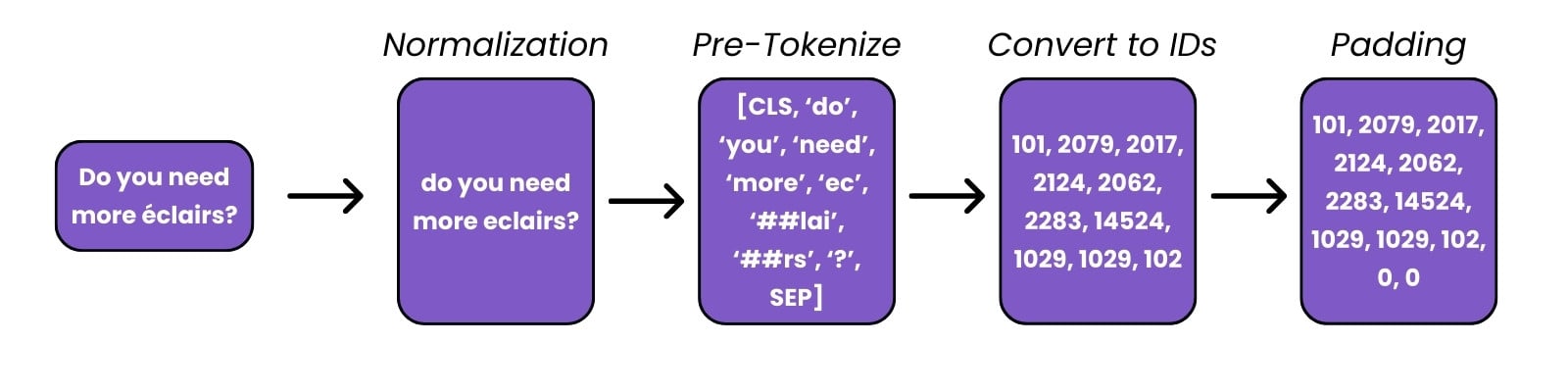

Preprocessing text

- Tokenizer: maps text → model input

- Normalization: lowercasing, removing special characters, whitespace

- (Pre-)tokenization: splitting text into words/subwords

- ID conversion: Mapping of tokens to integers using a vocabulary

- Padding: Adding additional tokens for consistent length
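The steps listed above can be sketched end to end in plain Python. This is a toy illustration, not the Hugging Face implementation: the vocabulary `VOCAB`, the helper names, and the padded length of 10 are all hypothetical, and real tokenizers usually split words further into subwords.

```python
import unicodedata

# Hypothetical toy vocabulary; real vocabularies hold tens of thousands of entries.
VOCAB = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3,
         "do": 4, "you": 5, "need": 6, "more": 7, "eclairs": 8, "?": 9}


def normalize(text):
    """Lowercase, strip accents, and collapse extra whitespace."""
    decomposed = unicodedata.normalize("NFD", text.lower())
    no_accents = "".join(ch for ch in decomposed
                         if unicodedata.category(ch) != "Mn")
    return " ".join(no_accents.split())


def pre_tokenize(text):
    """Split on whitespace and make each punctuation mark its own token."""
    tokens, current = [], ""
    for ch in text:
        if ch.isalnum():
            current += ch
            continue
        if current:
            tokens.append(current)
            current = ""
        if not ch.isspace():
            tokens.append(ch)
    if current:
        tokens.append(current)
    return tokens


def to_ids(tokens):
    """Wrap with start/end markers and look each token up in the vocabulary."""
    wrapped = ["[CLS]"] + tokens + ["[SEP]"]
    return [VOCAB.get(tok, VOCAB["[UNK]"]) for tok in wrapped]


def pad(ids, length):
    """Right-pad with the [PAD] ID up to a fixed length."""
    return ids + [VOCAB["[PAD]"]] * (length - len(ids))


text = "Do you   need more éclairs?"
normalized = normalize(text)
tokens = pre_tokenize(normalized)
ids = pad(to_ids(tokens), length=10)
print(normalized, tokens, ids, sep="\n")
```

Words missing from the vocabulary fall back to `[UNK]` here; subword tokenizers avoid most of these fallbacks by splitting unknown words into known pieces.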

Preprocessing text

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained('distilbert/distilbert-base-uncased')

text = "Do you need more éclairs?"

print(tokenizer.backend_tokenizer.normalizer.normalize_str(text))

do you need more eclairs?

tokenizer(text, return_tensors='pt', padding=True)

{'input_ids': tensor([[ 101, ..., 102]]), ...}
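Padding only changes the result when several texts of different lengths are encoded together. The sketch below mimics that behaviour in plain Python, padding each sequence to the longest one in the batch and building a matching attention mask. The function name `pad_batch` is hypothetical, the inner token IDs are arbitrary placeholders, and a pad ID of 0 is assumed.

```python
def pad_batch(batch_ids, pad_id=0):
    """Right-pad every sequence to the longest one and mark real tokens with 1."""
    max_len = max(len(ids) for ids in batch_ids)
    input_ids = [ids + [pad_id] * (max_len - len(ids)) for ids in batch_ids]
    attention_mask = [[1] * len(ids) + [0] * (max_len - len(ids))
                      for ids in batch_ids]
    return {"input_ids": input_ids, "attention_mask": attention_mask}


# Two already-tokenized texts; 101 and 102 stand for the start and end markers.
batch = pad_batch([[101, 7, 102], [101, 7, 8, 9, 102]])
print(batch)
```

The attention mask lets the model ignore padded positions, so padding does not change what each text means to the model.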

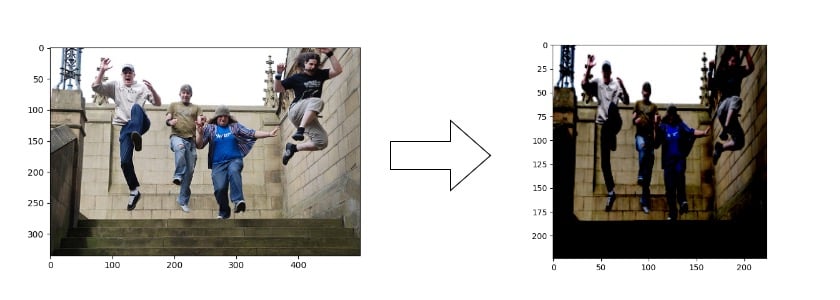

Preprocessing images

- Normalization: rescaling pixel intensities to the range and statistics the model expects

- Resize: matching the input size the model expects

- General rule → reuse the preprocessing of the original model
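The two image steps above can be sketched with NumPy. The target size of 224×224 and the mean and standard deviation of 0.5 are assumptions chosen for illustration; in practice these values come from the original model's processor configuration, and real processors typically use smoother interpolation than the nearest-neighbour resize shown here.

```python
import numpy as np


def resize_nearest(image, height, width):
    """Nearest-neighbour resize of an (H, W, C) array."""
    rows = np.arange(height) * image.shape[0] // height
    cols = np.arange(width) * image.shape[1] // width
    return image[rows][:, cols]


def normalize_pixels(image, mean, std):
    """Scale uint8 pixels to [0, 1], then standardize each channel."""
    scaled = image.astype(np.float32) / 255.0
    mean = np.asarray(mean, dtype=np.float32)
    std = np.asarray(std, dtype=np.float32)
    return (scaled - mean) / std


# Random stand-in for a 480x640 RGB photo.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(480, 640, 3), dtype=np.uint8)

resized = resize_nearest(image, 224, 224)
pixel_values = normalize_pixels(resized, mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5))
pixel_values = pixel_values.transpose(2, 0, 1)  # channels-first layout
print(pixel_values.shape, pixel_values.dtype)
```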

1 https://huggingface.co/datasets/nlphuji/flickr30k

Preprocessing images

Multimodal tasks require consistent preprocessing:

from transformers import BlipProcessor, BlipForConditionalGeneration

checkpoint = "Salesforce/blip-image-captioning-base"

model = BlipForConditionalGeneration.from_pretrained(checkpoint)

processor = BlipProcessor.from_pretrained(checkpoint)

Encode image → transform to text encoding → decode text

from datasets import load_dataset

image = load_dataset("nlphuji/flickr30k")['test'][11]["image"]

inputs = processor(images=image, return_tensors="pt")

output = model.generate(**inputs)

print(processor.decode(output[0], skip_special_tokens=True))

a group of people jumping

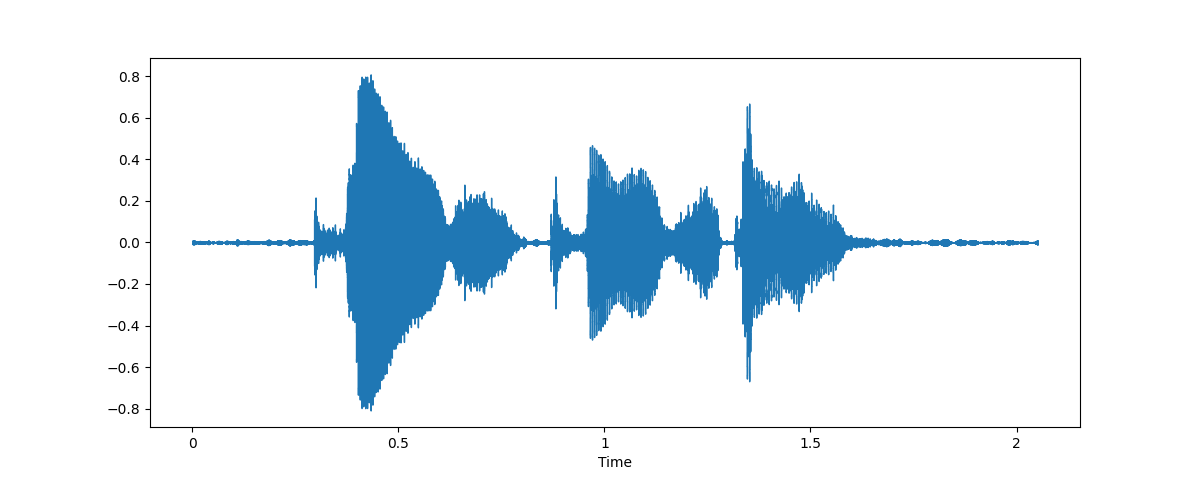

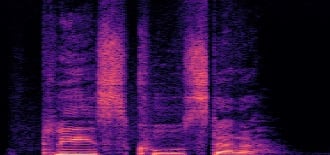

Preprocessing audio

- Audio preprocessing:

  - Sequential array → filter/padding

  - Sampling rate → resampling

- Feature extraction as model input (spectrogram)
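The audio steps above can be sketched with NumPy on a synthetic tone. Everything here is illustrative: the 48 kHz source rate, the 16 kHz target rate, the two-second fixed length, and the frame and hop sizes are assumptions, and linear interpolation is a simple stand-in for the filtered resampling that audio libraries perform.

```python
import numpy as np


def resample_linear(waveform, orig_sr, target_sr):
    """Resample by linear interpolation (no anti-aliasing filter)."""
    n_target = int(round(len(waveform) / orig_sr * target_sr))
    old_times = np.arange(len(waveform)) / orig_sr
    new_times = np.arange(n_target) / target_sr
    return np.interp(new_times, old_times, waveform).astype(np.float32)


def pad_or_truncate(waveform, length):
    """Force a fixed number of samples by zero-padding or cutting the end."""
    if len(waveform) >= length:
        return waveform[:length]
    return np.pad(waveform, (0, length - len(waveform)))


def magnitude_spectrogram(waveform, frame_length=400, hop_length=160):
    """Windowed short-time Fourier transform magnitudes, shape (frames, bins)."""
    window = np.hanning(frame_length)
    n_frames = 1 + (len(waveform) - frame_length) // hop_length
    frames = np.stack([
        waveform[i * hop_length:i * hop_length + frame_length] * window
        for i in range(n_frames)
    ])
    return np.abs(np.fft.rfft(frames, axis=1))


orig_sr, target_sr = 48_000, 16_000
t = np.arange(orig_sr) / orig_sr                      # one second of audio
waveform = np.sin(2 * np.pi * 440 * t).astype(np.float32)

resampled = resample_linear(waveform, orig_sr, target_sr)
fixed = pad_or_truncate(resampled, 2 * target_sr)     # two seconds
spec = magnitude_spectrogram(fixed)
print(len(resampled), len(fixed), spec.shape)
```

Model-specific feature extractors typically go one step further and convert the spectrogram to a log-mel scale, which this sketch omits.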

Preprocessing audio

from datasets import load_dataset, Audio

dataset = load_dataset("CSTR-Edinburgh/vctk")["train"]

dataset = dataset.cast_column("audio", Audio(sampling_rate=16_000))

- Model-specific processors that handle the full preprocessing are usually available:

from transformers import AutoProcessor

processor = AutoProcessor.from_pretrained("openai/whisper-small")

audio_pp = processor(dataset[0]["audio"]["array"], sampling_rate=16_000, return_tensors="pt")

- Sampling rate must match model input requirements

1 https://huggingface.co/datasets/CSTR-Edinburgh/vctk

Let's practice!
