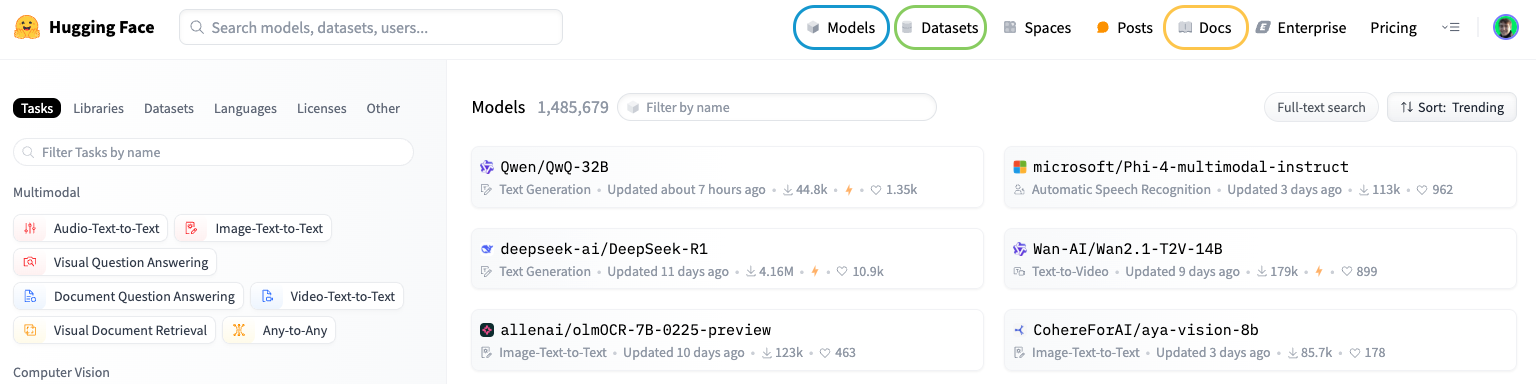

Hugging Face model navigation

Multi-Modal Models with Hugging Face

James Chapman

Curriculum Manager, DataCamp

Meet your instructor...

Sean Benson

- Former particle physicist at the Large Hadron Collider (LHC) in CERN, Switzerland

- Now applied AI researcher at the Amsterdam University Medical Center

Modalities covered

1 Image Credits: OpenAI generated

Hugging Face

- 1M+ models

- 250k+ datasets

The Hub API

- Access models and datasets programmatically

pip install huggingface_hub[cli]

Log in to access the models in your account:

$ huggingface-cli login

- All libraries, models, and datasets will be pre-installed

Searching for models

- Hugging Face API: programmatic access to models and datasets

from huggingface_hub import HfApi

api = HfApi()

models = api.list_models()

- task: "image-classification", "text-to-image", etc.

- sort: e.g., "likes" or "downloads"

- limit: Maximum entries

- tags: Associated extra info of the model

Searching for models

models = api.list_models(task="text-to-image", author="CompVis", tags="diffusers:StableDiffusionPipeline", sort="downloads")

top_model = list(models)[0]

print(top_model)

ModelInfo(id='CompVis/stable-diffusion-v1-4', private=False, downloads=1097285,

likes=6718, library_name='diffusers', ...
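Indexing `list(models)[0]` reads every result into memory and raises an `IndexError` when the search matches nothing. The sketch below shows one alternative, assuming `list_models` returns an ordinary iterable; `first_model` is a hypothetical helper, and the objects in `fake_results` are stand-ins for `ModelInfo` rather than real API output.

```python
from types import SimpleNamespace


def first_model(models):
    """Return the first item of an iterable of models, or None if it is empty."""
    return next(iter(models), None)


# Stand-in objects with only an `id` attribute, in place of real ModelInfo results.
fake_results = iter([
    SimpleNamespace(id="CompVis/stable-diffusion-v1-4"),
    SimpleNamespace(id="example/other-model"),  # made-up id
])

top = first_model(fake_results)
print(top.id)  # CompVis/stable-diffusion-v1-4

empty = first_model(iter([]))
print(empty)  # None
```

Returning `None` for an empty search lets the caller decide how to handle "no match" instead of catching an exception.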

Using models from the API

top_model_id = top_model.id

print(top_model_id)

CompVis/stable-diffusion-v1-4

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained(top_model_id)
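The search earlier filtered on the tag `diffusers:StableDiffusionPipeline`, which suggests the pipeline class name can be read from a model's tags. The sketch below works under that assumption: `pipeline_class_name` is a hypothetical helper, and `example_tags` is an illustrative list, not tags copied from the Hub.

```python
def pipeline_class_name(tags, prefix="diffusers:"):
    """Return the text after `prefix` in the first matching tag, or None."""
    for tag in tags:
        if tag.startswith(prefix):
            return tag[len(prefix):]
    return None


# Illustrative tags only; a real ModelInfo.tags list may differ.
example_tags = ["diffusers", "text-to-image", "diffusers:StableDiffusionPipeline"]

name = pipeline_class_name(example_tags)
print(name)  # StableDiffusionPipeline
```

With `diffusers` installed, `getattr(diffusers, name)` should then give the class on which to call `from_pretrained(top_model_id)`.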

Available tasks

import json

from urllib.request import urlopen

url = "https://huggingface.co/api/tasks"

with urlopen(url) as response:
    tasks = json.load(response)

print(tasks.keys())

dict_keys(['any-to-any', 'audio-classification', 'audio-to-audio', ...])

1 https://huggingface.co/tasks

2 https://huggingface.co/api/tasks
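Once the task names are loaded, they can be narrowed to a single modality. This is a minimal sketch, assuming task names are hyphen-separated as in the output above; `tasks_for_modality` is a hypothetical helper, and `sample_tasks` holds only the three keys shown on the slide, with empty placeholder values.

```python
def tasks_for_modality(tasks, keyword):
    """Return the sorted task names that have `keyword` as a hyphen-separated part."""
    return sorted(name for name in tasks if keyword in name.split("-"))


# Only the keys visible on the slide; the values are placeholders.
sample_tasks = {
    "any-to-any": {},
    "audio-classification": {},
    "audio-to-audio": {},
}

audio_tasks = tasks_for_modality(sample_tasks, "audio")
print(audio_tasks)  # ['audio-classification', 'audio-to-audio']
```

The same function can be applied to the `tasks` dictionary loaded from the API, and any returned name can be passed as the `task` argument of `list_models`.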

Let's practice!
