Visual question answering (VQA)

Multi-Modal Models with Hugging Face

James Chapman

Curriculum Manager, DataCamp

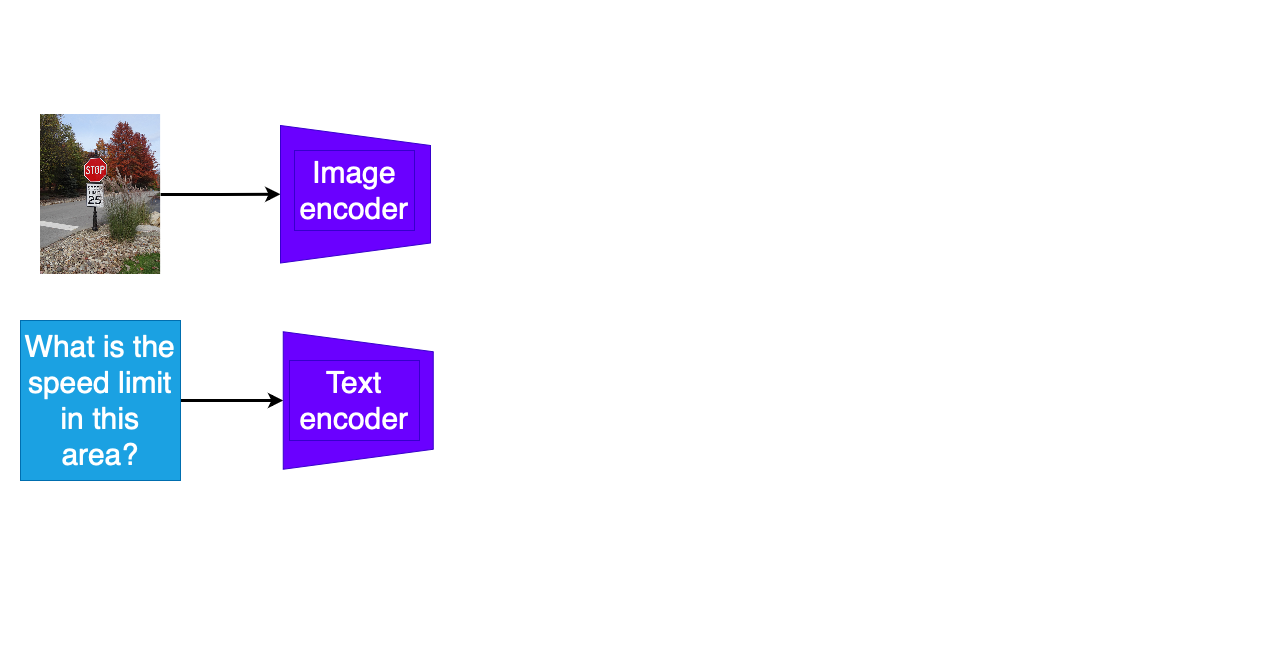

Multimodal QA tasks

- Separate encoding of question text and other modality

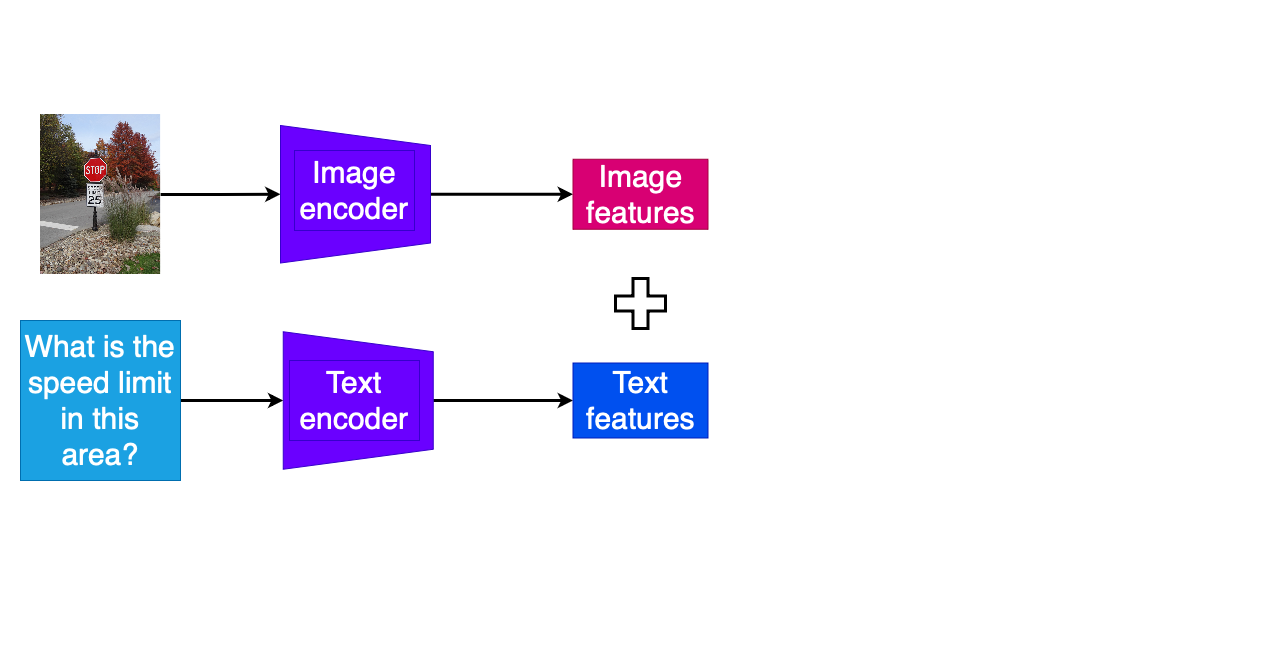

Multimodal QA tasks

- Separate encoding of question text and other modality

- Combination of encoded features

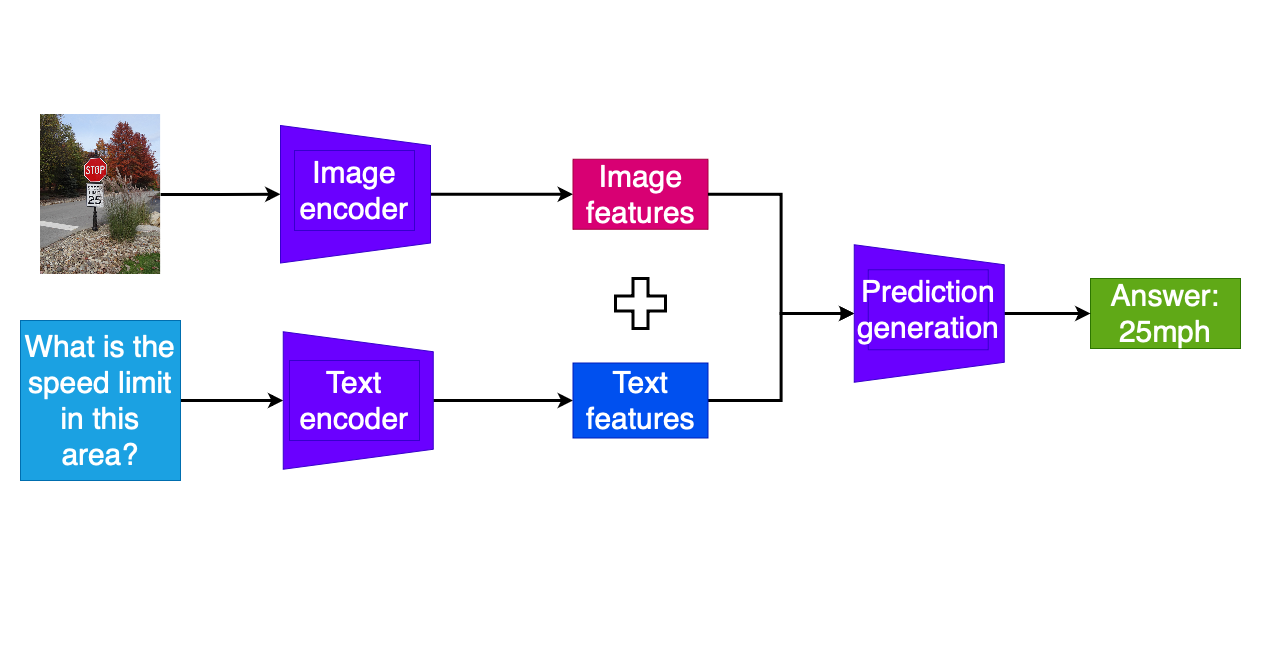

Multimodal QA tasks

- Separate encoding of the question text and the other modality (e.g., an image)

- Combination of the encoded features

- Additional model layers that predict the answer tokens

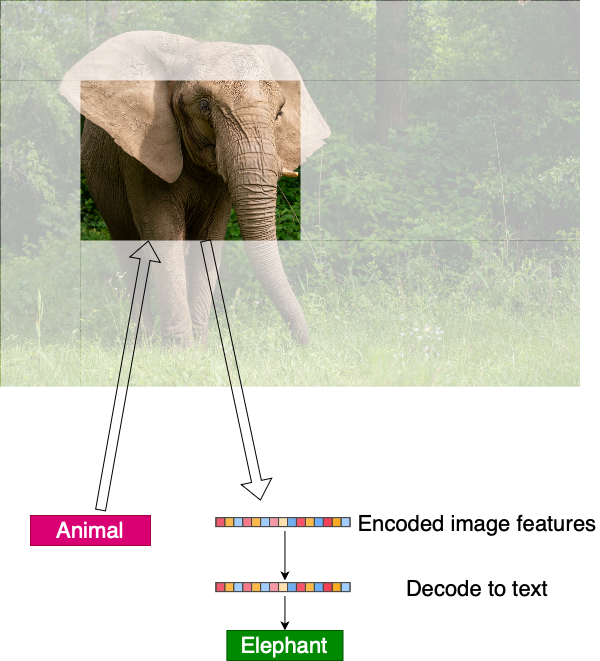
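The three steps above can be sketched as a toy "late fusion" model in plain Python. Everything here is a hypothetical stand-in chosen for illustration: the bag-of-words question encoder, the two-number image "encoder", concatenation as the fusion step, and the untrained random answer head. Real VQA models use learned neural encoders, and many fuse the modalities with attention layers rather than plain concatenation.

```python
import random

# Toy late-fusion VQA model: all components are hypothetical stand-ins,
# not real pretrained encoders.
VOCAB = ["what", "animal", "is", "in", "this", "photo", "color"]
ANSWERS = ["elephant", "dog", "grey", "brown"]  # fixed answer vocabulary


def encode_question(question):
    """Step 1a: encode the question text on its own (bag-of-words counts)."""
    tokens = question.lower().replace("?", "").split()
    return [float(tokens.count(word)) for word in VOCAB]


def encode_image(pixels):
    """Step 1b: encode the other modality on its own (mean and max intensity)."""
    return [sum(pixels) / len(pixels), float(max(pixels))]


def fuse(text_features, image_features):
    """Step 2: combine the encoded features (here, simple concatenation)."""
    return text_features + image_features


class AnswerHead:
    """Step 3: an extra layer mapping fused features to one score per answer."""

    def __init__(self, in_dim, n_answers, seed=0):
        rng = random.Random(seed)
        # Random, untrained weights: predictions are meaningless until trained.
        self.weights = [
            [rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(n_answers)
        ]

    def __call__(self, features):
        return [sum(w * x for w, x in zip(row, features)) for row in self.weights]


def predict(question, pixels, head):
    fused = fuse(encode_question(question), encode_image(pixels))
    logits = head(fused)
    best = max(range(len(logits)), key=logits.__getitem__)
    return ANSWERS[best], logits


head = AnswerHead(in_dim=len(VOCAB) + 2, n_answers=len(ANSWERS))
answer, logits = predict("What animal is in this photo?", [0.2, 0.5, 0.9, 0.4], head)
print(answer, len(logits))
```

Because the answer head scores a fixed list of answers, this toy model can only ever return one of the entries in ANSWERS.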

VQA

    import requests
    from PIL import Image

    # Download an example photo and open it as a PIL image
    url = "https://www.worldanimalprotection.org/cdn-cgi/image/width=1920,format=auto/globalassets/images/elephants/1033551-elephant.jpg"
    image = Image.open(requests.get(url, stream=True).raw)

    # Question to ask about the image
    text = "What animal is in this photo?"


- The model has already learned image and text features for many kinds of objects

- Pretrained models can be reused as-is, with no extra fine-tuning


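    from transformers import ViltProcessor, ViltForQuestionAnswering

    # Load the preprocessing (tokenizer + image transforms) and the ViLT model fine-tuned for VQA
    processor = ViltProcessor.from_pretrained("dandelin/vilt-b32-finetuned-vqa")
    model = ViltForQuestionAnswering.from_pretrained("dandelin/vilt-b32-finetuned-vqa")

    # Encode the image-question pair as PyTorch tensors and run the model
    encoding = processor(image, text, return_tensors="pt")
    outputs = model(**encoding)

    # One logit per candidate answer: take the highest-scoring one
    idx = outputs.logits.argmax(-1).item()
    print("Predicted answer:", model.config.id2label[idx])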

Predicted answer: elephant
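The argmax above returns a single answer from the model's fixed answer vocabulary. To see a few runner-up answers with scores, one option is to rank all logits. The sketch below assumes the answer head is a multi-label classifier (common for VQAv2-style models), so it converts logits to scores with an element-wise sigmoid; the toy logits and labels are hypothetical stand-ins for outputs.logits and model.config.id2label.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def top_k_answers(logits, id2label, k=3):
    """Return the k highest-scoring (answer, score) pairs.

    Sigmoid is monotonic, so the ranking matches a plain argmax over logits;
    the scores are per-answer and need not sum to 1.
    """
    scores = [sigmoid(z) for z in logits]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [(id2label[i], scores[i]) for i in ranked[:k]]


# Hypothetical values standing in for outputs.logits[0] and model.config.id2label
toy_id2label = {0: "elephant", 1: "rhino", 2: "horse", 3: "dog"}
toy_logits = [4.0, 1.5, -0.5, -2.0]
for answer, score in top_k_answers(toy_logits, toy_id2label, k=2):
    print(f"{answer}: {score:.3f}")
```

With the real model, the inputs would be outputs.logits[0].tolist() and model.config.id2label.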

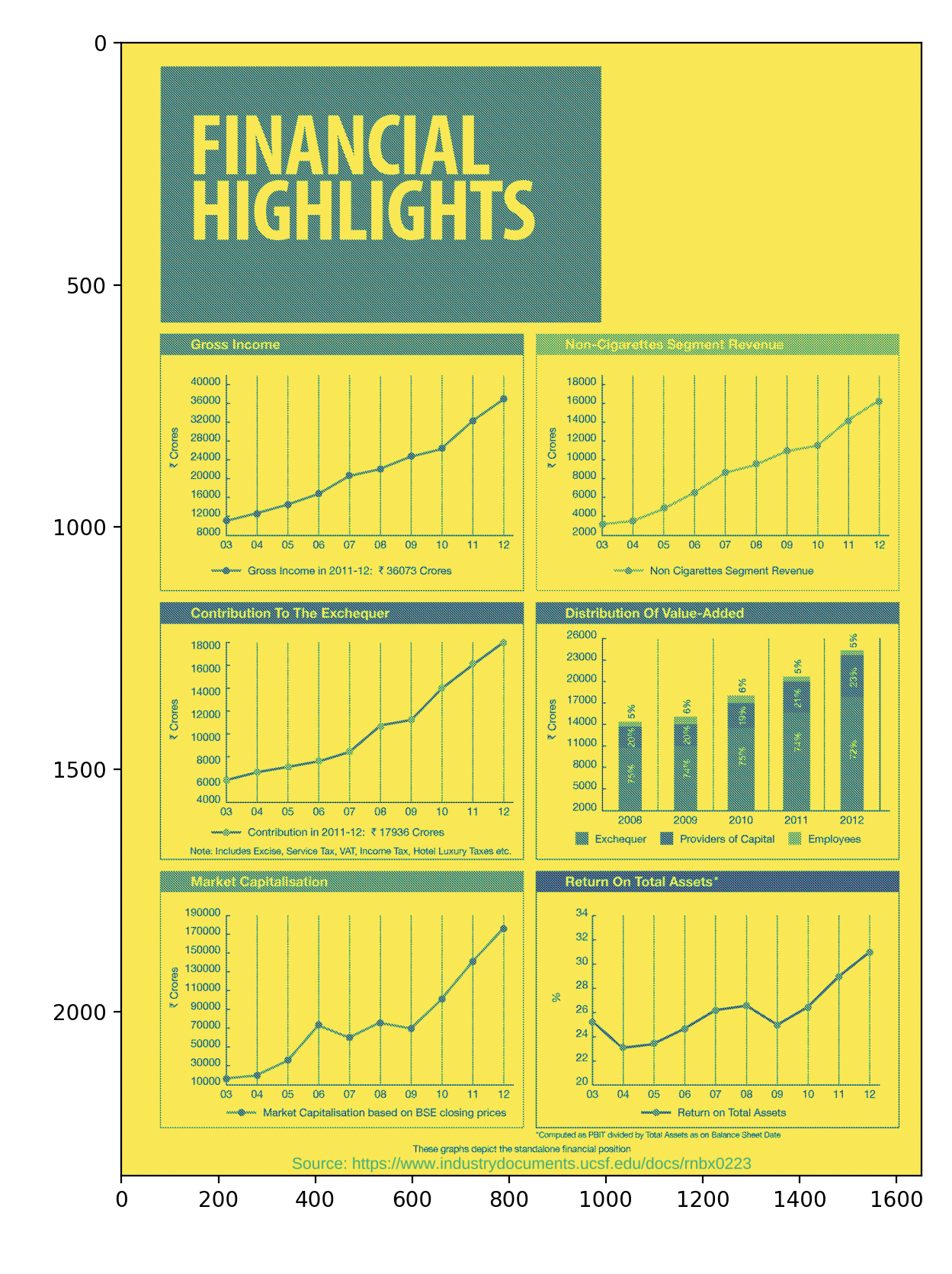

Document VQA

- Extends VQA to document images: the model must find and read text (via OCR), tables, and graphs

    from datasets import load_dataset
    import matplotlib.pyplot as plt

    # Load the DocVQA dataset from the Hugging Face Hub
    dataset = load_dataset("lmms-lab/DocVQA")

    # Display the third document image in the test split
    plt.imshow(dataset["test"][2]["image"])
    plt.show()


- Extra dependencies are needed to run OCR:
  - pytesseract, installed via pip
  - Tesseract OCR, installed via a package installer (e.g., apt-get, an .exe installer, or Homebrew/MacPorts)
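Because the two dependencies are installed in different ways, it can help to confirm both are present before running the document QA pipeline. This is a minimal standard-library sketch, assuming the Tesseract binary is named tesseract and is on the PATH (some installers, particularly on Windows, may not add it there).

```python
import importlib.util
import shutil


def ocr_dependencies_status():
    """Report whether the two OCR dependencies appear to be available."""
    return {
        # Python wrapper, installed with pip
        "pytesseract": importlib.util.find_spec("pytesseract") is not None,
        # Tesseract engine binary, installed with a system package installer
        "tesseract": shutil.which("tesseract") is not None,
    }


status = ocr_dependencies_status()
for name, available in status.items():
    print(f"{name}: {'found' if available else 'missing'}")
```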


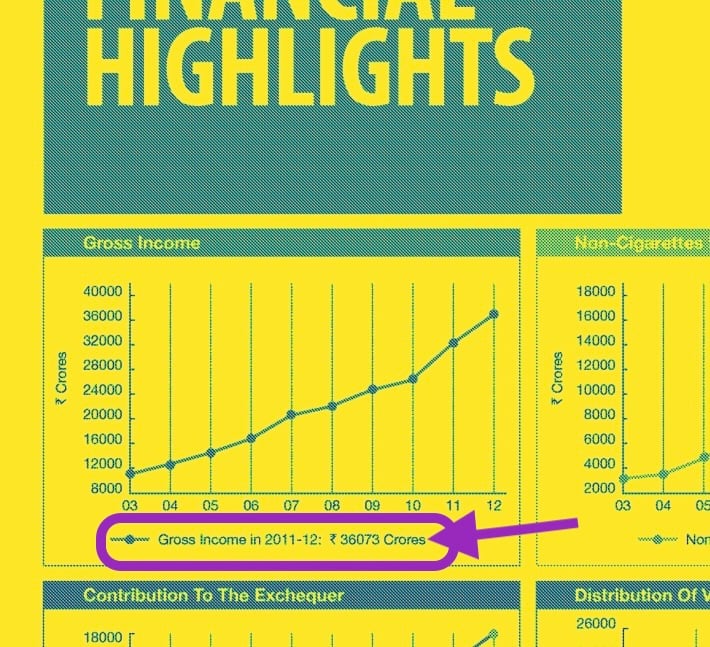

- LayoutLM: the checkpoint used here (impira/layoutlm-document-qa) is fine-tuned on document images and question/answer pairs from the DocVQA dataset

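    from transformers import pipeline

    # Document question answering with a LayoutLM checkpoint; the pipeline runs OCR
    # on the image (via pytesseract) to get the words and their positions
    pipe = pipeline("document-question-answering", model="impira/layoutlm-document-qa")
    result = pipe(
        dataset["test"][2]["image"],
        "What was the gross income in 2011-2012?",
    )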
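LayoutLM question-answering models like this one answer extractively: instead of generating new text, they score each position in the OCR'd text as a possible answer start and end, and the answer is the best-scoring span. The sketch below shows only that span-selection step on whole words; the words, the scores, and the max_len limit are hypothetical, and the real pipeline works on subword tokens and handles extra details omitted here.

```python
def best_span(words, start_scores, end_scores, max_len=4):
    """Pick the word span maximizing start_score + end_score.

    Only spans with start <= end and at most max_len words are considered.
    Returns (answer_text, start_index, end_index).
    """
    if not words:
        raise ValueError("no OCR words to choose from")
    best = None
    for s in range(len(words)):
        for e in range(s, min(s + max_len, len(words))):
            score = start_scores[s] + end_scores[e]
            if best is None or score > best[0]:
                best = (score, s, e)
    _, s, e = best
    return " ".join(words[s : e + 1]), s, e


# Hypothetical OCR words and per-word scores, for illustration only
words = ["Gross", "income", "was", "Rs", "500", "Crores"]
start_scores = [0.1, 0.0, 0.3, 0.2, 2.5, 0.1]
end_scores = [0.0, 0.1, 0.2, 0.1, 0.4, 2.0]
print(best_span(words, start_scores, end_scores))
```

Since the answer is always copied from the OCR output, OCR errors (such as missing separators in numbers) can appear directly in the returned answer.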


    print(result)

    [{'score': 0.05149758607149124, 'answer': '3 36073 Crores', ...}]
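The score in this result is only about 0.05, so before using an answer it can be worth checking its score. A minimal sketch, assuming results shaped like the list printed above (dicts with 'score' and 'answer' keys); the 0.1 cut-off and the example results are hypothetical and would need tuning on real data.

```python
def best_answer(results, min_score=0.1):
    """Return the top answer string, or None if its score is below min_score.

    results: list of dicts with 'score' and 'answer' keys.
    min_score: hypothetical confidence cut-off.
    """
    if not results:
        return None
    top = max(results, key=lambda r: r["score"])
    return top["answer"] if top["score"] >= min_score else None


# Hypothetical pipeline outputs, for illustration only
confident = [{"score": 0.82, "answer": "42"}, {"score": 0.07, "answer": "41"}]
uncertain = [{"score": 0.05, "answer": "n/a"}]
print(best_answer(confident))  # prints 42
print(best_answer(uncertain))  # prints None
```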
