Image editing with diffusion models

Multi-Modal Models with Hugging Face

James Chapman

Curriculum Manager, DataCamp

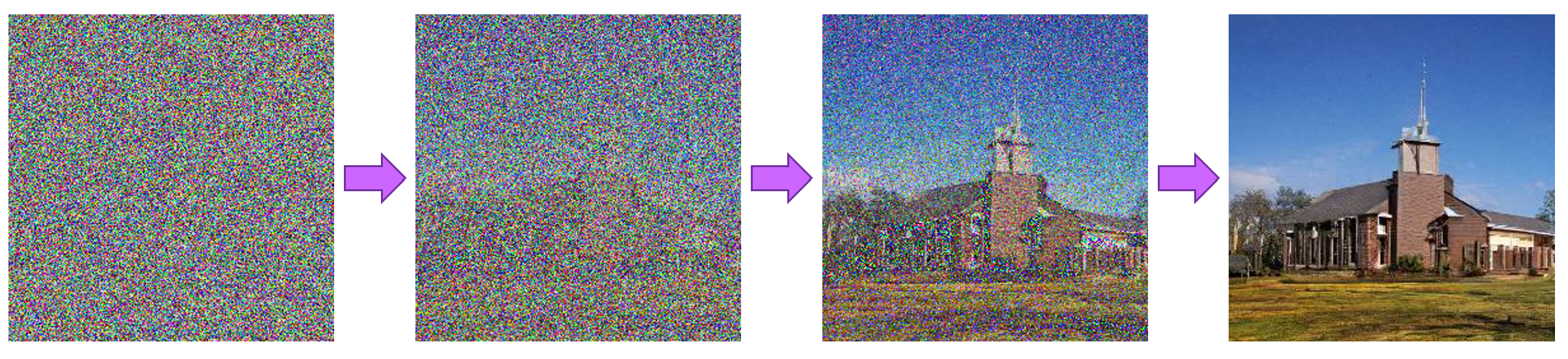

Diffusers

- Diffusion models are trained to map noise to an image

- CLIP text encoder + diffusion → 2 types of conditional generation:

- Generation: text → image

- Modification: text + image → image

1 https://huggingface.co/docs/diffusers
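To make "map noise to an image" concrete: during training, a diffusion model sees images corrupted by a forward noising process and learns to predict the added noise. The minimal NumPy sketch below shows that forward step in the DDPM style. It assumes a linear beta schedule from 1e-4 to 0.02 over 1000 steps, which is a common default and not something this slide specifies. `add_noise` is a hypothetical helper name.

```python
import numpy as np

# Assumed DDPM-style linear noise schedule (not specified on the slide)
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alpha_bars = np.cumprod(1.0 - betas)  # fraction of the signal variance kept up to step t

def add_noise(x0, t, rng):
    """Hypothetical helper: x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return xt, eps  # training teaches the model to predict eps from (xt, t)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(3, 64, 64))  # stand-in "image" scaled to [-1, 1]
x_early, _ = add_noise(x0, 10, rng)
x_late, _ = add_noise(x0, T - 1, rng)

# Correlation with the clean image: high early on, near zero at the last step
corr_early = np.corrcoef(x0.ravel(), x_early.ravel())[0, 1]
corr_late = np.corrcoef(x0.ravel(), x_late.ravel())[0, 1]
print(f"t=10: {corr_early:.3f}, t={T - 1}: {corr_late:.3f}")
```

Generation runs this process in reverse. It starts from pure noise and uses the trained model's noise predictions to denoise step by step. Conditioning, such as a CLIP text embedding, steers each of those predictions.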

Custom image editing

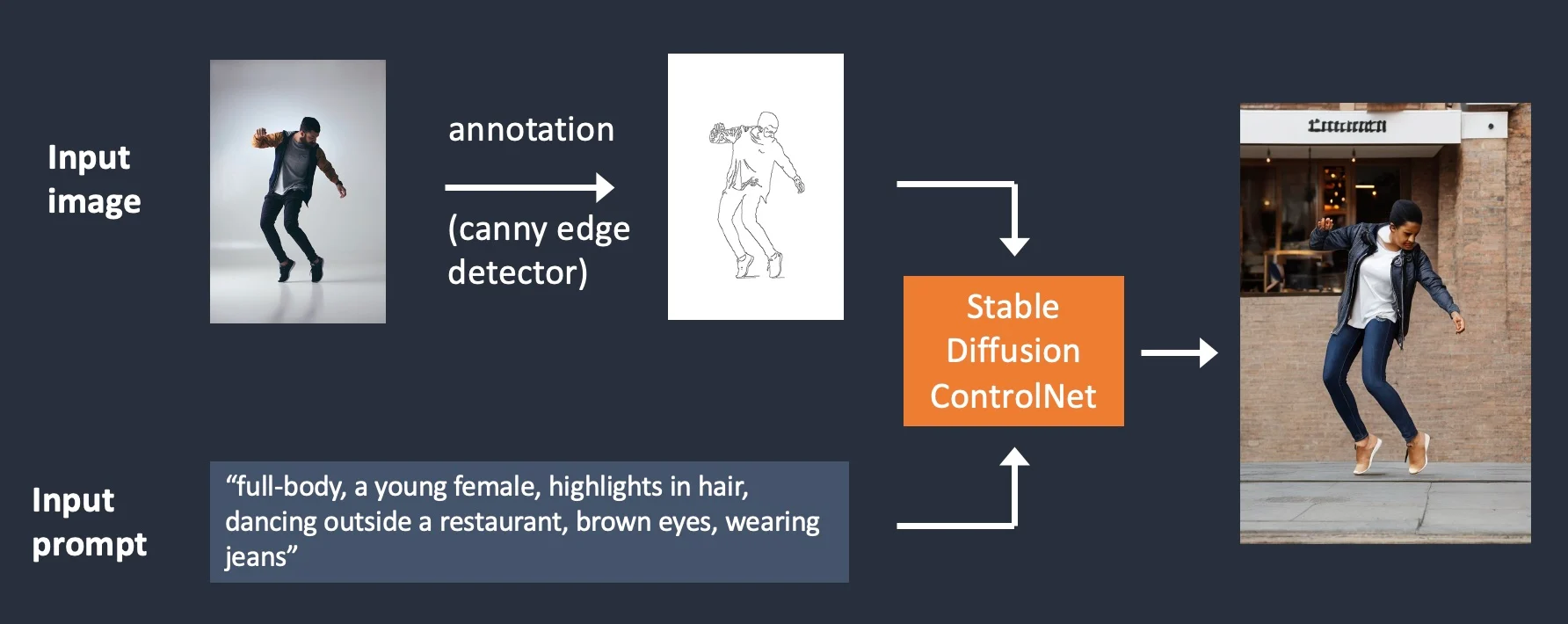

ControlNet: image and text-guided conditional generation

1 https://stable-diffusion-art.com/controlnet/
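The slide does not show how ControlNet injects the control image. In the ControlNet design, a trainable copy of the diffusion model's encoder blocks receives the control image. The copy's output is then added back to the frozen model through "zero convolutions", which are 1x1 convolutions initialized to zero, so training starts from the unchanged base model. The toy NumPy sketch below illustrates that equation for a single block. The 1x1 convolutions are modeled as channel-mixing matrices, and all weights are random placeholders. This is an illustration only, not the diffusers implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
C = 4  # channels of a toy feature map

# Toy stand-ins for one frozen block and its trainable copy (random placeholder weights)
W_frozen = rng.standard_normal((C, C))
W_copy = W_frozen.copy()  # the copy starts from the frozen weights

def block(x, W):
    # 1x1 convolution (channel mixing) followed by ReLU; x has shape (C, H, W)
    return np.maximum(np.einsum("oc,chw->ohw", W, x), 0.0)

def zero_conv(x, W_zero):
    # 1x1 convolution whose weights are initialized to zero
    return np.einsum("oc,chw->ohw", W_zero, x)

Z_in = np.zeros((C, C))
Z_out = np.zeros((C, C))

x = rng.standard_normal((C, 8, 8))     # features from the diffusion model
cond = rng.standard_normal((C, 8, 8))  # encoded control image (e.g. an edge map)

# Frozen output plus the zero-convolved output of the trainable copy
y_base = block(x, W_frozen)
y_control = y_base + zero_conv(block(x + zero_conv(cond, Z_in), W_copy), Z_out)

print(np.allclose(y_base, y_control))  # True: at initialization the control branch adds nothing
```

The zero-convolution weights still receive non-zero gradients once training starts. As a result, the control branch gradually learns to steer the output without disturbing the pretrained model at step 0.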

Custom image editing

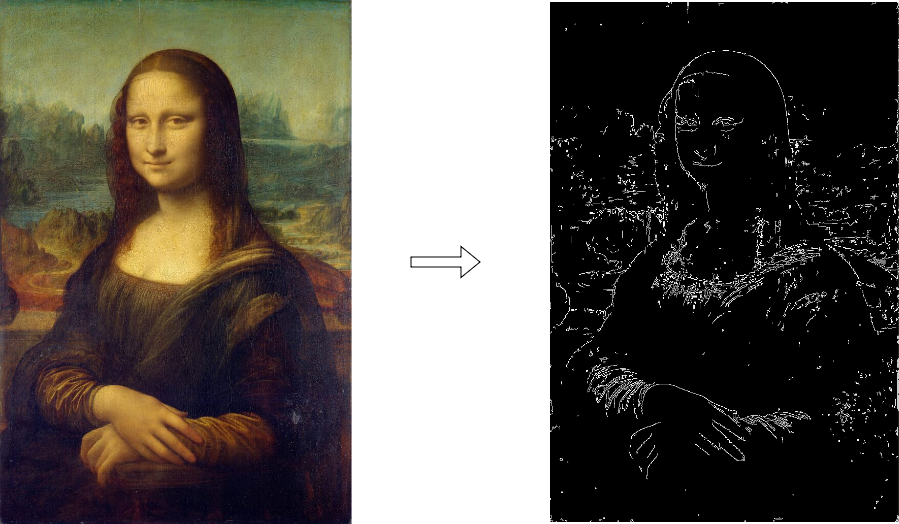


Custom image editing

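```python
import cv2
import numpy as np
from PIL import Image
from diffusers.utils import load_image

# Load the source image (returned as a PIL image)
image = load_image("http://301.nz/o81bf")

# Detect edges with the Canny algorithm (low/high hysteresis thresholds: 100, 200)
image = cv2.Canny(np.array(image), 100, 200)

# Canny returns a single-channel (H, W) edge map; stack it into 3 channels
image = image[:, :, None]
image = np.concatenate([image, image, image], axis=2)

# Convert back to PIL to use as the ControlNet conditioning image
canny_image = Image.fromarray(image)
```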
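The `image[:, :, None]` and `np.concatenate` steps turn Canny's single-channel (H, W) edge map into the (H, W, 3) RGB layout that `Image.fromarray` and the pipeline expect. The small NumPy check below runs those steps on a synthetic edge map and compares them with an equivalent one-liner using `np.repeat`. The edge map is a hypothetical stand-in for `cv2.Canny` output, which is 0 or 255 per pixel.

```python
import numpy as np

# Hypothetical stand-in for cv2.Canny output: uint8, values 0 (no edge) or 255 (edge)
edges = np.zeros((4, 5), dtype=np.uint8)
edges[1, :] = 255  # a horizontal edge
edges[:, 3] = 255  # a vertical edge

# Slide's approach: add a channel axis, then stack three copies along it
stacked = edges[:, :, None]
stacked = np.concatenate([stacked, stacked, stacked], axis=2)

# Equivalent one-liner
repeated = np.repeat(edges[:, :, None], 3, axis=2)

print(stacked.shape, stacked.dtype)       # (4, 5, 3) uint8
print(np.array_equal(stacked, repeated))  # True
```

Keeping the dtype as uint8 matters, because `Image.fromarray` maps a uint8 (H, W, 3) array to an RGB image.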

Custom image editing

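```python
import torch
from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

# ControlNet trained on Canny edge maps, loaded in half precision
controlnet = ControlNetModel.from_pretrained(
    "lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16
)

# Stable Diffusion v1.5 with the ControlNet attached. This is the same repo ID as the
# inpainting example; the original "runwayml/stable-diffusion-v1-5" repo is no longer hosted.
pipe = StableDiffusionControlNetPipeline.from_pretrained(
    "stable-diffusion-v1-5/stable-diffusion-v1-5",
    controlnet=controlnet,
    torch_dtype=torch.float16,
)
pipe = pipe.to("cuda")  # float16 weights are intended for GPU inference
```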
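Passing `torch_dtype=torch.float16` stores each weight in 2 bytes instead of the 4 bytes used by float32. This roughly halves the memory needed for the model weights. The arithmetic below uses NumPy dtypes, which have the same element sizes as the torch ones, and a hypothetical parameter count of one billion. That count is not a figure for these specific checkpoints.

```python
import numpy as np

n_params = 1_000_000_000  # hypothetical parameter count, for illustration only

sizes_gib = {}
for dtype in (np.float32, np.float16):
    name = np.dtype(dtype).name  # "float32" or "float16"
    nbytes = n_params * np.dtype(dtype).itemsize
    sizes_gib[name] = nbytes / 1024**3
    print(f"{name}: {sizes_gib[name]:.2f} GiB")
# float32: 3.73 GiB
# float16: 1.86 GiB
```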

Custom image editing

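```python
# Prompt and a seeded generator for reproducible results
prompt = ["Albert Einstein, best quality, extremely detailed"]
generator = [torch.Generator(device="cuda").manual_seed(2)]

output = pipe(
    prompt,
    image=canny_image,  # edge map that constrains the layout
    negative_prompt=["monochrome, lowres, bad anatomy, worst quality, low quality"],
    generator=generator,
    num_inference_steps=20,
)
image = output.images[0]  # the generated PIL image
```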

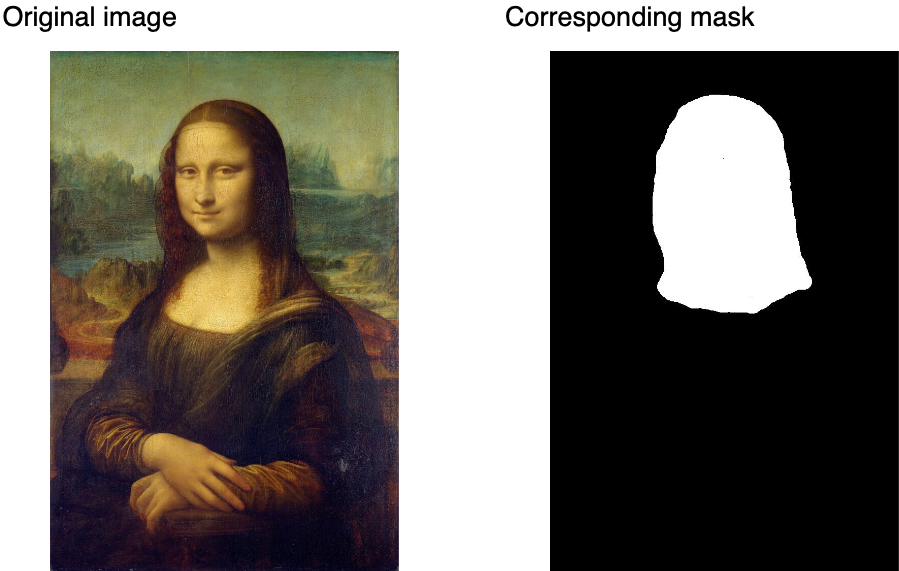
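The `negative_prompt` and `generator` arguments both act inside the denoising loop. In diffusers' Stable Diffusion pipelines, the negative prompt's embedding replaces the empty-prompt (unconditional) embedding used in classifier-free guidance. The seeded generator fixes the initial latent noise. The toy NumPy sketch below shows both effects. `fake_unet` is a hypothetical stand-in for the UNet, and NumPy's generator stands in for `torch.Generator`, so the actual numbers differ from the pipeline's. The guidance scale of 7.5 is the pipeline default, an assumption worth checking against your diffusers version.

```python
import numpy as np

def initial_latents(seed, height=512, width=512):
    # SD 1.x latents have 4 channels at 1/8 of the image resolution
    rng = np.random.default_rng(seed)
    return rng.standard_normal((1, 4, height // 8, width // 8))

def fake_unet(latents, text_embedding):
    # Hypothetical stand-in for the UNet's noise prediction
    return 0.1 * latents + text_embedding

def guided_noise(latents, prompt_emb, negative_emb, guidance_scale=7.5):
    # Classifier-free guidance: move away from the negative prompt, toward the prompt
    noise_neg = fake_unet(latents, negative_emb)
    noise_pos = fake_unet(latents, prompt_emb)
    return noise_neg + guidance_scale * (noise_pos - noise_neg)

# The same seed reproduces the same starting noise, and hence the same image
a = initial_latents(seed=2)
b = initial_latents(seed=2)
print(a.shape, np.array_equal(a, b))  # (1, 4, 64, 64) True

noise = guided_noise(a, prompt_emb=1.0, negative_emb=-1.0)
```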

Image inpainting

- Generate new content localized to a certain region

- Binary mask: white (1) marks the region to regenerate, black (0) the region to keep

- Masks come from a segmentation model or are pre-defined by the user (e.g., using InpaintingMask-Generation)
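The sketch below shows what the white/black convention means numerically. It builds a rectangular binary mask and composites a generated image into an original one. Where the mask is 1, the generated pixels are used; where it is 0, the original pixels are kept. This is a simplified pixel-space illustration of how the mask localizes the edit, since the pipelines themselves apply the mask to latents during denoising. `rect_mask` and `composite` are hypothetical helpers.

```python
import numpy as np

def rect_mask(height, width, top, left, bottom, right):
    # Hypothetical helper: 1.0 (white) inside the box to regenerate, 0.0 (black) elsewhere
    mask = np.zeros((height, width), dtype=np.float32)
    mask[top:bottom, left:right] = 1.0
    return mask

def composite(original, generated, mask):
    # Keep original pixels where mask == 0, take generated pixels where mask == 1
    m = mask[:, :, None]  # broadcast over the RGB channels
    return m * generated + (1.0 - m) * original

original = np.zeros((6, 6, 3), dtype=np.float32)  # all-black "photo"
generated = np.ones((6, 6, 3), dtype=np.float32)  # all-white "new content"
mask = rect_mask(6, 6, top=2, left=2, bottom=4, right=5)

result = composite(original, generated, mask)
print(result[:, :, 0])  # 1s only inside rows 2-3, columns 2-4
```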

Image inpainting

```python
import torch
from diffusers import ControlNetModel, StableDiffusionControlNetInpaintPipeline

# ControlNet v1.1 checkpoint trained for inpainting, loaded in half precision
controlnet = ControlNetModel.from_pretrained(
    "lllyasviel/control_v11p_sd15_inpaint", torch_dtype=torch.float16, use_safetensors=True
)
pipe = StableDiffusionControlNetInpaintPipeline.from_pretrained(
    "stable-diffusion-v1-5/stable-diffusion-v1-5",
    controlnet=controlnet,
    torch_dtype=torch.float16,
    use_safetensors=True,
)
pipe = pipe.to("cuda")  # move to GPU, as in the Canny example
```

Image inpainting

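```python
import numpy as np
import torch

def make_inpaint_condition(image, image_mask):
    # Scale RGB pixels and the grayscale mask to [0, 1]
    image = np.array(image.convert("RGB")).astype(np.float32) / 255.0
    image_mask = np.array(image_mask.convert("L")).astype(np.float32) / 255.0
    assert image.shape[:2] == image_mask.shape, "image and mask must have the same size"

    # Mark masked (white) pixels with -1 so the ControlNet knows where to inpaint
    image[image_mask > 0.5] = -1.0

    # (H, W, C) -> (1, C, H, W) batch tensor
    image = np.expand_dims(image, 0).transpose(0, 3, 1, 2)
    image = torch.from_numpy(image)
    return image

# init_image and mask_image are PIL images of the same size, loaded beforehand (not shown)
control_image = make_inpaint_condition(init_image, mask_image)
```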
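You can check what `make_inpaint_condition` produces without loading images or a GPU by running the same steps on small synthetic arrays. The NumPy-only variant below, a hypothetical `make_inpaint_condition_np`, takes uint8 arrays instead of PIL images and returns an array instead of a torch tensor. It shows the output layout and the -1 marker.

```python
import numpy as np

def make_inpaint_condition_np(image_rgb, mask_l):
    # Same steps as the slide's function, on uint8 arrays instead of PIL images
    image = image_rgb.astype(np.float32) / 255.0  # (H, W, 3) in [0, 1]
    mask = mask_l.astype(np.float32) / 255.0      # (H, W) in [0, 1]
    image[mask > 0.5] = -1.0                      # masked pixels -> -1 in all channels
    return np.expand_dims(image, 0).transpose(0, 3, 1, 2)  # (1, 3, H, W)

image_rgb = np.full((4, 4, 3), 128, dtype=np.uint8)  # flat gray image
mask_l = np.zeros((4, 4), dtype=np.uint8)
mask_l[0, 0] = 255                                   # one white (masked) pixel

cond = make_inpaint_condition_np(image_rgb, mask_l)
print(cond.shape)        # (1, 3, 4, 4)
print(cond[0, :, 0, 0])  # [-1. -1. -1.]
```

Unmasked pixels keep their [0, 1] values, so -1 is an out-of-range marker that cannot be confused with real image content.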

Image inpainting

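```python
output = pipe(
    "The head of the Mona Lisa in the same style and quality as the original Mona Lisa "
    "with a clear smile and a slightly smaller head size",
    num_inference_steps=40,
    eta=1.0,  # DDIM noise parameter; only used by schedulers that accept it, ignored otherwise
    image=init_image,            # original image
    mask_image=mask_image,       # white = region to regenerate
    control_image=control_image, # output of make_inpaint_condition
).images[0]
```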
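The `eta` argument comes from DDIM sampling and applies when the pipeline uses a DDIM-style scheduler. It scales how much fresh noise is injected at each denoising step. With `eta=0.0`, sampling is deterministic, and with `eta=1.0` the noise level matches DDPM. The NumPy sketch below computes the DDIM noise scale for one step. It assumes a linear beta schedule and evenly spaced timesteps, neither of which the slide specifies.

```python
import numpy as np

# Assumed linear beta schedule (the slide does not specify one)
betas = np.linspace(1e-4, 0.02, 1000)
alpha_bars = np.cumprod(1.0 - betas)

def ddim_sigma(t, t_prev, eta):
    # sigma_t = eta * sqrt((1 - a_prev) / (1 - a_t)) * sqrt(1 - a_t / a_prev)
    a_t, a_prev = alpha_bars[t], alpha_bars[t_prev]
    return eta * np.sqrt((1 - a_prev) / (1 - a_t)) * np.sqrt(1 - a_t / a_prev)

# 40 inference steps, as in the call above, spaced evenly over 1000 training steps
timesteps = np.arange(0, 1000, 1000 // 40)[::-1]  # 975, 950, ..., 0
t, t_prev = timesteps[0], timesteps[1]

print(ddim_sigma(t, t_prev, eta=0.0))      # 0.0: deterministic update
print(ddim_sigma(t, t_prev, eta=1.0) > 0)  # True: fresh noise is added
```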

Let's practice!
