Optimizers, training, and evaluation

Intermediate Deep Learning with PyTorch

Michal Oleszak

Machine Learning Engineer

Training loop

    import torch.nn as nn
    import torch.optim as optim

    criterion = nn.BCELoss()
    optimizer = optim.SGD(net.parameters(), lr=0.01)

    for epoch in range(1000):
        for features, labels in dataloader_train:
            optimizer.zero_grad()
            outputs = net(features)
            loss = criterion(
                outputs, labels.view(-1, 1)
            )
            loss.backward()
            optimizer.step()

- Define loss function and optimizer
  - BCELoss for binary classification
  - SGD optimizer

- Iterate over epochs and training batches

- Clear gradients

- Forward pass: get model's outputs

- Compute loss

- Compute gradients

- Optimizer's step: update params
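To try the loop end to end, here is a minimal self-contained sketch. The slide assumes `net` and `dataloader_train` already exist, so the tiny network, the random synthetic data, the batch size, and the reduced epoch count below are all assumptions made for illustration. The network ends in `nn.Sigmoid()` because `nn.BCELoss` expects probabilities in [0, 1], and the labels are floats because BCELoss compares them with those probabilities.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical synthetic data: 200 samples, 4 features, binary float labels
features_all = torch.randn(200, 4)
labels_all = (features_all.sum(dim=1) > 0).float()  # shape (200,)
dataloader_train = DataLoader(
    TensorDataset(features_all, labels_all), batch_size=32, shuffle=True
)

# Hypothetical model; the final Sigmoid turns outputs into probabilities for BCELoss
net = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1), nn.Sigmoid())

criterion = nn.BCELoss()
optimizer = optim.SGD(net.parameters(), lr=0.01)

losses = []
for epoch in range(100):  # fewer epochs than the slide's 1000, to keep it quick
    epoch_loss = 0.0
    for features, labels in dataloader_train:
        optimizer.zero_grad()                          # clear old gradients
        outputs = net(features)                        # forward pass, shape (batch, 1)
        loss = criterion(outputs, labels.view(-1, 1))  # labels reshaped to (batch, 1)
        loss.backward()                                # compute gradients
        optimizer.step()                               # update parameters
        epoch_loss += loss.item()
    losses.append(epoch_loss / len(dataloader_train))  # mean loss over the epoch

print(f"first epoch loss: {losses[0]:.4f}, last epoch loss: {losses[-1]:.4f}")
```

Tracking the mean loss per epoch is a simple way to confirm that training is making progress before moving on to evaluation.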

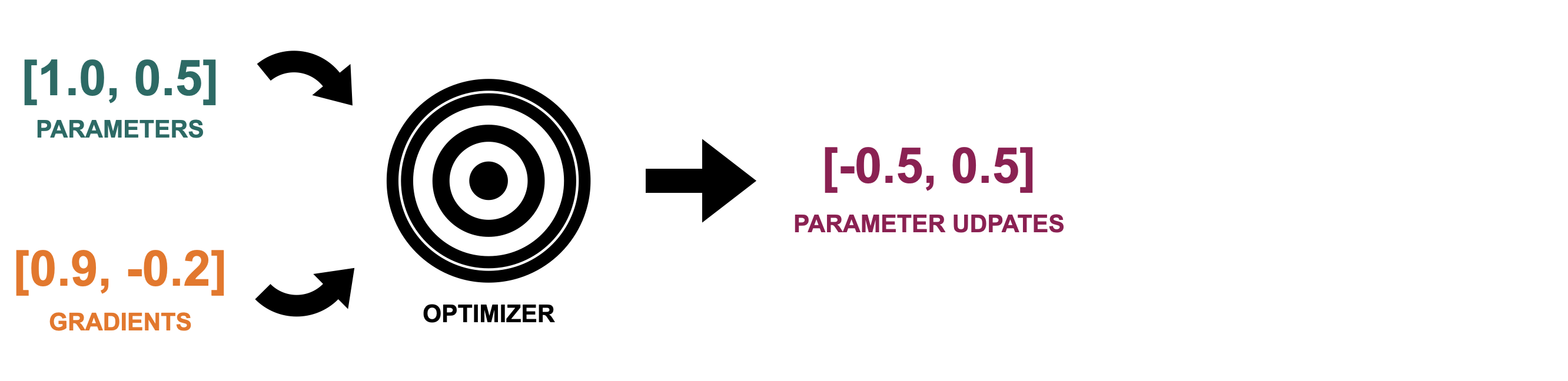

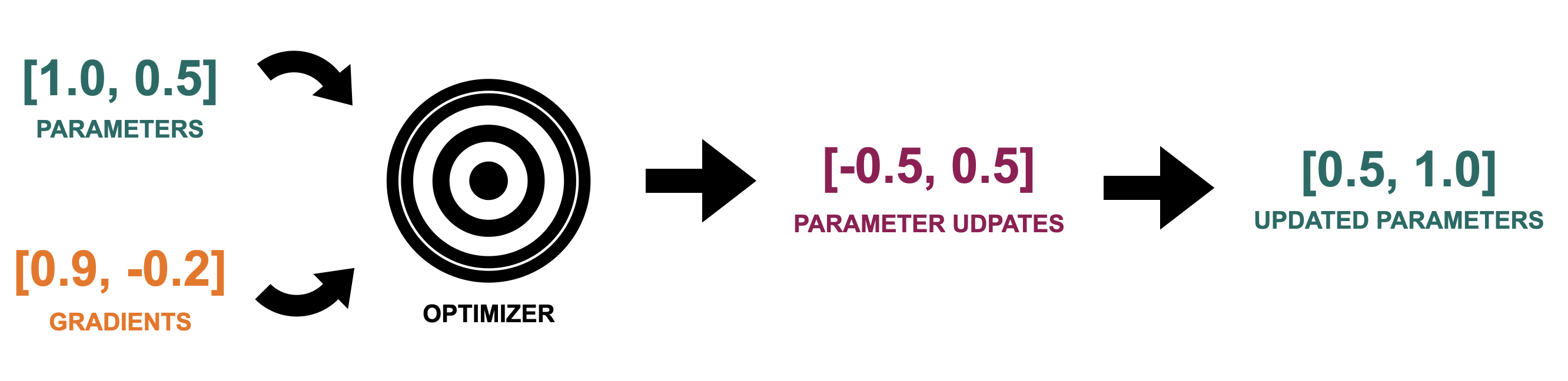

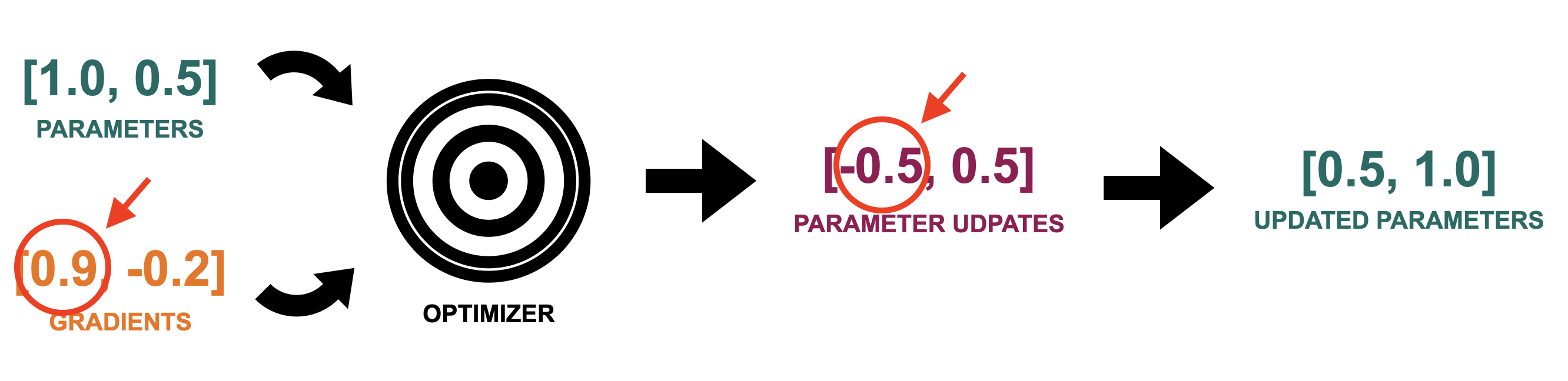

How an optimizer works
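The core idea can be checked on a single parameter: `loss.backward()` stores the gradient in the parameter's `.grad`, and `optimizer.step()` then moves the parameter using that gradient and the learning rate. In this minimal sketch the toy loss (w - 3)^2, the starting value 1.0, and `lr=0.1` are assumptions chosen for illustration; for plain SGD the new value should be the old value minus the learning rate times the gradient.

```python
import torch

# Toy setup (assumed for illustration): one parameter, loss = (w - 3)^2
w = torch.tensor([1.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.1)

optimizer.zero_grad()
loss = ((w - 3) ** 2).sum()
loss.backward()  # gradient is 2 * (w - 3) = -4.0

# What plain SGD should produce: w - lr * grad = 1.0 - 0.1 * (-4.0), about 1.4
expected = w.detach().clone() - 0.1 * w.grad

optimizer.step()  # updates w in place, moving it toward the minimum at 3
print(w.grad.item(), w.item(), expected.item())
```

Because the gradient is negative, the update increases w, moving it toward the minimum of the loss.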

Stochastic Gradient Descent (SGD)

optimizer = optim.SGD(net.parameters(), lr=0.01)

- Update depends only on the learning rate and the current gradient

- Simple and efficient; suited to basic models

- Rarely used in practice
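Written out, the update that `optim.SGD` performs with its default settings (no momentum, no weight decay) is shown below, where $\theta_t$ is a parameter at step $t$, $\eta$ is the learning rate `lr`, and $\nabla_{\theta} L$ is the gradient of the loss; this notation is ours, not the slides'.

```latex
\theta_{t+1} = \theta_t - \eta \, \nabla_{\theta} L(\theta_t)
```

For example, with `lr=0.01`, a parameter whose gradient is 2.0 moves by -0.02 in one step.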

Adaptive Gradient (Adagrad)

optimizer = optim.Adagrad(net.parameters(), lr=0.01)

- Adapts learning rate for each parameter

- Good for sparse data

- May decrease the learning rate too fast
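A minimal sketch of why Adagrad's effective learning rate can shrink too fast: it divides the learning rate by the square root of the running sum of all past squared gradients, and that sum only grows. The toy loss 2w (constant gradient 2.0) and the hand-written update are assumptions for illustration; the manual rule is checked against `optim.Adagrad` with its defaults (`lr_decay=0`, `initial_accumulator_value=0`, `eps=1e-10`).

```python
import torch

lr, eps = 0.01, 1e-10  # eps matches optim.Adagrad's default
w = torch.tensor([0.0], requires_grad=True)
optimizer = torch.optim.Adagrad([w], lr=lr)

w_manual = 0.0
sum_sq = 0.0  # running SUM of squared gradients: it never decays
manual_steps = []

for t in range(1000):
    optimizer.zero_grad()
    loss = (2.0 * w).sum()  # toy loss with a constant gradient of 2.0
    loss.backward()
    optimizer.step()

    g = 2.0
    sum_sq += g ** 2
    step = lr * g / (sum_sq ** 0.5 + eps)  # here this equals lr / sqrt(t + 1)
    w_manual -= step
    manual_steps.append(step)

print(f"first step {manual_steps[0]:.5f}, last step {manual_steps[-1]:.5f}")
# first step 0.01000, last step 0.00032
```

Even though the gradient never changes, the step after 1000 updates is about 30 times smaller than the first one.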

Root Mean Square Propagation (RMSprop)

optimizer = optim.RMSprop(net.parameters(), lr=0.01)

- Adapts the update for each parameter based on the size of its recent gradients (a moving average of their squares)
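Unlike Adagrad, which accumulates all past squared gradients, RMSprop uses an exponential moving average, so old gradients fade out and the step size levels off instead of shrinking toward zero. This minimal sketch uses the same kind of toy loss with a constant gradient of 2.0 (an assumption for illustration) and checks a hand-written update against `optim.RMSprop` with its defaults (`alpha=0.99`, `eps=1e-8`, no momentum, not centered).

```python
import torch

lr, alpha, eps = 0.01, 0.99, 1e-8  # alpha and eps match optim.RMSprop's defaults
w = torch.tensor([0.0], requires_grad=True)
optimizer = torch.optim.RMSprop([w], lr=lr)

w_manual = 0.0
square_avg = 0.0  # exponential moving average of squared gradients
manual_steps = []

for t in range(1000):
    optimizer.zero_grad()
    loss = (2.0 * w).sum()  # toy loss with a constant gradient of 2.0
    loss.backward()
    optimizer.step()

    g = 2.0
    square_avg = alpha * square_avg + (1 - alpha) * g ** 2  # old gradients fade out
    step = lr * g / (square_avg ** 0.5 + eps)
    w_manual -= step
    manual_steps.append(step)

print(f"first step {manual_steps[0]:.4f}, last step {manual_steps[-1]:.4f}")
# first step 0.1000, last step 0.0100
```

The step settles at roughly `lr`. The oversized early steps come from starting the moving average at zero; Adam adds a bias correction for exactly this effect.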

Adaptive Moment Estimation (Adam)

optimizer = optim.Adam(net.parameters(), lr=0.01)

- Arguably the most versatile and widely used

- RMSprop + gradient momentum

- Often used as the go-to optimizer
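A minimal sketch of what "RMSprop + gradient momentum" means in Adam's update: it keeps a moving average of gradients (the momentum term) and a moving average of squared gradients (the RMSprop term), corrects both for their zero initialization, and divides one by the square root of the other. The toy loss (w - 3)^2 and the 200-step run are assumptions for illustration; the hand-written rule is checked against `optim.Adam` with its defaults (`betas=(0.9, 0.999)`, `eps=1e-8`).

```python
import math
import torch

lr, beta1, beta2, eps = 0.01, 0.9, 0.999, 1e-8  # match optim.Adam's defaults
w = torch.tensor([0.0], requires_grad=True)
optimizer = torch.optim.Adam([w], lr=lr)

w_manual, m, v = 0.0, 0.0, 0.0  # parameter, 1st moment (momentum), 2nd moment
for t in range(1, 201):
    optimizer.zero_grad()
    loss = ((w - 3.0) ** 2).sum()  # toy loss with its minimum at w = 3
    loss.backward()
    optimizer.step()

    g = 2.0 * (w_manual - 3.0)            # gradient of the toy loss at w_manual
    m = beta1 * m + (1 - beta1) * g       # moving average of gradients (momentum)
    v = beta2 * v + (1 - beta2) * g ** 2  # moving average of squared gradients (RMSprop)
    m_hat = m / (1 - beta1 ** t)          # bias corrections for the zero initialization
    v_hat = v / (1 - beta2 ** t)
    w_manual -= lr * m_hat / (math.sqrt(v_hat) + eps)

print(w.item(), w_manual)
```

Both values agree up to float32 rounding, and w has moved from 0 toward the minimum at 3.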

Model evaluation

    import torch
    from torchmetrics import Accuracy

    acc = Accuracy(task="binary")

    net.eval()
    with torch.no_grad():
        for features, labels in dataloader_test:
            outputs = net(features)
            preds = (outputs >= 0.5).float()
            acc(preds, labels.view(-1, 1))
    accuracy = acc.compute()
    print(f"Accuracy: {accuracy}")

Accuracy: 0.6759443283081055

- Set up accuracy metric

- Put model in eval mode and iterate over test data batches with no gradients

- Pass data to model to get predicted probabilities

- Compute predicted labels

- Update accuracy metric
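If torchmetrics is not available, binary accuracy can also be accumulated by hand. This is a swapped-in alternative to the slide's `Accuracy(task="binary")`, not its implementation. The sketch assumes a hypothetical untrained `net` ending in a sigmoid and a synthetic `dataloader_test`, so the accuracy value itself is meaningless; only the loop structure (eval mode, `torch.no_grad()`, thresholding at 0.5) follows the slide.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical test data and untrained model, for illustration only
features_all = torch.randn(100, 4)
labels_all = (features_all.sum(dim=1) > 0).float()
dataloader_test = DataLoader(TensorDataset(features_all, labels_all), batch_size=16)
net = nn.Sequential(nn.Linear(4, 1), nn.Sigmoid())

correct, total = 0, 0
net.eval()  # switch layers such as dropout and batch norm to evaluation behavior
with torch.no_grad():  # no gradient tracking is needed for evaluation
    for features, labels in dataloader_test:
        outputs = net(features)           # predicted probabilities, shape (batch, 1)
        preds = (outputs >= 0.5).float()  # threshold at 0.5 to get predicted labels
        correct += (preds == labels.view(-1, 1)).sum().item()
        total += labels.size(0)

accuracy = correct / total  # fraction of correctly classified test samples
print(f"Accuracy: {accuracy}")
```

Counting correct predictions per batch and dividing once at the end gives the same result as evaluating the whole test set at once, which is also why a metric object is updated per batch and only computed after the loop.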

Let's practice!
