High-risk deployer obligations

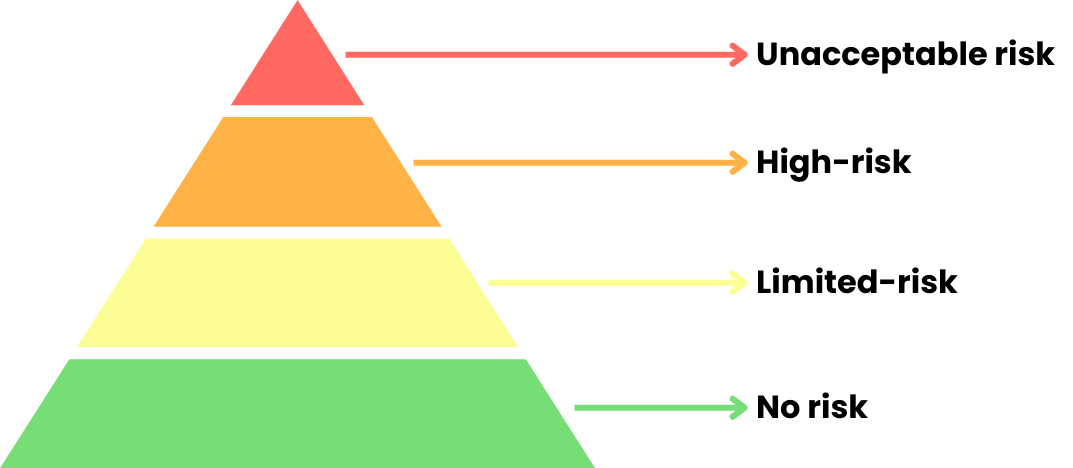

Understanding the EU AI Act

Dan Nechita

Lead Technical Negotiator, EU AI Act

Obligations for deployers

Deployers and providers share obligations to ensure that AI systems do not pose threats to health, safety and fundamental rights.

So what are those obligations?

- Deployers must use the AI system in line with the provider's instructions

- Deployers must keep the automatically generated logs and monitor for malfunctions

- Deployers must report serious incidents to the provider and the authorities

Notification required

- Deployers must inform workers about AI usage in task assignment or performance monitoring.

- Deployers using high-risk AI for decisions must inform affected individuals.

- Examples: life insurance premiums, educational institution admissions.

Public authorities

- Public authorities and essential service deployers must conduct a fundamental rights impact assessment (FRIA).

- The FRIA checks that the AI system does not breach fundamental rights, similar to the DPIA under the GDPR.

- High-risk AI use by public authorities must be registered in the EU database.

Deployers can become providers!

- Provider and deployer roles can overlap when a deployer substantially modifies an AI system.

- Modifying an AI system's intended purpose makes the deployer a provider, with provider obligations.

- Example: A company that uses GPT-4 for hiring decisions becomes a provider of a high-risk AI system.

Looking back

Thank you!
