Monitoring and logging

Deploying AI into Production with FastAPI

Matt Eckerle

Software and Data Engineering Leader

Why monitoring and logging?

- Can't debug in production

- App supervisor needs a simple health check

- Logging key metrics over time

Setting up custom logging

- Load the uvicorn error logger

- Add custom logs to app startup

- Add custom logs to endpoints

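```python
from fastapi import FastAPI
import logging

# Reuse uvicorn's logger so app messages share its handlers and level
logger = logging.getLogger('uvicorn.error')
app = FastAPI()
logger.info("App is running!")

@app.get('/')
async def main():
    logger.debug('GET /')  # only emitted when the log level is debug
    return 'ok'
```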
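Why reuse the `uvicorn.error` logger? `logging.getLogger` returns the same logger object for a given name, so messages from app code flow through whatever handlers and level uvicorn has configured on it. The sketch below uses only the standard library; the handler setup is a simplified stand-in for uvicorn's own configuration, not its actual output format.

```python
import io
import logging

# Stand-in for uvicorn's startup configuration (assumption for this sketch):
# attach a handler and a level to the "uvicorn.error" logger.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(levelname)s: %(message)s"))
server_logger = logging.getLogger("uvicorn.error")
server_logger.addHandler(handler)
server_logger.setLevel(logging.INFO)

# In application code: the same name returns the same logger object,
# so app messages use the server's handler and level.
app_logger = logging.getLogger("uvicorn.error")
assert app_logger is server_logger

app_logger.info("App is running!")
app_logger.debug("GET /")  # below INFO, so this record is filtered out

print(stream.getvalue(), end="")
```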

Logging when a model is loaded

```python
from fastapi import FastAPI
import logging
import joblib

logger = logging.getLogger('uvicorn.error')
model = joblib.load('penguin_classifier.pkl')
logger.info("Penguin classifier loaded successfully.")
app = FastAPI()
```
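Logging only the success case leaves a gap: if the model file is missing or corrupt, the log should say so before the app fails. A minimal sketch, assuming a hypothetical `load_model` helper and using the standard-library `pickle` module in place of `joblib` so it runs without extra dependencies:

```python
import logging
import os
import pickle
import tempfile

logger = logging.getLogger("uvicorn.error")

def load_model(path):
    """Load a pickled model, logging success or failure (hypothetical helper)."""
    try:
        with open(path, "rb") as f:
            model = pickle.load(f)
    except (OSError, EOFError, pickle.UnpicklingError):
        # logger.exception logs at ERROR level and includes the traceback
        logger.exception("Failed to load model from %s", path)
        raise
    logger.info("Model loaded successfully from %s", path)
    return model

# Usage with a throwaway "model" (a plain dict) written to a temp file
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "model.pkl")
    with open(path, "wb") as f:
        pickle.dump({"kind": "demo"}, f)
    model = load_model(path)
```

Re-raising after logging is a deliberate choice here: an app that cannot load its model should fail at startup rather than serve errors later.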

Logging process time with middleware

```python
from fastapi import FastAPI, Request
import logging
import time

logger = logging.getLogger('uvicorn.error')
app = FastAPI()

@app.middleware("http")
async def log_process_time(request: Request, call_next):
    start_time = time.perf_counter()
    response = await call_next(request)
    process_time = time.perf_counter() - start_time
    logger.info(f"Process time was {process_time} seconds.")
    return response
```

1 https://fastapi.tiangolo.com/tutorial/middleware/

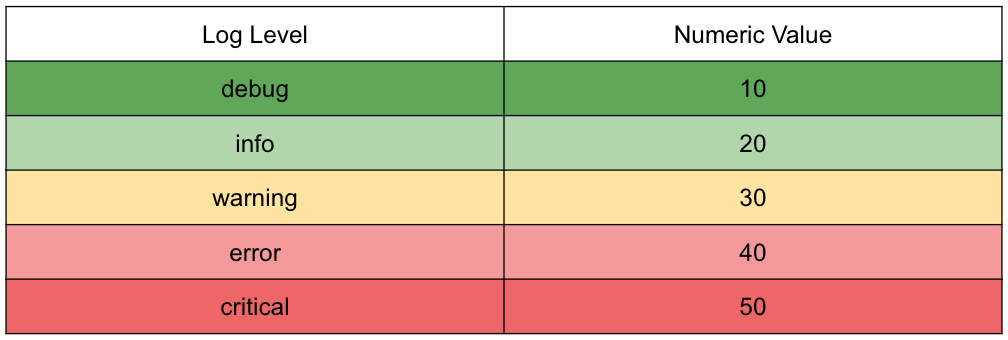
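The middleware wraps every request: it starts a timer, awaits `call_next` to run the endpoint, then logs the elapsed time. The FastAPI middleware tutorial linked above also attaches the duration as an `X-Process-Time` response header. To show the control flow without a running server, this sketch swaps in hypothetical `FakeRequest`/`FakeResponse` classes and a simulated slow endpoint; in a real app, FastAPI supplies the request, the response and `call_next`.

```python
import asyncio
import logging
import time
from dataclasses import dataclass, field

logger = logging.getLogger("uvicorn.error")

@dataclass
class FakeRequest:
    """Hypothetical stand-in for fastapi.Request with just the fields we log."""
    method: str
    path: str

@dataclass
class FakeResponse:
    """Hypothetical stand-in for a response object with mutable headers."""
    body: str
    headers: dict = field(default_factory=dict)

async def slow_endpoint(request):
    await asyncio.sleep(0.05)  # simulate model inference
    return FakeResponse(body="ok")

async def log_process_time(request, call_next):
    start_time = time.perf_counter()
    response = await call_next(request)  # run the endpoint
    process_time = time.perf_counter() - start_time
    logger.info("%s %s took %.4f seconds", request.method, request.path, process_time)
    response.headers["X-Process-Time"] = str(process_time)
    return response

response = asyncio.run(log_process_time(FakeRequest("GET", "/"), slow_endpoint))
```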

Setting the logging level

```shell
uvicorn main:app --log-level debug
```
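`--log-level` sets the minimum severity that gets emitted, which is why the `logger.debug('GET /')` call in the custom logging example stays silent at uvicorn's default `info` level. The sketch below reproduces that filtering with the standard library alone; `capture` is a hypothetical helper, and uvicorn's real logging setup involves more loggers and handlers than this.

```python
import io
import logging

def capture(level_name):
    """Return what a logger emits when its level is set to level_name."""
    stream = io.StringIO()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s: %(message)s"))
    logger = logging.getLogger(f"demo.{level_name}")
    logger.propagate = False
    logger.addHandler(handler)
    logger.setLevel(level_name.upper())  # setLevel accepts names like "DEBUG"
    logger.debug("GET /")
    logger.info("App is running!")
    logger.removeHandler(handler)  # avoid duplicate handlers on repeat calls
    return stream.getvalue()

print(capture("info"), end="")   # only the INFO line
print(capture("debug"), end="")  # both the DEBUG and INFO lines
```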

Monitoring

```python
from fastapi import FastAPI

app = FastAPI()

@app.get("/health")
async def get_health():
    return {"status": "OK"}
```

- "I'm ok!"
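On the supervisor side, a health check usually means polling this endpoint and treating anything other than a successful response with the expected body as a failure. A minimal standard-library sketch of such a probe; the URL assumes uvicorn's default host and port (`127.0.0.1:8000`), and `is_healthy` and `probe` are hypothetical names:

```python
import http.client
import json
import urllib.request

def is_healthy(status_code, body):
    """Interpret a /health response: HTTP 200 plus {"status": "OK"} is healthy."""
    if status_code != 200:
        return False
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return False
    return isinstance(payload, dict) and payload.get("status") == "OK"

def probe(url="http://127.0.0.1:8000/health", timeout=2.0):
    """Poll the endpoint once; network errors and timeouts count as unhealthy."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return is_healthy(resp.status, resp.read())
    except (OSError, http.client.HTTPException):
        # URLError, HTTPError and socket timeouts are all OSError subclasses
        return False
```

A supervisor would call `probe()` on a fixed interval and restart the app after several consecutive failures, rather than on a single missed check.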

Sharing model parameters with monitoring

```python
from fastapi import FastAPI
import joblib

model = joblib.load('penguin_classifier.pkl')
app = FastAPI()

@app.get("/health")
async def get_health():
    params = model.get_params()
    return {"status": "OK", "params": params}
```

- "I'm ok!"

- "Here are some fun facts about me!"
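One caveat when returning `model.get_params()`: for a scikit-learn pipeline or any estimator with nested components, some values are estimator objects rather than plain numbers or strings, and those may not serialize cleanly into a JSON response. A minimal sketch of a hypothetical `json_safe_params` helper that keeps primitive values and falls back to `repr()` for everything else; the `Scaler` class below is an illustrative stand-in, not a real scikit-learn type:

```python
import json

def json_safe_params(params):
    """Keep JSON-friendly primitives; replace anything else with its repr()."""
    safe = {}
    for name, value in params.items():
        if value is None or isinstance(value, (bool, int, float, str)):
            safe[name] = value
        else:
            safe[name] = repr(value)
    return safe

class Scaler:  # hypothetical stand-in for a nested estimator object
    def __repr__(self):
        return "Scaler()"

params = {"C": 1.0, "max_iter": 100, "penalty": "l2",
          "class_weight": None, "scaler": Scaler()}
safe = json_safe_params(params)
print(json.dumps(safe))
```

In the endpoint, this would mean returning `json_safe_params(model.get_params())` instead of the raw dict.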

Let's practice!
