Asynchronous processing

Deploying AI into Production with FastAPI

Matt Eckerle

Software and Data Engineering Leader

What is asynchronous processing?

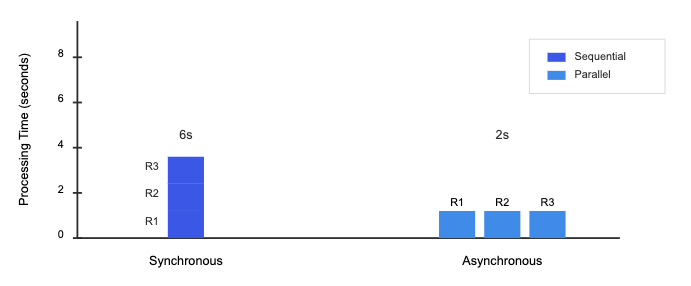

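Asynchronous processing lets a server handle multiple requests concurrently: while one request is waiting (for example, on a model call), others can make progress.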

Synchronous vs asynchronous requests
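The difference can be made concrete with a minimal standard-library sketch (no FastAPI involved). Here `asyncio.sleep` stands in for a slow operation such as waiting on a model, and the handler name `handle_request` is hypothetical. Awaiting three requests one at a time takes roughly three times the delay, while `asyncio.gather` lets their waits overlap.

```python
import asyncio
import time

# Hypothetical request handler: the sleep stands in for waiting on I/O or a model
async def handle_request(delay: float) -> str:
    await asyncio.sleep(delay)
    return "done"

async def handle_one_at_a_time(n: int, delay: float) -> float:
    start = time.perf_counter()
    for _ in range(n):
        await handle_request(delay)  # each request waits for the previous one
    return time.perf_counter() - start

async def handle_concurrently(n: int, delay: float) -> float:
    start = time.perf_counter()
    # gather starts all handlers at once; their waits overlap on the event loop
    await asyncio.gather(*(handle_request(delay) for _ in range(n)))
    return time.perf_counter() - start

sequential = asyncio.run(handle_one_at_a_time(3, 0.1))
concurrent = asyncio.run(handle_concurrently(3, 0.1))
print(f"one at a time: {sequential:.2f}s, concurrent: {concurrent:.2f}s")
```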

Turning synchronous endpoints asynchronous

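```python
@app.post("/analyze")
def analyze_sync(comment: Comment):
    # Plain def endpoint: FastAPI runs it in a worker thread,
    # which stays blocked until the model returns
    result = sentiment_model(comment.text)
    return {"sentiment": result}
```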

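```python
import asyncio

@app.post("/analyze")
async def analyze_async(comment: Comment):
    # Offload the blocking model call to a thread so the
    # event loop can keep serving other requests meanwhile
    result = await asyncio.to_thread(
        sentiment_model, comment.text
    )
    return {"sentiment": result}
```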
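Why does `asyncio.to_thread` matter inside an `async def` endpoint? The sketch below assumes a blocking stand-in, `fake_sentiment_model` (hypothetical, using `time.sleep` to mimic inference), and counts how often a background heartbeat coroutine gets to run while the model is busy. Calling the model directly inside a coroutine freezes the event loop; offloading it with `asyncio.to_thread` keeps the loop free for other work.

```python
import asyncio
import time

# Hypothetical stand-in for a blocking model call (e.g. CPU-bound inference)
def fake_sentiment_model(text: str) -> str:
    time.sleep(0.3)
    return "positive" if "great" in text else "negative"

async def heartbeat(ticks: list) -> None:
    # Records a tick every 50 ms; ticks only accumulate while the event loop is free
    while True:
        ticks.append(time.perf_counter())
        await asyncio.sleep(0.05)

async def analyze(text: str, offload: bool, ticks: list) -> str:
    beat = asyncio.create_task(heartbeat(ticks))
    await asyncio.sleep(0)  # let the heartbeat record its first tick
    if offload:
        # Runs in a worker thread; the event loop keeps scheduling the heartbeat
        result = await asyncio.to_thread(fake_sentiment_model, text)
    else:
        # Direct call: blocks the whole event loop for the full 0.3 s
        result = fake_sentiment_model(text)
    beat.cancel()
    return result

blocked, offloaded = [], []
asyncio.run(analyze("great product", offload=False, ticks=blocked))
asyncio.run(analyze("great product", offload=True, ticks=offloaded))
print(len(blocked), len(offloaded))
```

The blocked run records only the heartbeat's first tick, while the offloaded run records several. The direct-call branch corresponds to an `async def` endpoint that calls `sentiment_model` without `to_thread`; FastAPI runs plain `def` endpoints in a thread pool, so the problem arises specifically when blocking code is called inside `async def`.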

Implementing background tasks

```python
import asyncio
from typing import List

from fastapi import BackgroundTasks

@app.post("/analyze_batch")
async def analyze_batch(
    comments: Comments,
    background_tasks: BackgroundTasks
):
    async def process_comments(texts: List[str]):
        for text in texts:
            result = await asyncio.to_thread(
                sentiment_model, text)
            # result is discarded here; store or log it in real code

    # Queue the work; it runs after the response below is sent
    background_tasks.add_task(process_comments,
                              comments.texts)
    return {"message": "Processing started"}
```

`BackgroundTasks` manages the queue of comment-processing work.

`background_tasks` handles processing after the response has been sent.

`add_task` schedules `process_comments` to run in the background once the response is returned.
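The batch endpoint discards each `result`. One way to keep them is sketched below using only the standard library. `PostResponseTasks` is a hypothetical toy that mimics the idea behind `BackgroundTasks` (collect work now, run it after the response); it is not FastAPI's implementation. `results_store`, `fake_sentiment_model`, and the `job_id` field are likewise illustrative assumptions. The endpoint returns a job ID immediately, and the background job writes its results under that ID.

```python
import asyncio
import time
import uuid
from typing import Awaitable, Callable, Dict, List

# Hypothetical stand-in for the real (blocking) sentiment model
def fake_sentiment_model(text: str) -> str:
    time.sleep(0.01)
    return "positive" if "good" in text else "negative"

# Hypothetical in-memory store for finished batch results, keyed by job ID
results_store: Dict[str, List[str]] = {}

class PostResponseTasks:
    """Toy stand-in for BackgroundTasks: collects callables, runs them later."""

    def __init__(self) -> None:
        self._tasks: List[Callable[[], Awaitable[None]]] = []

    def add_task(self, func: Callable[..., Awaitable[None]], *args) -> None:
        self._tasks.append(lambda: func(*args))

    async def run(self) -> None:
        for task in self._tasks:  # run queued work in the order it was added
            await task()

async def process_comments(job_id: str, texts: List[str]) -> None:
    results = []
    for text in texts:
        results.append(await asyncio.to_thread(fake_sentiment_model, text))
    results_store[job_id] = results  # keep the results instead of discarding them

async def analyze_batch(texts: List[str], tasks: PostResponseTasks) -> dict:
    job_id = str(uuid.uuid4())
    tasks.add_task(process_comments, job_id, texts)
    return {"message": "Processing started", "job_id": job_id}

async def main() -> tuple:
    tasks = PostResponseTasks()
    response = await analyze_batch(["good service", "slow reply"], tasks)
    ready_before_run = response["job_id"] in results_store  # nothing processed yet
    await tasks.run()  # in FastAPI this step happens after the response is sent
    return response, ready_before_run

response, ready_before_run = asyncio.run(main())
print(response["message"], results_store[response["job_id"]])
```

In a real service, the in-memory dictionary would typically be replaced by a database or cache, with a separate endpoint that lets clients look up results by job ID.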

Adding error handling

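```python
import asyncio

from fastapi import HTTPException

@app.post("/analyze_comment")
async def analyze_comment(comment: Comment):
    try:
        sentiment_model = SentimentAnalyzer()
        # wait_for needs an awaitable, so run the blocking
        # model call in a thread and cap it at 5 seconds
        result = await asyncio.wait_for(
            asyncio.to_thread(sentiment_model, comment.text),
            timeout=5.0
        )
        return {"sentiment": result["label"]}
    except asyncio.TimeoutError:
        # The model did not finish within the time limit
        raise HTTPException(
            status_code=408,
            detail="Analysis timed out"
        )
    except Exception:
        # Any other failure while loading or running the model
        raise HTTPException(
            status_code=500,
            detail="Analysis failed"
        )
```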
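The timeout and failure branches can be exercised without running a server. This standard-library sketch returns a `(status_code, detail)` tuple instead of raising `HTTPException`, and uses three hypothetical models (`fast_model`, `slow_model`, `broken_model`) to trigger the success, timeout, and failure paths. It assumes the model is a blocking callable, so the call is wrapped in `asyncio.to_thread` before being passed to `asyncio.wait_for`.

```python
import asyncio
import time
from typing import Callable, Dict, Tuple

Model = Callable[[str], Dict[str, str]]

async def analyze_with_status(model: Model, text: str, timeout: float) -> Tuple[int, str]:
    try:
        # Cap the blocking model call at `timeout` seconds
        result = await asyncio.wait_for(asyncio.to_thread(model, text), timeout=timeout)
        return 200, result["label"]
    except asyncio.TimeoutError:
        return 408, "Analysis timed out"
    except Exception:
        return 500, "Analysis failed"

# Hypothetical models used to exercise each branch
def fast_model(text: str) -> Dict[str, str]:
    return {"label": "POSITIVE"}

def slow_model(text: str) -> Dict[str, str]:
    time.sleep(0.5)  # deliberately longer than the timeout used below
    return {"label": "POSITIVE"}

def broken_model(text: str) -> Dict[str, str]:
    raise RuntimeError("model failed to load")

for model in (fast_model, slow_model, broken_model):
    print(model.__name__, asyncio.run(analyze_with_status(model, "Great!", timeout=0.1)))
```

One caveat: when `wait_for` times out, only the await is cancelled. The worker thread running `slow_model` continues until the call returns, so a timeout frees the request but not the compute it was using.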

Let's practice!
