Loading a pre-trained model

Deploying AI into Production with FastAPI

Matt Eckerle

Software and Data Engineering Leader

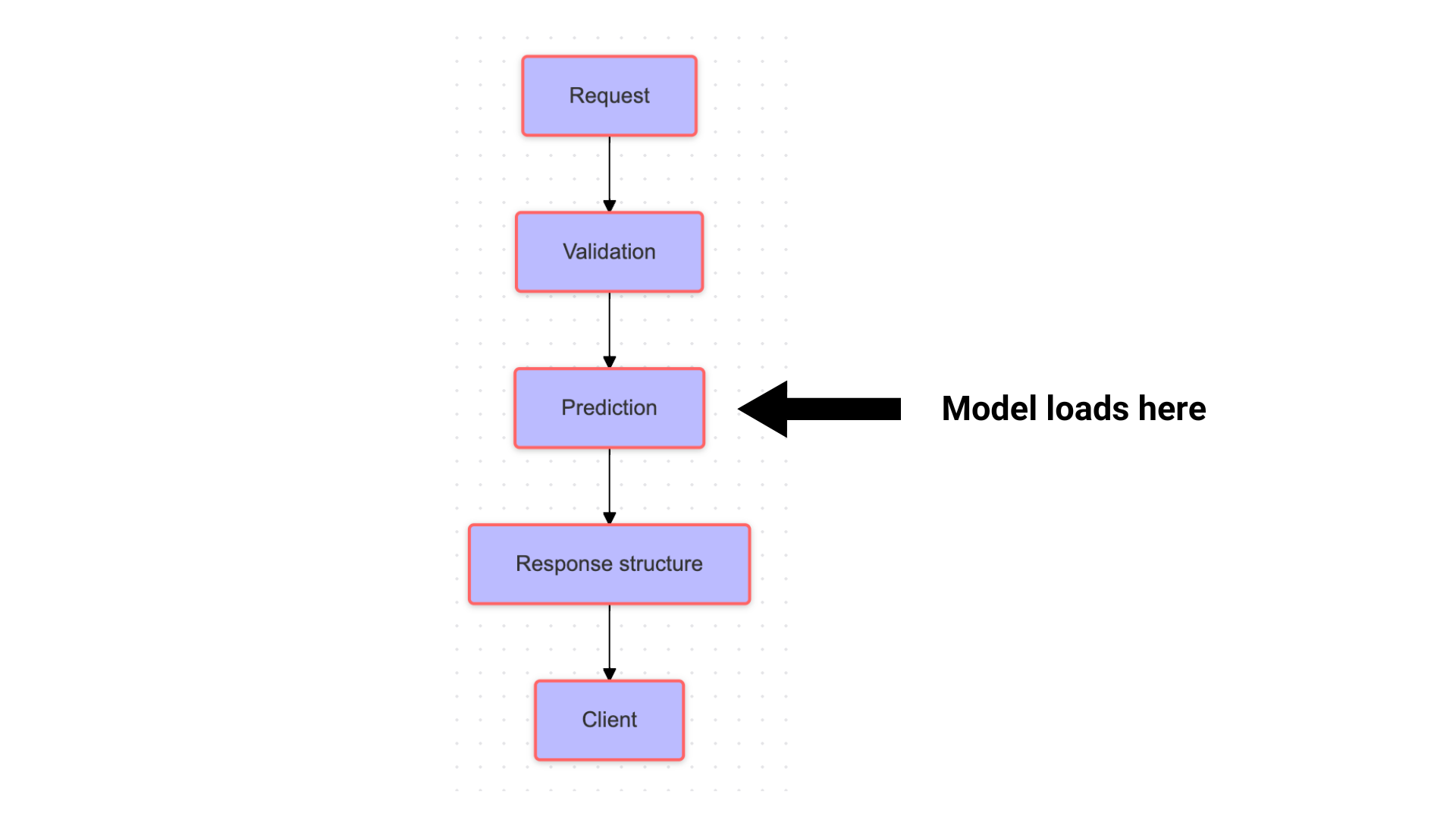

Current structure

Challenge with loading models

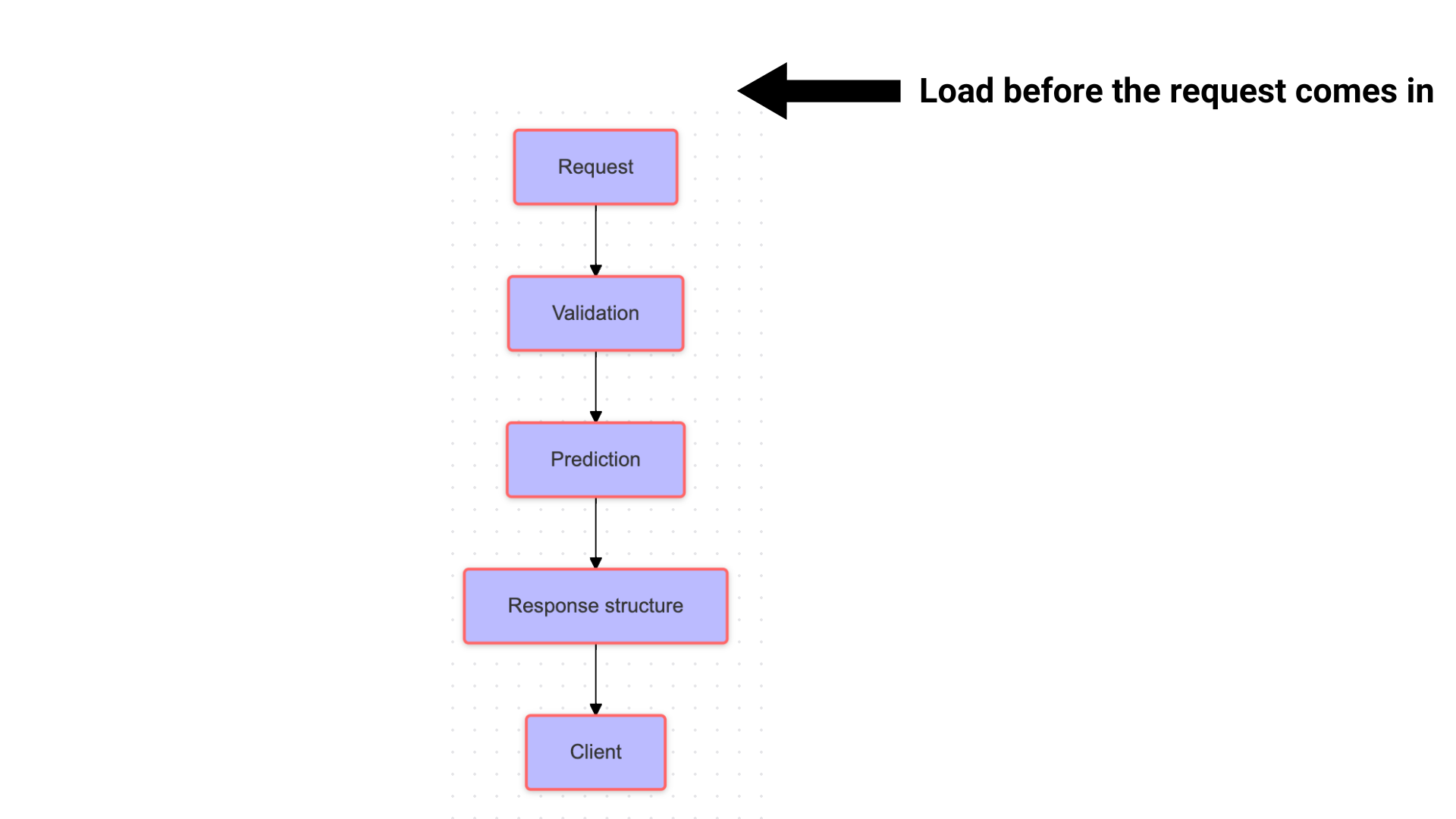

Load models before the request
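The cost of loading per request versus loading once can be sketched with a simulated model. This is a minimal illustration, not course code. `load_weights` is a hypothetical stand-in that sleeps to mimic reading a large model file. The counter `load_calls` records how often that expensive step runs.

```python
import time

load_calls = 0  # counts how many times the expensive load runs


def load_weights() -> dict:
    """Hypothetical stand-in for deserializing a large model file from disk."""
    global load_calls
    load_calls += 1
    time.sleep(0.01)  # simulate slow I/O and deserialization
    return {"positive_words": {"good", "great", "love"}}


def predict_with(model: dict, text: str) -> str:
    # Toy keyword rule in place of a real classifier
    words = set(text.lower().split())
    return "positive" if words & model["positive_words"] else "negative"


def handle_request_reloading(text: str) -> str:
    # Anti-pattern: every request pays the full loading cost again
    return predict_with(load_weights(), text)


MODEL = load_weights()  # loaded once, before any request arrives


def handle_request_preloaded(text: str) -> str:
    # Load-before-the-request pattern: reuse the model already in memory
    return predict_with(MODEL, text)


requests = ["great product", "terrible service", "I love it"]

calls_before = load_calls
reloading_results = [handle_request_reloading(r) for r in requests]
reloading_loads = load_calls - calls_before  # one load per request

calls_before = load_calls
preloaded_results = [handle_request_preloaded(r) for r in requests]
preloaded_loads = load_calls - calls_before  # no loads at request time

print(reloading_loads, preloaded_loads)  # prints: 3 0
```

Both handlers return the same predictions. Only the loading cost differs, and with a real model that cost is paid on every request in the first version.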

Loading the model

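```python
from fastapi import FastAPI

# SentimentAnalyzer is the project's model wrapper class (defined elsewhere);
# it is constructed from the saved model file "trained_model.joblib".
sentiment_model = None

def load_model():
    global sentiment_model  # module-level, so every request reuses the same model
    sentiment_model = SentimentAnalyzer("trained_model.joblib")
    print("Model loaded successfully")

load_model()
```

Output:

```
Model loaded successfully
```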
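To try this loading pattern without the trained `trained_model.joblib` file, `SentimentAnalyzer` can be replaced by a stub. The sketch below is an assumption, not the course's class. It gives the stub a hypothetical `predict(text)` method with a toy keyword rule. It also adds an `is None` guard, so calling `load_model()` twice does not reload the model. The counter `load_count` exists only to make that visible.

```python
from typing import Optional


class SentimentAnalyzer:
    """Hypothetical stub: records the model path and uses a toy keyword rule."""

    def __init__(self, model_path: str):
        self.model_path = model_path  # the real class would deserialize this file
        self.positive_words = {"good", "great", "love"}

    def predict(self, text: str) -> str:
        words = set(text.lower().split())
        return "positive" if words & self.positive_words else "negative"


sentiment_model: Optional[SentimentAnalyzer] = None
load_count = 0  # illustration only: how many times the model was built


def load_model() -> None:
    global sentiment_model, load_count
    if sentiment_model is None:  # guard: load at most once per process
        sentiment_model = SentimentAnalyzer("trained_model.joblib")
        load_count += 1
        print("Model loaded successfully")


def predict(text: str) -> str:
    # Fail loudly if a request arrives before startup finished
    if sentiment_model is None:
        raise RuntimeError("Model not loaded")
    return sentiment_model.predict(text)


load_model()
load_model()  # second call is a no-op: the model is already in memory
print(predict("I love this course"))  # prints: positive
```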

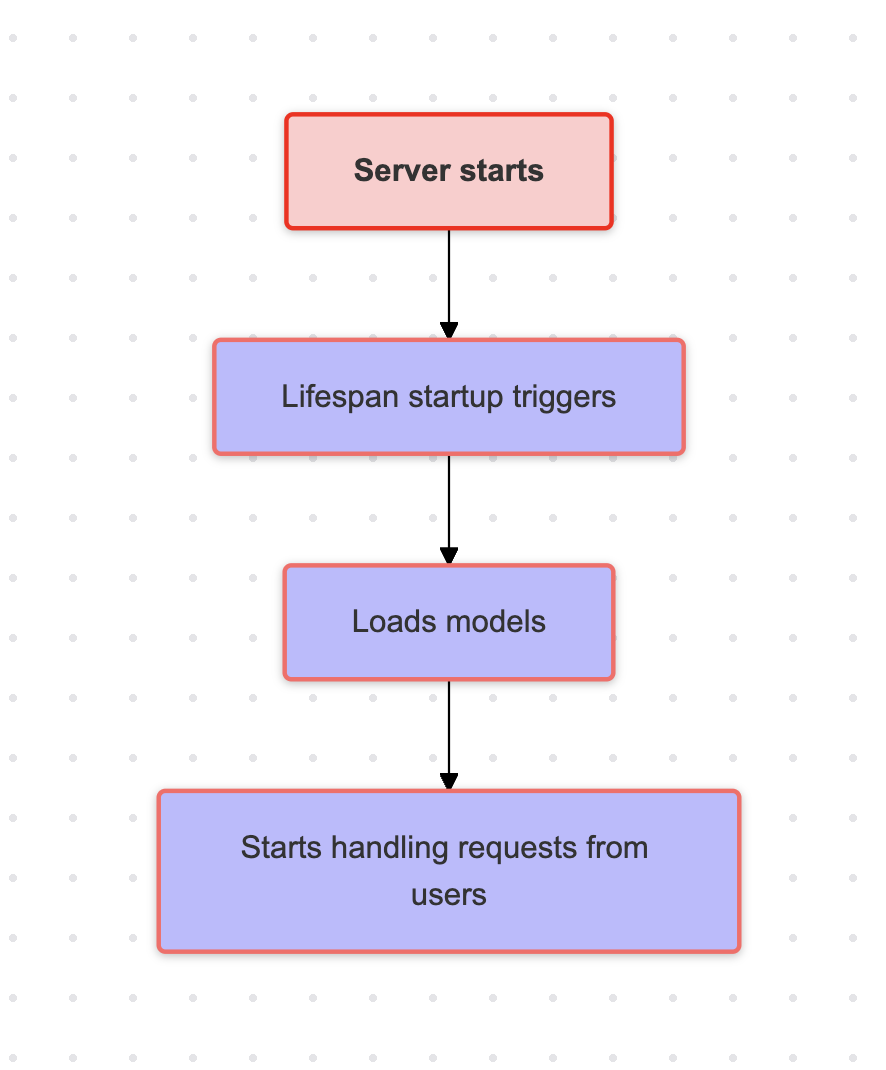

FastAPI lifespan event


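```python
from contextlib import asynccontextmanager

@asynccontextmanager
async def lifespan(app: FastAPI):
    # Startup: load the ML model before the app starts accepting requests
    load_model()
    yield
    # Shutdown: any code placed after yield runs when the application stops

app = FastAPI(lifespan=lifespan)
```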
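The order in which the lifespan function runs can be checked without starting a server. In this stdlib-only sketch, `fake_server` is a hypothetical stand-in for the ASGI server, which enters the lifespan context before serving requests and exits it on shutdown. The model is a placeholder object. The shutdown step that clears it is an added assumption, not part of the slide code.

```python
import asyncio
from contextlib import asynccontextmanager
from typing import Any, Optional

events: list[str] = []
model: Optional[Any] = None


def load_model() -> None:
    global model
    model = object()  # placeholder for SentimentAnalyzer("trained_model.joblib")
    events.append("model loaded")


@asynccontextmanager
async def lifespan(app: Any):
    global model
    # Startup: runs once, before the server accepts requests
    load_model()
    events.append("startup done")
    yield
    # Shutdown (added assumption): release the model when the server stops
    model = None
    events.append("shutdown done")


async def fake_server(app: Any = None) -> None:
    """Hypothetical stand-in for the server that drives the lifespan."""
    async with lifespan(app):
        # Requests would be handled here, while the context is open
        events.append(f"serving requests (model loaded: {model is not None})")
    events.append(f"stopped (model loaded: {model is not None})")


asyncio.run(fake_server())
print(events)
```

The model is already in memory by the time the first request could be served, which is the point of loading it in the lifespan function rather than inside an endpoint.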

Health checks

```python
@app.get("/health")
def health_check():
    # Report whether the model was loaded at startup
    if sentiment_model is not None:
        return {"status": "healthy", "model_loaded": True}
    return {"status": "unhealthy", "model_loaded": False}
```
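As written, `health_check` returns HTTP 200 even when the model is missing, so a monitor that only inspects status codes would still see the instance as ready. One option, sketched here as an assumption rather than the course's approach, is to return 503 Service Unavailable in that case. The helper `health_payload` is hypothetical and stdlib-only. The commented lines show how it could be wired into the FastAPI route by declaring a `Response` parameter and setting its `status_code`.

```python
from http import HTTPStatus
from typing import Any, Optional


def health_payload(model: Optional[Any]) -> tuple[int, dict]:
    """Return (status code, JSON body) for the /health endpoint."""
    if model is not None:
        return int(HTTPStatus.OK), {"status": "healthy", "model_loaded": True}
    # 503 tells probes and load balancers not to route traffic here yet
    return int(HTTPStatus.SERVICE_UNAVAILABLE), {"status": "unhealthy", "model_loaded": False}


# Possible FastAPI wiring (requires `from fastapi import Response`):
#
# @app.get("/health")
# def health_check(response: Response):
#     code, body = health_payload(sentiment_model)
#     response.status_code = code
#     return body

print(health_payload(None))      # prints: (503, {'status': 'unhealthy', 'model_loaded': False})
print(health_payload(object()))  # prints: (200, {'status': 'healthy', 'model_loaded': True})
```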

Curl command:

```shell
curl -X GET \
  "http://localhost:8080/health" \
  -H "accept: application/json"
```

Output:

```json
{
  "status": "healthy",
  "model_loaded": true
}
```
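The same request can be issued from Python, for example in a deployment script that waits for the model to finish loading before sending traffic. This sketch uses only the standard library. `fetch_health`, `is_ready`, and `wait_until_ready` are hypothetical helpers, and the URL, attempt count, and delay are assumptions to adjust for a real deployment.

```python
import json
import time
import urllib.request
from typing import Callable


def fetch_health(url: str = "http://localhost:8080/health", timeout: float = 2.0) -> str:
    """Same request as the curl command: GET with an accept: application/json header."""
    req = urllib.request.Request(url, headers={"accept": "application/json"}, method="GET")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def is_ready(body: str) -> bool:
    # Ready only when the endpoint reports a healthy status AND a loaded model
    data = json.loads(body)
    return isinstance(data, dict) and data.get("status") == "healthy" and data.get("model_loaded") is True


def wait_until_ready(fetch: Callable[[], str] = fetch_health, attempts: int = 10, delay: float = 1.0) -> bool:
    for _ in range(attempts):
        try:
            if is_ready(fetch()):
                return True
        except (OSError, ValueError):
            # OSError covers connection errors and HTTP error statuses (urllib's
            # URLError/HTTPError); ValueError covers a body that is not valid JSON
            pass
        time.sleep(delay)
    return False
```

A deploy script could then call `wait_until_ready()` and only switch traffic to the new instance once it returns `True`.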

Let's practice!
