Rate Limiting

Deploying AI into Production with FastAPI

Matt Eckerle

Software and Data Engineering Leader

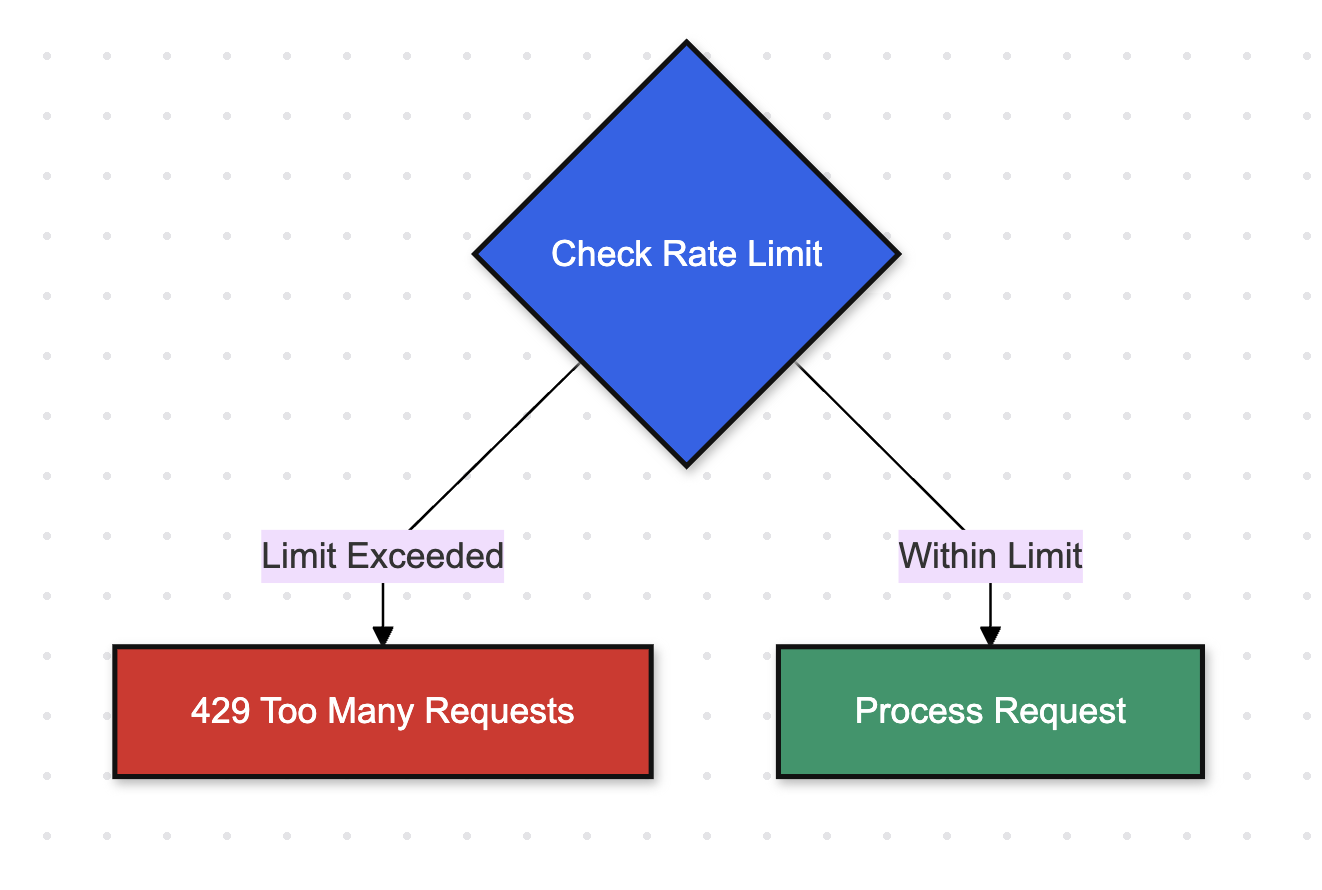

Introducing rate limiting

- Purpose: Limits how many requests each client can make within a time window.

- Response: Returns HTTP 429 ("Too Many Requests") when the limit is exceeded.

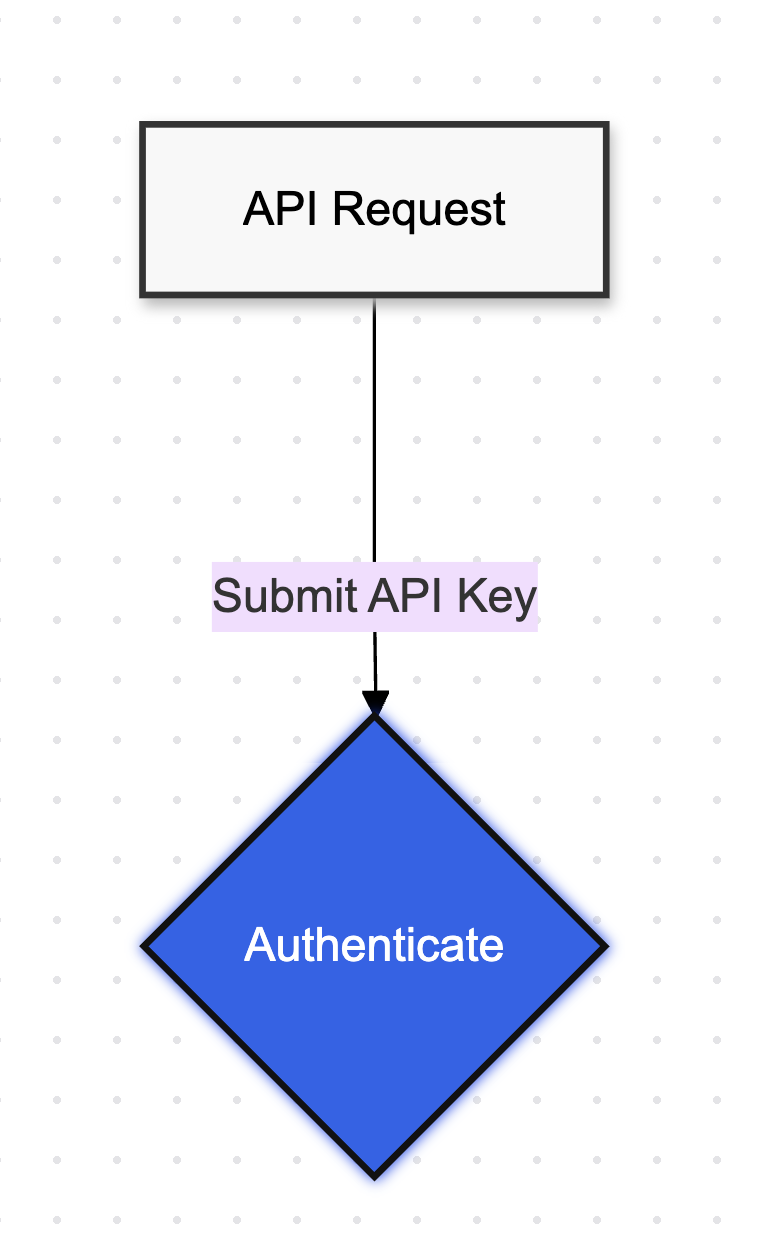

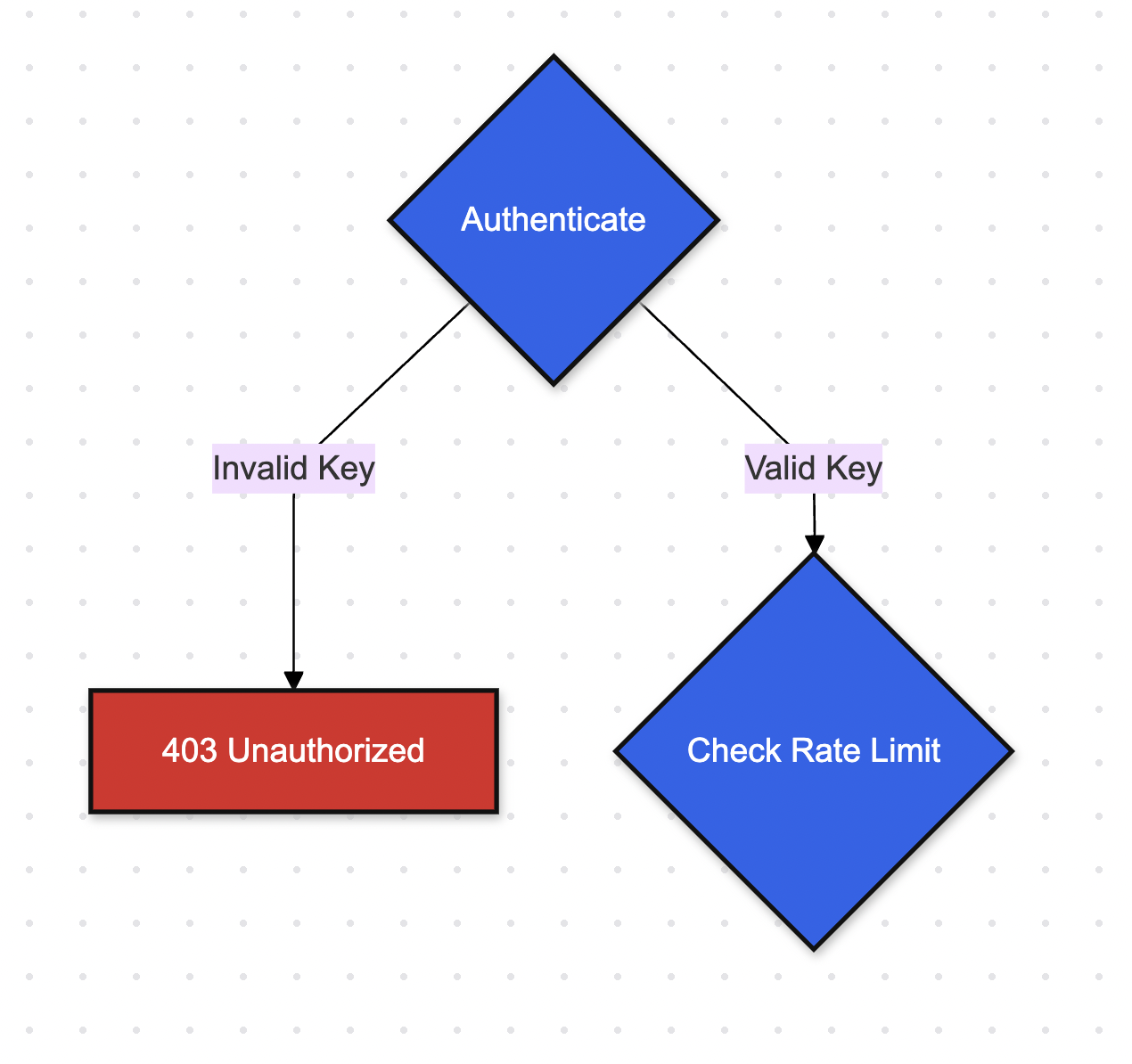

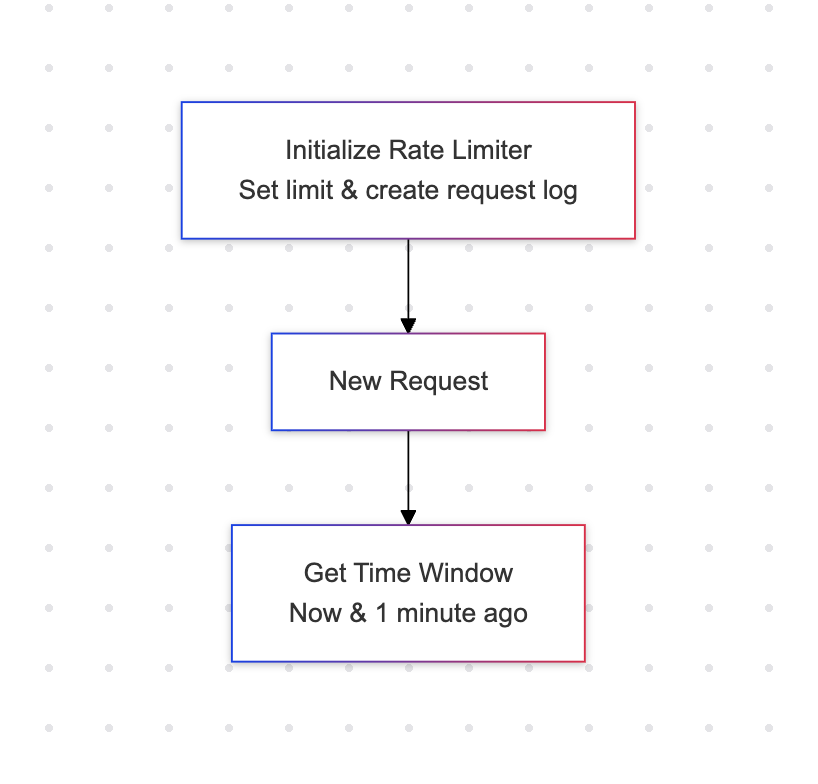

How rate limiting works

Authenticating incoming credentials

Rate limiting check
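The two steps above (authenticate first, then check the rate limit) can be sketched without any web framework. This is a minimal illustration, not the course's implementation: `handle`, `VALID_KEY`, `LIMIT_PER_MIN`, and `request_log` are hypothetical names, and the limit of 3 is chosen only to keep the demo short.

```python
from collections import defaultdict
from datetime import datetime, timedelta

VALID_KEY = "your-secret-key"  # placeholder key, as used later in the slides
LIMIT_PER_MIN = 3              # hypothetical small limit for the demo

request_log = defaultdict(list)  # api_key -> timestamps of recent requests


def handle(api_key: str, now: datetime) -> int:
    """Return the HTTP status code a request would receive."""
    # Step 1: authenticate the incoming credentials.
    if api_key != VALID_KEY:
        return 403
    # Step 2: rate limiting check over the last minute.
    window_start = now - timedelta(minutes=1)
    recent = [t for t in request_log[api_key] if t > window_start]
    if len(recent) >= LIMIT_PER_MIN:
        request_log[api_key] = recent
        return 429
    recent.append(now)
    request_log[api_key] = recent
    # Step 3: the request may proceed to the endpoint.
    return 200


start = datetime(2024, 1, 1, 12, 0, 0)
codes = [handle(VALID_KEY, start + timedelta(seconds=i)) for i in range(4)]
print(codes)                       # [200, 200, 200, 429]
print(handle("wrong-key", start))  # 403
```

Checking the key before the limit means invalid keys never consume rate-limit slots.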

Setting up our API

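    from fastapi import FastAPI, Depends, HTTPException
    from fastapi.security import APIKeyHeader
    from pydantic import BaseModel

    app = FastAPI()
    # SentimentAnalyzer and pkl_file_path are assumed to be defined elsewhere
    sentiment_model = SentimentAnalyzer(pkl_file_path)
    API_KEY_HEADER = APIKeyHeader(name="X-API-Key")
    API_KEY = "your-secret-key"

    # Request body for the /predict endpoint
    class SentimentRequest(BaseModel):
        text: str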

The rate limiter logic

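    from collections import defaultdict
    from datetime import datetime, timedelta

    class RateLimiter:
        def __init__(self, requests_per_min: int = 10):
            self.requests_per_min = requests_per_min
            # Maps each API key to the timestamps of its recent requests
            self.requests = defaultdict(list)

        def is_rate_limited(self, api_key: str) -> tuple[bool, int]:
            ...  # body built up in the following steps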

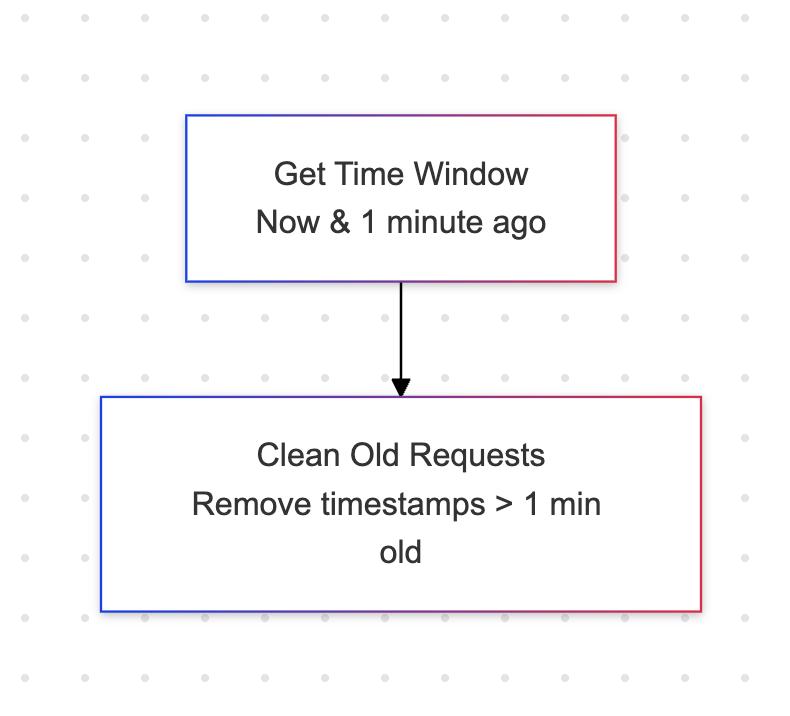

Deleting old requests

    from collections import defaultdict
    from datetime import datetime, timedelta

    class RateLimiter:
        def __init__(self, requests_per_min: int = 10):
            self.requests_per_min = requests_per_min
            self.requests = defaultdict(list)

        def is_rate_limited(self, api_key: str) -> tuple[bool, int]:
            now = datetime.now()
            minute_ago = now - timedelta(minutes=1)
            # Keep only the requests made within the last minute
            self.requests[api_key] = [
                req_time for req_time in self.requests[api_key]
                if req_time > minute_ago
            ]

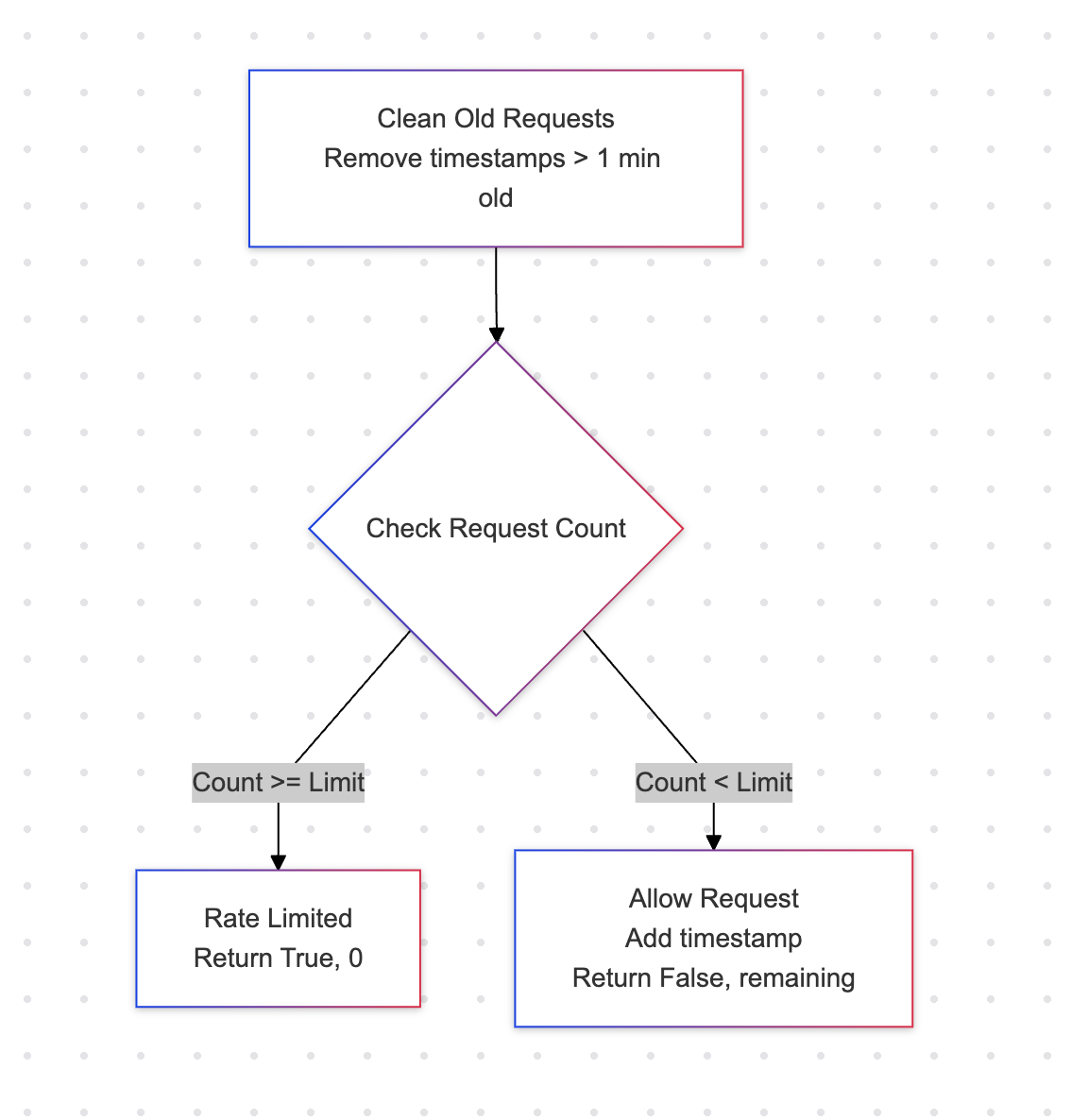

Check request count

    def is_rate_limited(self, api_key: str) -> tuple[bool, int]:
        now = datetime.now()
        minute_ago = now - timedelta(minutes=1)
        self.requests[api_key] = [
            req_time for req_time in self.requests[api_key]
            if req_time > minute_ago
        ]
        recent_requests = len(self.requests[api_key])
        # Limit reached: reject and report zero requests remaining
        if recent_requests >= self.requests_per_min:
            return True, 0
        # Otherwise record this request and report the slots left
        self.requests[api_key].append(now)
        return False, self.requests_per_min - recent_requests - 1
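The steps from the last three slides can be assembled into one runnable sketch. Two details are assumptions rather than part of the slides: the optional `now` argument, added here only so the demo is repeatable, and the remaining-count formula in the final `return`, which is one plausible way to satisfy the `tuple[bool, int]` return type.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Optional


class RateLimiter:
    def __init__(self, requests_per_min: int = 10):
        self.requests_per_min = requests_per_min
        self.requests = defaultdict(list)  # api_key -> request timestamps

    def is_rate_limited(
        self, api_key: str, now: Optional[datetime] = None
    ) -> tuple[bool, int]:
        # `now` is a hypothetical parameter for deterministic testing.
        now = now or datetime.now()
        minute_ago = now - timedelta(minutes=1)
        # Delete requests older than one minute.
        self.requests[api_key] = [
            t for t in self.requests[api_key] if t > minute_ago
        ]
        recent = len(self.requests[api_key])
        if recent >= self.requests_per_min:
            return True, 0
        # Record this request and report how many slots are left.
        self.requests[api_key].append(now)
        return False, self.requests_per_min - recent - 1


limiter = RateLimiter(requests_per_min=2)
t0 = datetime(2024, 1, 1, 12, 0, 0)
print(limiter.is_rate_limited("k", t0))                          # (False, 1)
print(limiter.is_rate_limited("k", t0 + timedelta(seconds=10)))  # (False, 0)
print(limiter.is_rate_limited("k", t0 + timedelta(seconds=20)))  # (True, 0)
print(limiter.is_rate_limited("k", t0 + timedelta(seconds=61)))  # (False, 0)
```

In the last call the first request has aged out of the one-minute window, so a slot is free again. Rejected requests are not recorded, so a blocked client does not extend its own lockout.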

Add rate limit check

    rate_limiter = RateLimiter(requests_per_min=10)

    def test_api_key(api_key: str = Depends(API_KEY_HEADER)):
        # Reject unknown keys before touching the rate limiter
        if api_key != API_KEY:
            raise HTTPException(
                status_code=403,
                detail="Invalid API key"
            )
        is_limited, _ = rate_limiter.is_rate_limited(api_key)
        if is_limited:
            raise HTTPException(
                status_code=429,
                detail="Rate limit exceeded. Please try again later."
            )
        return api_key

Apply rate limit to endpoint

    @app.post("/predict")
    def predict_sentiment(
        request: SentimentRequest,
        api_key: str = Depends(test_api_key)
    ):
        result = sentiment_model(request.text)
        # Note: this second call also records a request for this key
        _, requests_remaining = rate_limiter.is_rate_limited(api_key)
        return {
            "text": request.text,
            "sentiment": result[0]["label"].lower(),
            "confidence": result[0]["score"],
            "requests_remaining": requests_remaining
        }
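Because `is_rate_limited` records a request every time it is called, calling it once in the dependency and again in the endpoint uses up two slots per `/predict` call. One possible alternative, sketched here under assumptions, is a read-only helper that counts the free slots without recording anything. `remaining_requests` is a hypothetical function, not part of the course code.

```python
from datetime import datetime, timedelta


def remaining_requests(timestamps: list, limit: int, now: datetime) -> int:
    """Count unused slots in the last minute without recording a request."""
    minute_ago = now - timedelta(minutes=1)
    recent = sum(1 for t in timestamps if t > minute_ago)
    return max(0, limit - recent)


t0 = datetime(2024, 1, 1, 12, 0, 0)
log = [t0, t0 + timedelta(seconds=5), t0 + timedelta(seconds=30)]
print(remaining_requests(log, limit=10, now=t0 + timedelta(seconds=40)))  # 7
print(remaining_requests(log, limit=10, now=t0 + timedelta(seconds=70)))  # 9
print(len(log))  # 3 (the log itself is unchanged)
```

In the endpoint, such a helper could be called with `rate_limiter.requests[api_key]` and `rate_limiter.requests_per_min` in place of the second `is_rate_limited` call.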

Send request 11 times:

    curl -X POST "http://localhost:8000/predict" \
      -H "Content-Type: application/json" \
      -H "X-API-Key: your-secret-key" \
      -d '{"text": "I love this product"}'

Output:

    {"detail":"Rate limit exceeded. Please try again later."}
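The repeated curl call can also be scripted. The sketch below uses only the standard library and assumes the API is running at the same local URL as above. `post_once` and `send_many` are hypothetical helper names, and the `send` parameter exists only so the loop can be exercised without a live server.

```python
import json
import urllib.error
import urllib.request

URL = "http://localhost:8000/predict"  # same URL as the curl command


def post_once(text: str, api_key: str) -> int:
    """Send one POST request and return the HTTP status code."""
    req = urllib.request.Request(
        URL,
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={"Content-Type": "application/json", "X-API-Key": api_key},
        method="POST",
    )
    try:
        with urllib.request.urlopen(req) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # Error responses such as 403 or 429 arrive as HTTPError.
        return err.code


def send_many(n: int, send=post_once) -> list:
    """Call `send` n times and collect the status codes."""
    return [send("I love this product", "your-secret-key") for _ in range(n)]


# With the API running locally, this would print one status code per request:
# print(send_many(11))
```

Once the limit is reached, the remaining entries in the list should be 429.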

Let's practice!
