Markov Chain analysis

Advanced Probability: Uncertainty in Data

Maarten Van den Broeck

Senior Content Developer at DataCamp

What are Markov Chains?

- Model systems that move between different states

- Transitions between states happen with given probabilities

- Key idea: the next state depends only on the current state (the Markov property)

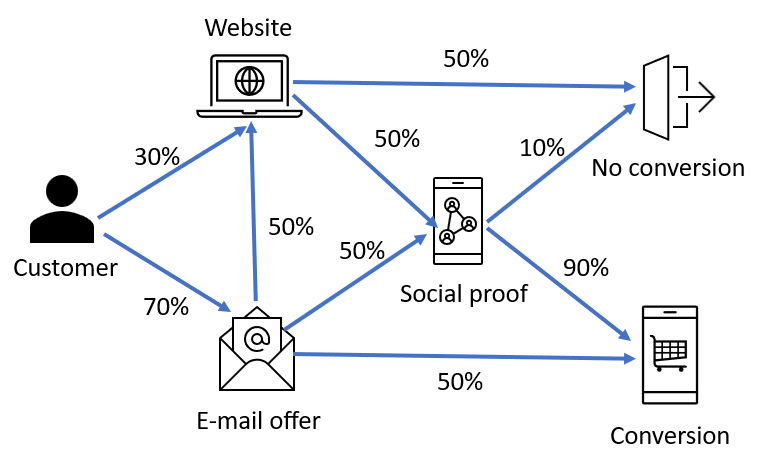

- Key application: understanding customer journeys
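The "only the current state matters" idea can be made concrete with a small simulation. The minimal Python sketch below uses the sunny/cloudy transition probabilities from the transition matrix later in this section. The dictionary layout and the `simulate` helper are illustrative assumptions, not part of the course material.

```python
import random

# Two-state weather chain (probabilities from the transition matrix slide).
# Each inner dict lists P(next state | current state).
TRANSITIONS = {
    "Sunny": {"Sunny": 0.8, "Cloudy": 0.2},
    "Cloudy": {"Sunny": 0.4, "Cloudy": 0.6},
}


def simulate(start, n_steps, seed=None):
    """Return a path of n_steps + 1 states, beginning at `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(n_steps):
        # Markov property: only the most recent state is consulted.
        row = TRANSITIONS[path[-1]]
        next_state = rng.choices(list(row), weights=list(row.values()))[0]
        path.append(next_state)
    return path


if __name__ == "__main__":
    path = simulate("Sunny", 100_000, seed=42)
    share_sunny = path.count("Sunny") / len(path)
    print(f"Share of sunny days: {share_sunny:.3f}")
```

Over a long run, the share of sunny days in the simulated path should settle close to the 66.67% steady-state value quoted for this chain.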

The elements of a Markov Chain

- States: different conditions or stages


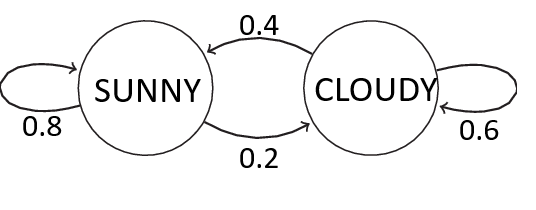

- Transitions: probabilities of moving from one state to another


- Steady-state probabilities: long-run probabilities of being in each state

- Calculated using statistical software or BI tools

- E.g., steady-state probability of a sunny day: 66.67% (i.e., 2/3)
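For a two-state chain, the 66.67% figure can also be checked by hand. Writing $\pi_S$ and $\pi_C$ for the long-run probabilities of sunny and cloudy days (notation introduced here) and using the 80%/20% and 40%/60% rows of the weather transition matrix, the balance condition for sunny days gives:

$$
\begin{aligned}
\pi_S &= 0.8\,\pi_S + 0.4\,\pi_C, \qquad \pi_S + \pi_C = 1 \\
0.2\,\pi_S &= 0.4\,\pi_C \;\Rightarrow\; \pi_S = 2\,\pi_C \\
\pi_S &= \tfrac{2}{3} \approx 66.67\%, \qquad \pi_C = \tfrac{1}{3} \approx 33.33\%
\end{aligned}
$$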

Transition matrix

| From \ To | Sunny | Cloudy |
|---|---|---|
| Sunny | 80% | 20% |
| Cloudy | 40% | 60% |
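The slides leave the steady-state calculation to statistical software or BI tools. As one possible approach, the pure-Python sketch below uses power iteration (chosen here for illustration; solving the linear balance equations or computing an eigenvector works too): it repeatedly multiplies a probability distribution by the transition matrix until the distribution stops changing. The `steady_state` helper and its tolerance are assumptions, and convergence assumes the chain is irreducible and aperiodic, which holds for this weather example.

```python
# Weather transition matrix from the table: rows = current state,
# columns = next state, both in the order [Sunny, Cloudy].
P = [
    [0.8, 0.2],
    [0.4, 0.6],
]


def steady_state(matrix, tol=1e-12, max_iter=10_000):
    """Approximate the stationary distribution by power iteration.

    Repeatedly applies pi <- pi * P (row vector times matrix) until
    successive distributions differ by less than `tol`.
    """
    n = len(matrix)
    pi = [1.0 / n] * n  # start from a uniform distribution
    for _ in range(max_iter):
        new_pi = [sum(pi[i] * matrix[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(new_pi, pi)) < tol:
            return new_pi
        pi = new_pi
    raise RuntimeError("power iteration did not converge")


if __name__ == "__main__":
    sunny, cloudy = steady_state(P)
    print(f"Sunny: {sunny:.2%}, Cloudy: {cloudy:.2%}")
```

Run as a script, this prints `Sunny: 66.67%, Cloudy: 33.33%`, matching the steady-state value on the previous slide.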

Applications of Markov Chains

- Customer retention: identifying transition probabilities between engagement and churn states

- Conversion optimization: progression through a sales funnel

- Product recommendation: predicting future customer interactions

Example: e-commerce$^1$

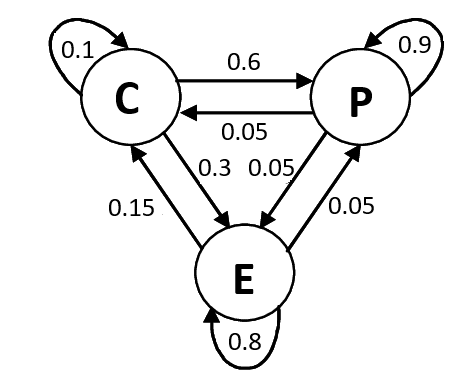

1 C for Coupon, P for Purchase, E for Exit

Example: e-commerce

| From \ To | Used a (new) coupon | Made a (new) purchase | Exited |
|---|---|---|---|
| Used a coupon | 10% | 60% | 30% |
| Made a purchase | 5% | 90% | 5% |
| Exited | 15% | 5% | 80% |
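The same matrix can be used to look several steps ahead along a customer journey. The minimal pure-Python sketch below starts from a known state and propagates the probability distribution one transition at a time, assuming (as in any Markov chain) that a customer's next action depends only on their last one. The short state labels and the helpers `step` and `forecast` are hypothetical names chosen for illustration.

```python
STATES = ["Coupon", "Purchase", "Exit"]

# E-commerce transition matrix from the table: rows = current state,
# columns = next state, both in STATES order.
P = [
    [0.10, 0.60, 0.30],  # used a coupon
    [0.05, 0.90, 0.05],  # made a purchase
    [0.15, 0.05, 0.80],  # exited
]


def step(dist, matrix):
    """Propagate a probability distribution over states by one transition."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


def forecast(start_state, n_steps, matrix=P):
    """Return the distribution over STATES after n_steps from start_state."""
    dist = [1.0 if s == start_state else 0.0 for s in STATES]
    for _ in range(n_steps):
        dist = step(dist, matrix)
    return dist


if __name__ == "__main__":
    for n in (1, 2, 5):
        probs = forecast("Coupon", n)
        print(n, {s: round(p, 3) for s, p in zip(STATES, probs)})
```

For a customer who just used a coupon, for example, the two-step probabilities work out to 8.5% coupon, 61.5% purchase and 30% exit.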


