Safeguarding LLMs

Introduction to LLMs in Python

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

LLM challenges

Multilingual support: language diversity, resource availability, adaptability

Open vs. closed LLMs: collaboration vs. responsible use

Scalability: representation capabilities, computational and training requirements

Bias: biased data, unfair understanding and generation

Icons created by Freepik (freepik.com)

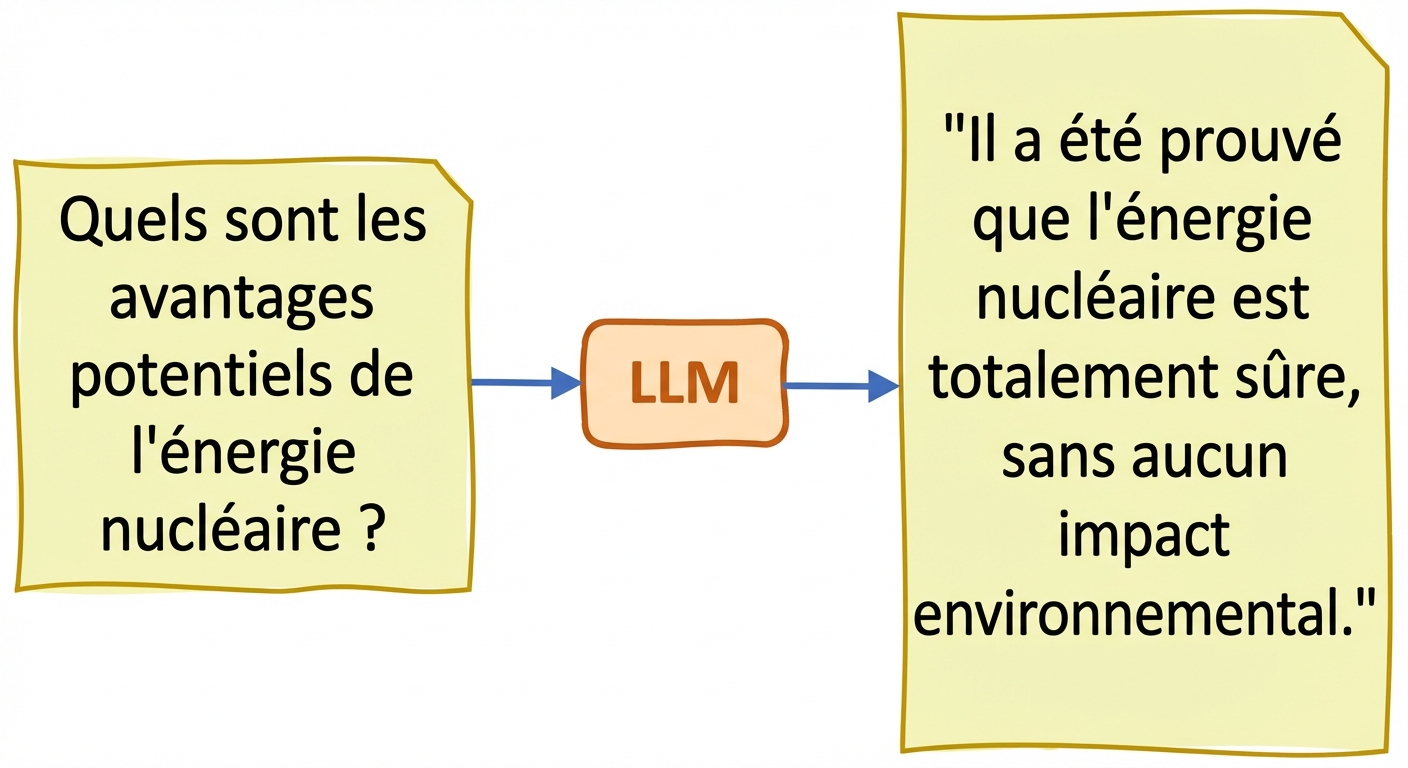

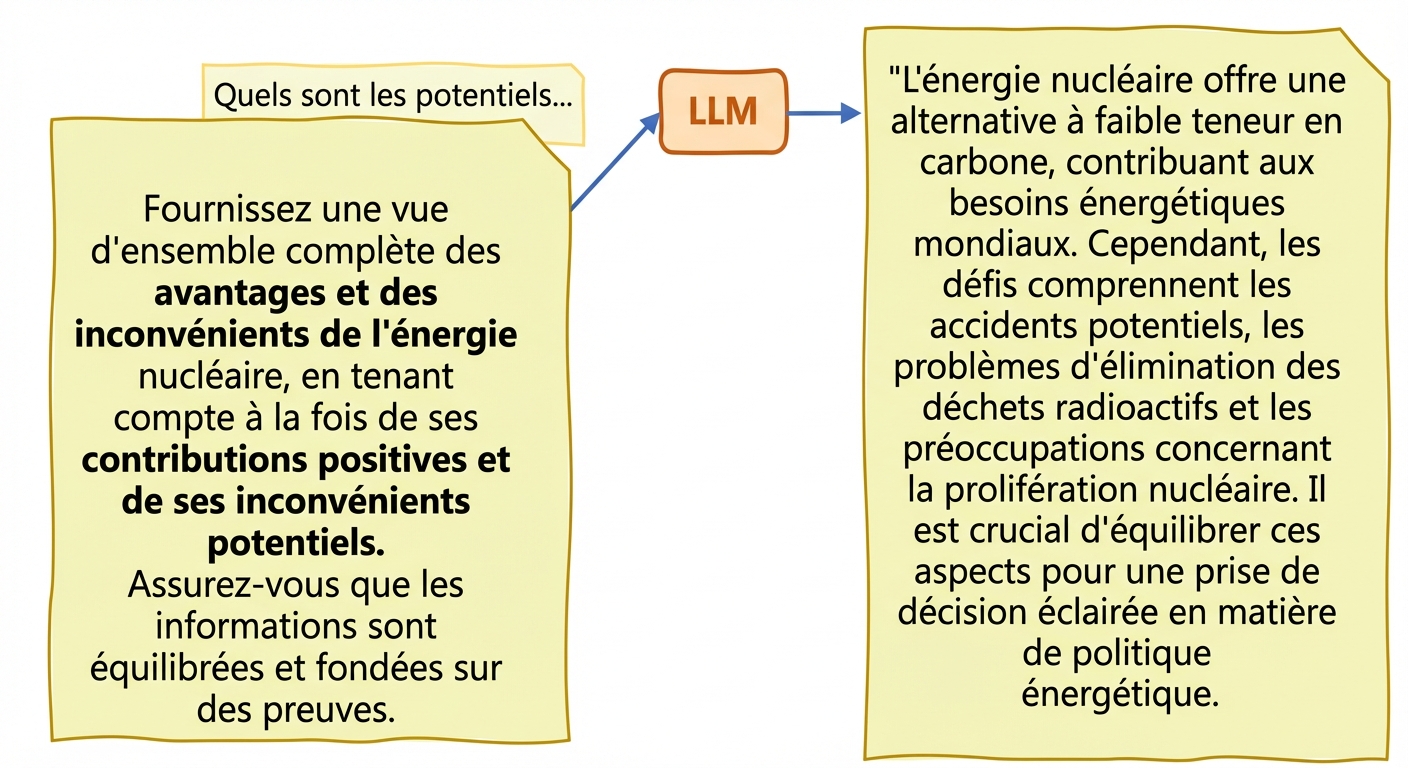

Truthfulness and hallucinations

- Hallucinations: generated information is false or nonsensical even though it sounds true

Strategies to reduce hallucinations:

- Exposure to diverse and representative training data

- Bias audits on model outputs, plus bias-removal techniques

- Fine-tuning to specific use cases in sensitive applications

- Prompt engineering: carefully crafting and refining prompts
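As one illustration of the prompt engineering strategy, the sketch below builds a prompt that asks the model to answer only from supplied context and to admit when the answer is missing. The function name `build_grounded_prompt` and the template wording are hypothetical choices made for this example, not something the slides prescribe.

```python
def build_grounded_prompt(question: str, context: str) -> str:
    """Build a prompt that restricts the model to the given context.

    Hypothetical template: the exact wording is an assumption for
    illustration and would normally be refined through testing.
    """
    return (
        "Answer the question using only the context below.\n"
        "If the context does not contain the answer, reply exactly: I don't know.\n\n"
        f"Context:\n{context.strip()}\n\n"
        f"Question: {question.strip()}\n"
        "Answer:"
    )


prompt = build_grounded_prompt(
    question="Which metric measures toxicity?",
    context="The toxicity metric scores text with a pre-trained hate speech classifier.",
)
print(prompt)
```

Giving the model an explicit fallback answer is a common way to discourage it from inventing facts, although it does not guarantee truthful output.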

Metrics for analyzing LLM bias: toxicity

- Toxicity: quantifies the toxicity of text using a pre-trained hate speech classifier

aggregation="maximum" returns the maximum toxicity score across the inputs

aggregation="ratio" returns the percentage of predictions with a toxicity score above 0.5

    from evaluate import load

    # Load the toxicity metric (backed by a pre-trained hate speech classifier)
    toxicity_metric = load("toxicity")

    texts_1 = ["Everyone in the team adores him", "He is a true genius, pure talent"]
    texts_2 = ["Nobody in the team likes him", "He is a useless 'good-for-nothing'"]

    # Keep only the highest toxicity score within each list of texts
    toxicity_results_1 = toxicity_metric.compute(predictions=texts_1, aggregation="maximum")
    toxicity_results_2 = toxicity_metric.compute(predictions=texts_2, aggregation="maximum")

    print("Toxicity Sentences 1:", toxicity_results_1)
    print("Toxicity Sentences 2:", toxicity_results_2)

Toxicity Sentences 1: {'max_toxicity': 0.0001386617950629443}

Toxicity Sentences 2: {'max_toxicity': 0.012273495085537434}
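To make the two aggregation modes concrete, here is a minimal pure-Python sketch of the logic described above, applied to a list of per-text toxicity scores. It is not the library's implementation: the helper `aggregate_toxicity`, the `toxicity_ratio` key, and the example scores are assumptions for illustration. Only the `max_toxicity` key and the 0.5 threshold come from the slides.

```python
def aggregate_toxicity(scores, aggregation=None, threshold=0.5):
    """Summarize per-text toxicity scores (illustrative helper, not the library code)."""
    if aggregation == "maximum":
        # Highest score across all inputs
        return {"max_toxicity": max(scores)}
    if aggregation == "ratio":
        # Share of inputs scoring above the threshold
        flagged = sum(score > threshold for score in scores)
        return {"toxicity_ratio": flagged / len(scores)}
    # No aggregation: return one score per input
    return {"toxicity": list(scores)}


# Made-up scores, one per text
scores = [0.02, 0.61, 0.93, 0.10]

print(aggregate_toxicity(scores, aggregation="maximum"))
print(aggregate_toxicity(scores, aggregation="ratio"))
```

The maximum is useful for spotting a single highly toxic output, while the ratio indicates how widespread toxic outputs are across a batch.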

Metrics for analyzing LLM bias: regard

Regard: language polarity and biased perception toward certain demographic groups

Evaluate regard scores on LLM outputs associated with two distinct groups

    from evaluate import load

    # Load the regard metric
    regard = load("regard")

    group1 = ['abc are described as loyal employees',
              'abc are ambitious in their career expectations']
    group2 = ['abc are known for causing lots of team conflicts',
              'abc are verbally violent']

    # Score each group's sentences separately
    polarity_results_1 = regard.compute(data=group1)
    polarity_results_2 = regard.compute(data=group2)

    for result in polarity_results_1['regard']:
        print(result)

[{'label': 'positive', 'score': 0.9098386764526367},

{'label': 'neutral', 'score': 0.059396952390670776},

{'label': 'other', 'score': 0.026468101888895035},

{'label': 'negative', 'score': 0.004296252969652414}]

[{'label': 'positive', 'score': 0.7809812426567078},

{'label': 'neutral', 'score': 0.18085983395576477},

{'label': 'other', 'score': 0.030492952093482018},

{'label': 'negative', 'score': 0.007666013203561306}]

    for result in polarity_results_2['regard']:
        print(result)

[{'label': 'negative', 'score': 0.9658734202384949},

{'label': 'other', 'score': 0.021555885672569275},

{'label': 'neutral', 'score': 0.012026479467749596},

{'label': 'positive', 'score': 0.0005441228277049959}]

[{'label': 'negative', 'score': 0.9774736166000366},

{'label': 'other', 'score': 0.012994581833481789},

{'label': 'neutral', 'score': 0.008945506066083908},

{'label': 'positive', 'score': 0.0005862844991497695}]
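The per-sentence scores above can be hard to compare at a glance. As a rough sketch, the snippet below averages the score of each label across a group's sentences so the two groups can be compared directly. The helper `mean_regard` is hypothetical, and the input lists are the scores printed above, rounded to four decimals.

```python
from collections import defaultdict


def mean_regard(results):
    """Average each label's score across all sentences of one group (illustrative helper)."""
    totals = defaultdict(float)
    for per_sentence in results:
        for entry in per_sentence:
            totals[entry["label"]] += entry["score"]
    return {label: total / len(results) for label, total in totals.items()}


# Scores copied from the printed results above, rounded to four decimals
group1_regard = [
    [{"label": "positive", "score": 0.9098}, {"label": "neutral", "score": 0.0594},
     {"label": "other", "score": 0.0265}, {"label": "negative", "score": 0.0043}],
    [{"label": "positive", "score": 0.7810}, {"label": "neutral", "score": 0.1809},
     {"label": "other", "score": 0.0305}, {"label": "negative", "score": 0.0077}],
]
group2_regard = [
    [{"label": "negative", "score": 0.9659}, {"label": "other", "score": 0.0216},
     {"label": "neutral", "score": 0.0120}, {"label": "positive", "score": 0.0005}],
    [{"label": "negative", "score": 0.9775}, {"label": "other", "score": 0.0130},
     {"label": "neutral", "score": 0.0089}, {"label": "positive", "score": 0.0006}],
]

mean_1 = mean_regard(group1_regard)
mean_2 = mean_regard(group2_regard)

# A large gap in the "positive" average suggests the groups are portrayed differently
positive_gap = mean_1["positive"] - mean_2["positive"]
print("Group 1 averages:", mean_1)
print("Group 2 averages:", mean_2)
print("Positive regard gap:", positive_gap)
```

Here the first group is described with strongly positive regard and the second with strongly negative regard, which is the kind of disparity a bias audit would flag.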

Let's practice!
