Using pre-trained LLMs

Introduction to LLMs in Python

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

Language understanding

Language generation

Text generation

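from transformers import pipeline

# Load a small pre-trained text-generation model
generator = pipeline(task="text-generation", model="distilgpt2")

prompt = "The Gion neighborhood in Kyoto is famous for"

# Reuse the end-of-sequence token as the padding token
output = generator(prompt, max_length=100, pad_token_id=generator.tokenizer.eos_token_id)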

- Coherent

- Meaningful

- Human-like text

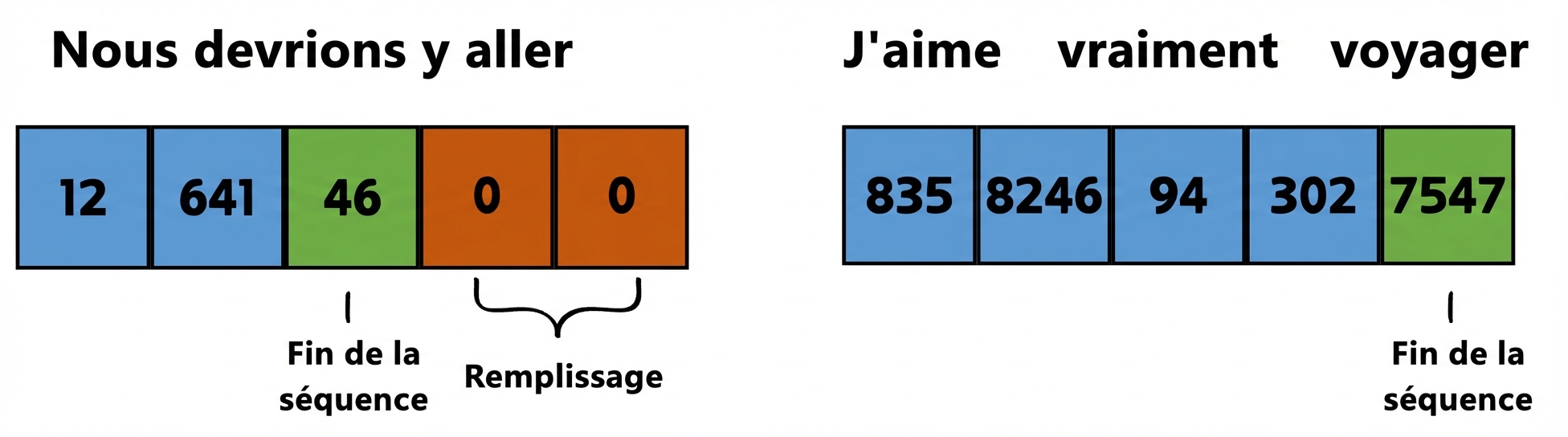

eos_token_id: end-of-sequence token ID
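To make the stopping rule concrete, here is a toy sketch in plain Python, not the transformers implementation: a loop appends one token at a time and stops once the sequence reaches max_length (which, for decoder-only models like distilgpt2, counts the prompt tokens too) or the end-of-sequence token appears. The names generate and make_scripted_model, and all token IDs, are hypothetical; the scripted "model" just replays a fixed list instead of predicting anything.

```python
from typing import Callable, Iterable, List

EOS_TOKEN_ID = 0  # hypothetical end-of-sequence ID, chosen for illustration


def generate(
    prompt_ids: List[int],
    next_token: Callable[[List[int]], int],
    max_length: int,
    eos_token_id: int = EOS_TOKEN_ID,
) -> List[int]:
    """Append tokens until max_length (prompt included) or EOS is reached."""
    ids = list(prompt_ids)
    while len(ids) < max_length:
        token = next_token(ids)
        ids.append(token)
        if token == eos_token_id:
            break  # the model signalled the end of meaningful text
    return ids


def make_scripted_model(tokens: Iterable[int]) -> Callable[[List[int]], int]:
    """Hypothetical stand-in for a model: returns the next token from a fixed list."""
    it = iter(tokens)
    return lambda ids: next(it)


# Stops at the EOS token (0) before hitting max_length
print(generate([5, 6], make_scripted_model([7, 8, 0, 9]), max_length=10))
# Stops at max_length=4 because no EOS token is produced in time
print(generate([5, 6], make_scripted_model([7, 8, 9, 10]), max_length=4))
```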

Text generation

- Padding: adding tokens to fill out a sequence

- pad_token_id: fills any extra space up to max_length

- The parameter generator.tokenizer.eos_token_id marks the end of meaningful text, learned during training

- The model generates until it reaches max_length or produces the end-of-sequence token (passed here as pad_token_id)

- truncation=True: cuts inputs that are longer than the maximum length
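A minimal sketch of what padding and truncation do to a list of token IDs, assuming right-side padding and right-side truncation. pad_or_truncate is a hypothetical helper, not a tokenizer method; 50256 is the end-of-sequence ID of the GPT-2 tokenizer that distilgpt2 uses, reused as the padding token just as the pipeline call does with pad_token_id.

```python
from typing import List

GPT2_EOS_TOKEN_ID = 50256  # GPT-2 / distilgpt2 end-of-sequence ID, used as padding here


def pad_or_truncate(
    ids: List[int], max_length: int, pad_token_id: int, truncation: bool = True
) -> List[int]:
    """Return a sequence of exactly max_length token IDs (hypothetical helper)."""
    if len(ids) > max_length:
        if not truncation:
            raise ValueError("sequence is longer than max_length and truncation=False")
        return ids[:max_length]  # keep the first max_length tokens
    # Fill the remaining positions with the padding token
    return ids + [pad_token_id] * (max_length - len(ids))


print(pad_or_truncate([10, 11, 12], max_length=5, pad_token_id=GPT2_EOS_TOKEN_ID))
print(pad_or_truncate([1, 2, 3, 4, 5, 6], max_length=4, pad_token_id=GPT2_EOS_TOKEN_ID))
```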

Text generation

from transformers import pipeline

generator = pipeline(task="text-generation", model="distilgpt2")
prompt = "The Gion neighborhood in Kyoto is famous for"
output = generator(prompt, max_length=100, pad_token_id=generator.tokenizer.eos_token_id)

# The pipeline returns a list of dicts; the text is under "generated_text"
print(output[0]["generated_text"])

The Gion neighborhood in Kyoto is famous for its many colorful green forests, such as the Red Hill, the Red River and the Red River. The Gion neighborhood is home to the world's tallest trees.

- The output may be suboptimal if the prompt is vague

Guiding the output

from transformers import pipeline

generator = pipeline(task="text-generation", model="distilgpt2")

review = "This book was great. I enjoyed the plot twist in Chapter 10."
response = "Dear reader, thank you for your review."

# Give the model a structured prompt that it should continue
prompt = f"Book review: {review} Book shop response to the review: {response}"

output = generator(prompt, max_length=100, pad_token_id=generator.tokenizer.eos_token_id)
print(output[0]["generated_text"])

Dear reader, thank you for your review. We'd like to thank you for your reading!
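By default the text-generation pipeline returns the prompt followed by the continuation. The sketch below uses hypothetical helper names to build the structured prompt from this slide and keep only the newly generated part; passing return_full_text=False in the pipeline call should give the same result without post-processing. The generated text in the usage lines is made up, not real model output.

```python
def build_review_prompt(review: str, response: str) -> str:
    """Assemble the review/response template used on the slide (hypothetical helper)."""
    return f"Book review: {review} Book shop response to the review: {response}"


def continuation_only(generated_text: str, prompt: str) -> str:
    """Strip the echoed prompt so only the model's continuation remains."""
    if generated_text.startswith(prompt):
        return generated_text[len(prompt):].lstrip()
    return generated_text  # prompt not echoed: return the text unchanged


prompt = build_review_prompt("Great read.", "Dear reader,")
fake_generated = prompt + " thank you!"  # invented text standing in for model output
print(continuation_only(fake_generated, prompt))
```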

Language translation

- Hugging Face provides a comprehensive list of translation tasks and models

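from transformers import pipeline

# English-to-Spanish translation model from Helsinki-NLP
translator = pipeline(task="translation_en_to_es", model="Helsinki-NLP/opus-mt-en-es")

text = "Walking amid Gion's Machiya wooden houses was a mesmerizing experience."
output = translator(text, clean_up_tokenization_spaces=True)

# Translation pipelines return the result under "translation_text"
print(output[0]["translation_text"])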

Caminar entre las casas de madera Machiya de Gion fue una experiencia fascinante.
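Both the task string and the Helsinki-NLP model name on this slide encode the language pair. The helpers below are hypothetical conveniences that reproduce that naming pattern; not every pair has a published opus-mt model, so check the model page on the Hugging Face Hub before relying on a generated name.

```python
def translation_task(src: str, tgt: str) -> str:
    """Build a pipeline task string such as "translation_en_to_es" (hypothetical helper)."""
    return f"translation_{src}_to_{tgt}"


def opus_mt_model_id(src: str, tgt: str) -> str:
    """Build a Helsinki-NLP opus-mt model ID (hypothetical helper; pair may not exist)."""
    return f"Helsinki-NLP/opus-mt-{src}-{tgt}"


print(translation_task("en", "es"), opus_mt_model_id("en", "es"))

# Possible usage (downloads a model, so not run here):
# from transformers import pipeline
# translator = pipeline(task=translation_task("en", "fr"), model=opus_mt_model_id("en", "fr"))
```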

Let's practice!
