Creating customer call transcripts

Multi-Modal Systems with the OpenAI API

James Chapman

Curriculum Manager, DataCamp

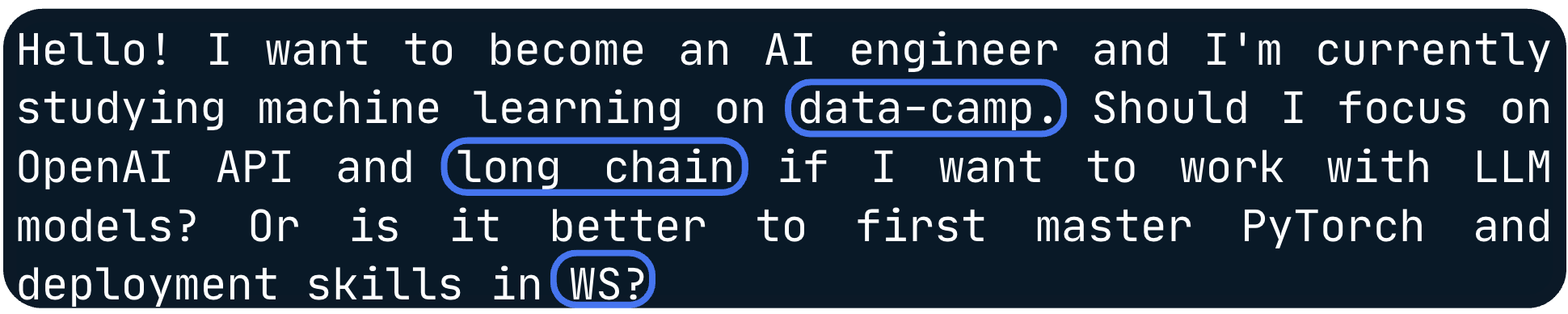

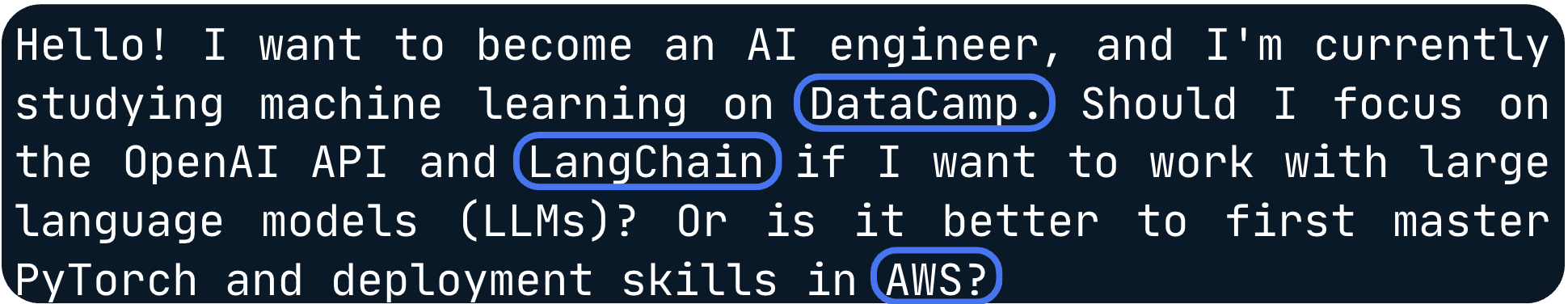

Case study introduction


- AI Engineer at DataCamp

- Handles voice messages

- Speech-based customer support chatbot


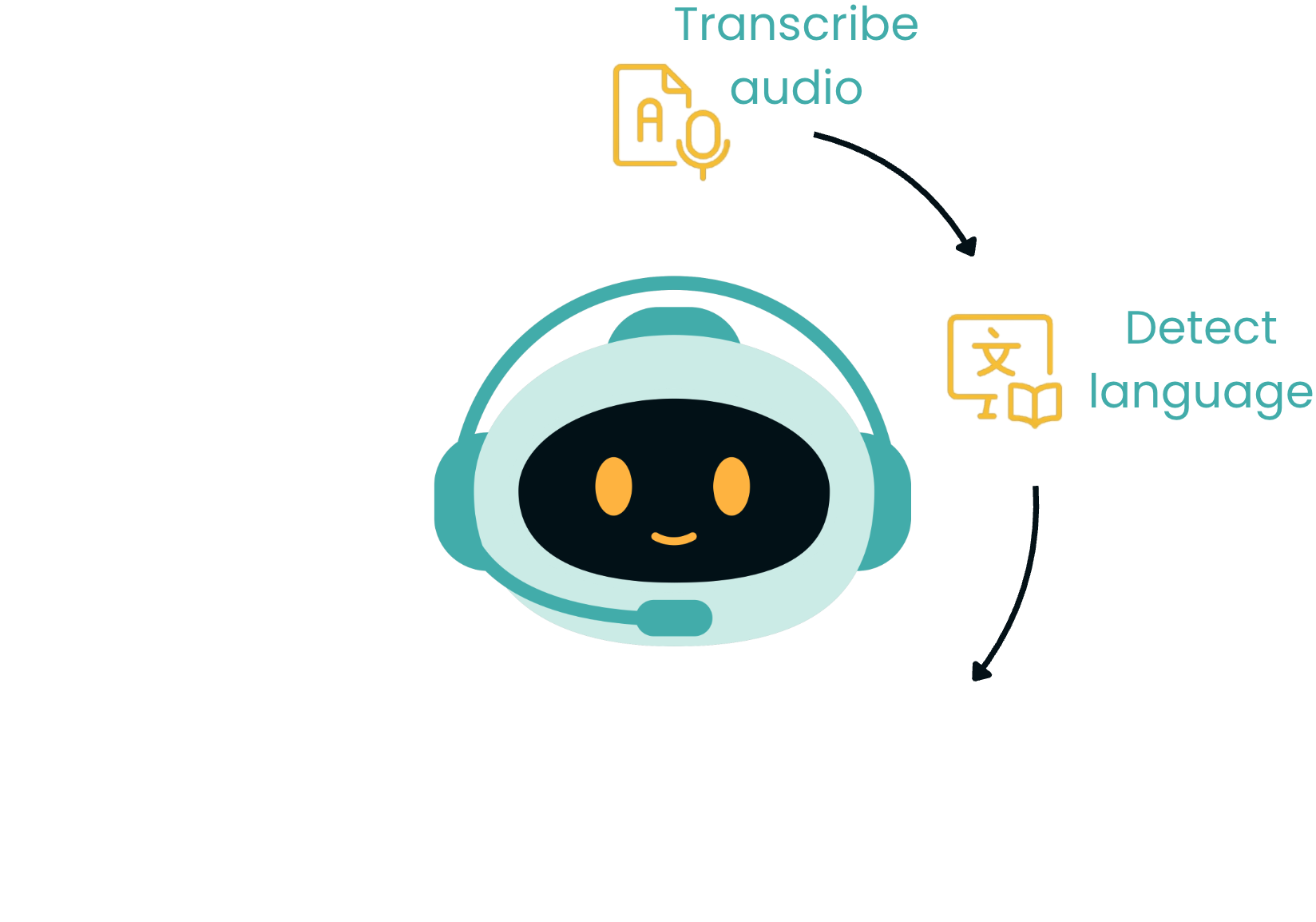

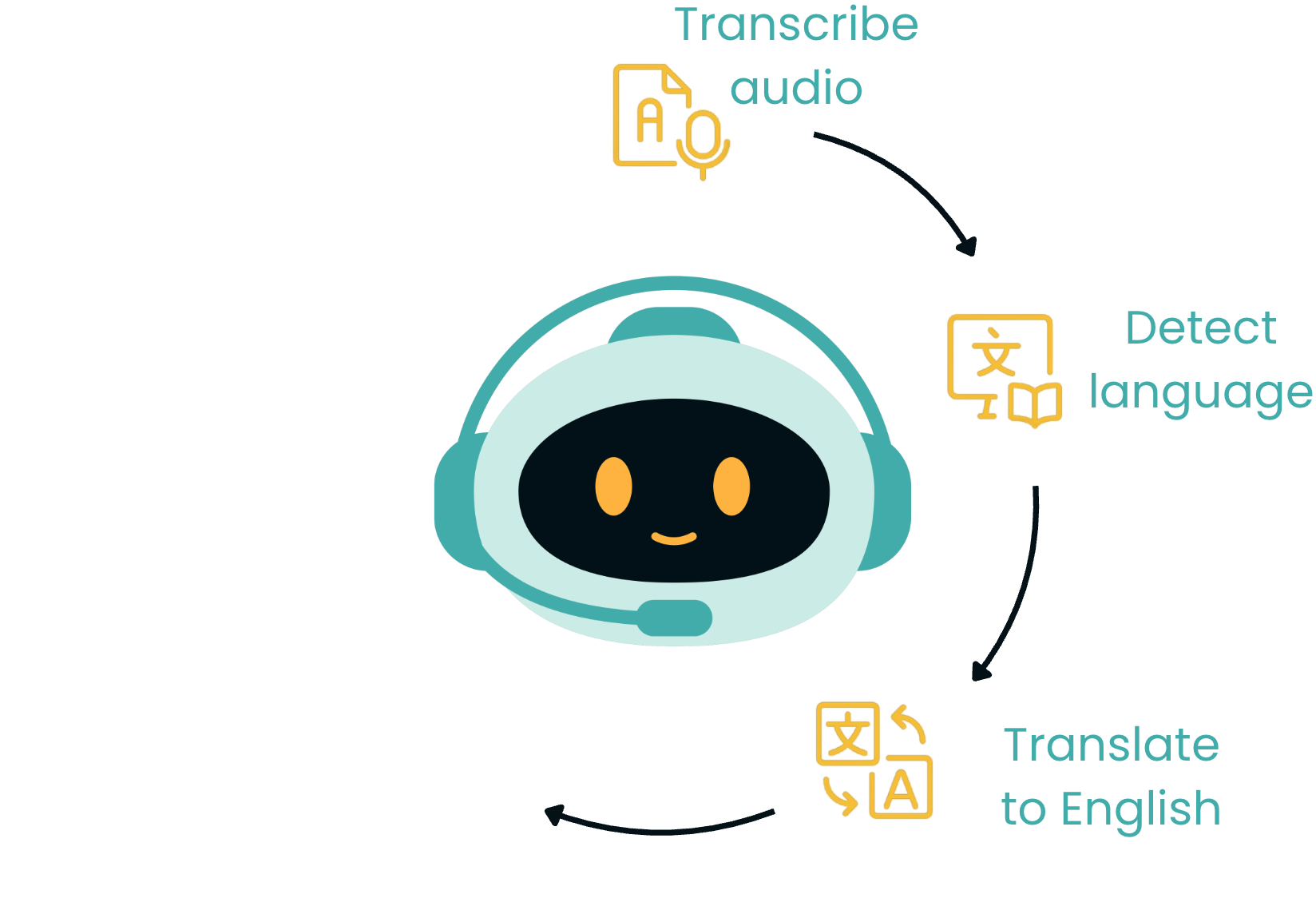

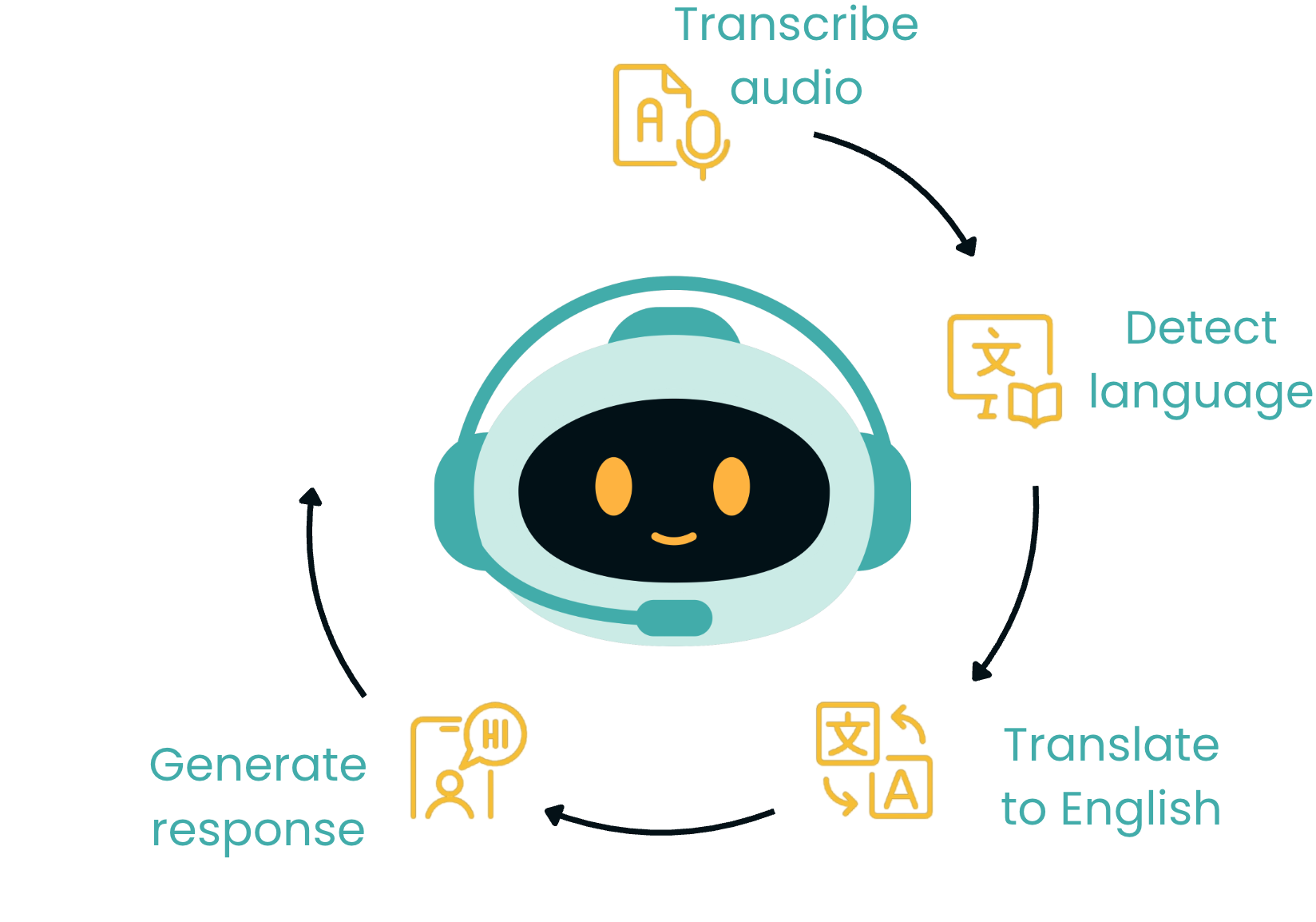

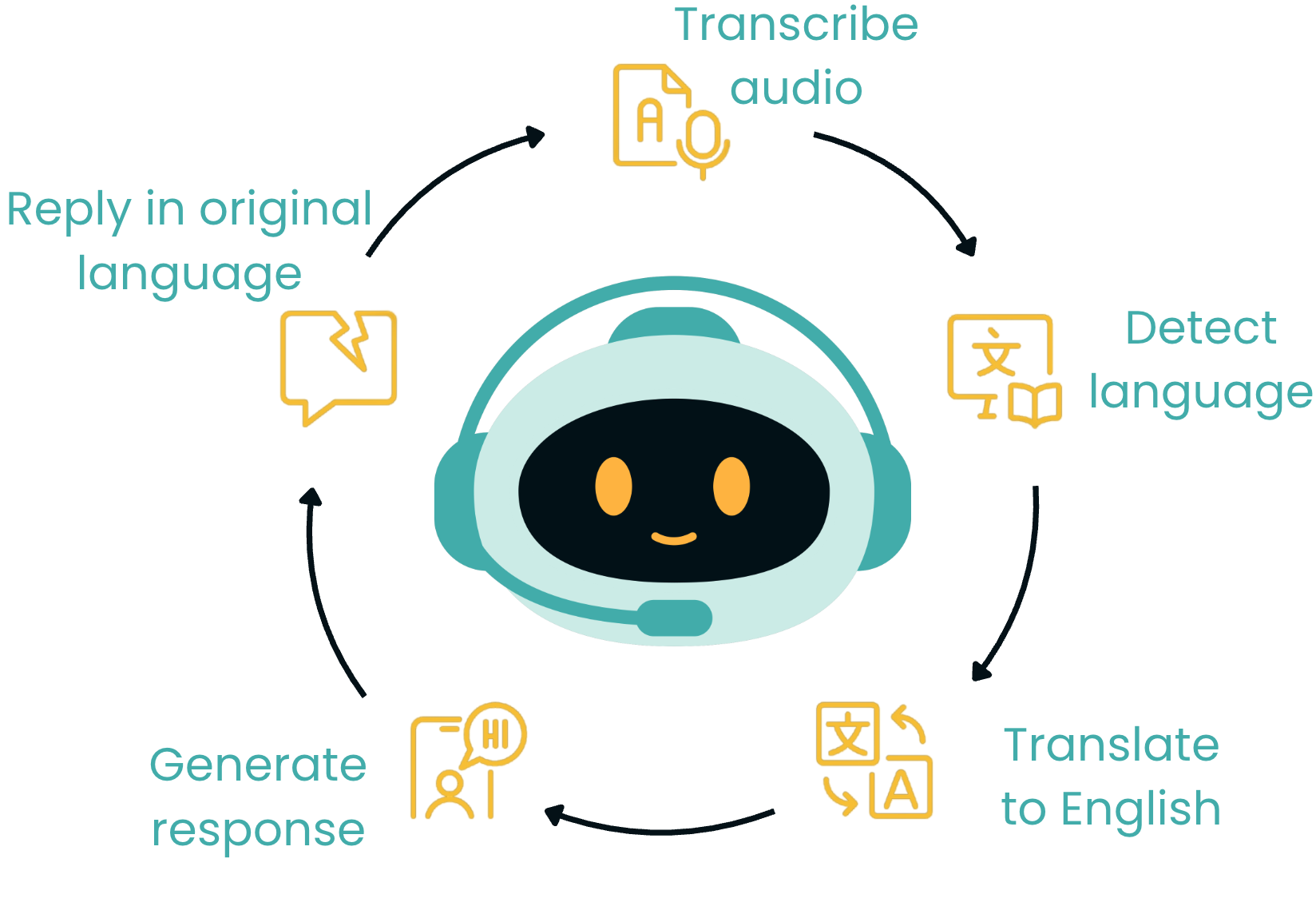

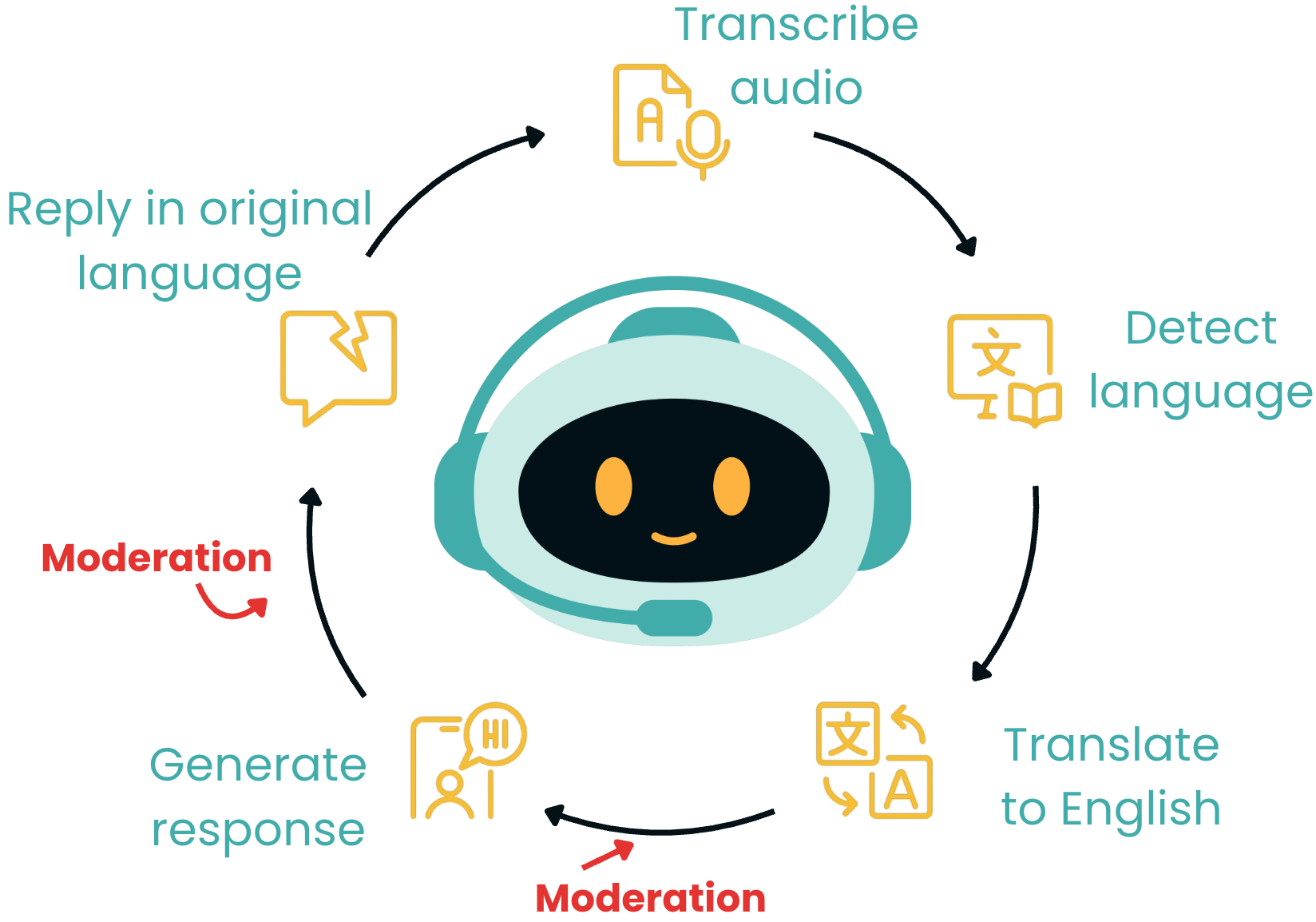

Case study plan


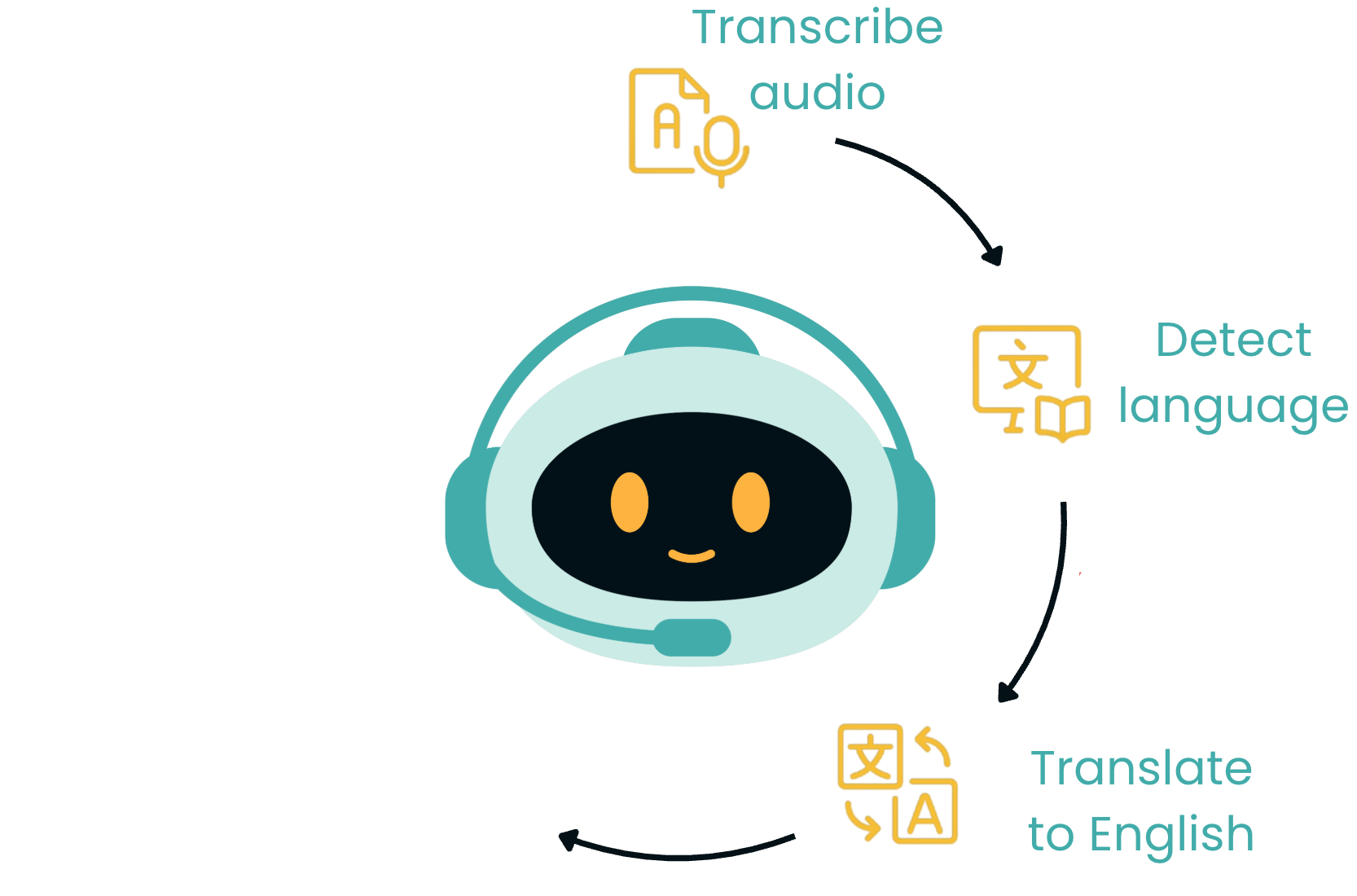

- Transcribe the audio into text

- Detect the language

- Translate into English

- Refine the text


Step 1: transcribe audio

```python
from openai import OpenAI

# Omitting api_key makes the client read the OPENAI_API_KEY environment variable
client = OpenAI(api_key="ENTER YOUR KEY HERE")

# Open the mp3 file in binary mode; "with" closes it after the request
with open("recording.mp3", "rb") as audio_file:
    # Create a transcript with the Whisper model
    response = client.audio.transcriptions.create(
        model="whisper-1",
        file=audio_file)

# Extract and print the transcript
transcript = response.text
print(transcript)
```


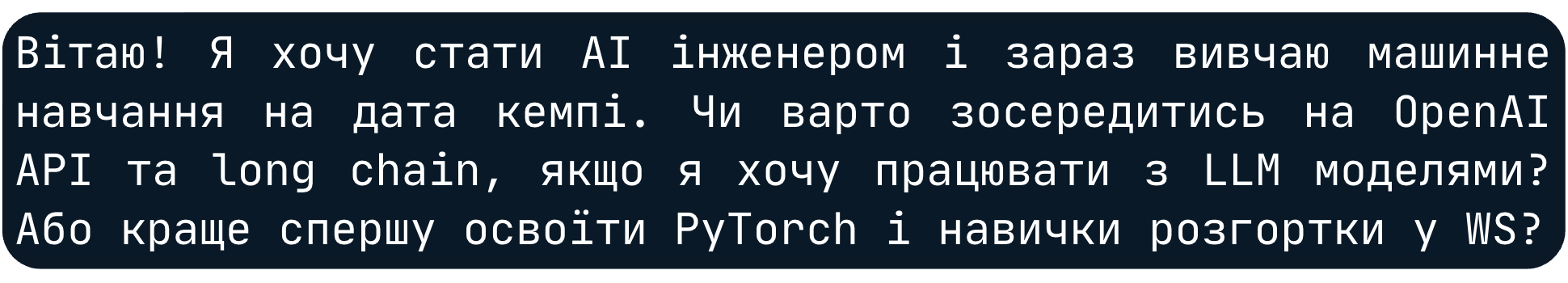
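Before uploading, it can help to catch files the endpoint would refuse. The sketch below assumes the limits listed in OpenAI's speech-to-text documentation at the time of writing (roughly 25 MB per file; flac, mp3, mp4, mpeg, mpga, m4a, ogg, wav or webm). `audio_problems`, `SUPPORTED_EXTENSIONS` and `MAX_BYTES` are hypothetical names, so confirm the current limits before relying on them.

```python
import os

# Assumed limits, based on OpenAI's speech-to-text docs at the time of writing.
SUPPORTED_EXTENSIONS = {
    ".flac", ".mp3", ".mp4", ".mpeg", ".mpga", ".m4a", ".ogg", ".wav", ".webm",
}
MAX_BYTES = 25 * 1024 * 1024  # assumed "25 MB" upload limit


def audio_problems(filename: str, size_bytes: int) -> list[str]:
    """Return reasons the file may be rejected (empty list if none found)."""
    problems = []
    # Compare the extension case-insensitively, e.g. "CALL.WAV" -> ".wav"
    ext = os.path.splitext(filename)[1].lower()
    if ext not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported format: {ext or '(no extension)'}")
    if size_bytes > MAX_BYTES:
        problems.append(f"file is {size_bytes} bytes; limit is {MAX_BYTES}")
    return problems
```

For example, `audio_problems("recording.mp3", os.path.getsize("recording.mp3"))` returns an empty list when the file looks acceptable. Taking the size as an argument keeps the check free of file-system access, which makes it easy to test.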

Step 2: detect language

```python
response = client.chat.completions.create(
    model="gpt-4o-mini",
    max_completion_tokens=5,
    messages=[{"role": "user", "content": f"""Identify the language of the following text and respond only with the language code (e.g., 'en', 'uk', 'fr'): {transcript}"""}])

# Extract detected language
language = response.choices[0].message.content
print(language)
```

uk
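The reply `uk` is the ISO 639-1 language code for Ukrainian, not a country code (the United Kingdom's ISO 3166-1 code is `GB`). Because the reply is free text, the model may occasionally add quotes, a full stop or a whole sentence. A minimal normalizer, with the hypothetical name `normalize_language_code`, accepts only a bare two-letter code and returns `None` otherwise so the caller can decide how to fall back:

```python
import re
from typing import Optional


def normalize_language_code(reply: str) -> Optional[str]:
    """Return a lower-case two-letter code from a short model reply, else None."""
    # Drop surrounding whitespace, then quotes, backticks and full stops
    cleaned = reply.strip().strip("'\"`.").strip().lower()
    # Accept only a bare two-letter code such as "uk"; anything else is rejected
    return cleaned if re.fullmatch(r"[a-z]{2}", cleaned) else None
```

Rejecting longer replies, rather than guessing which word is the code, avoids silently picking up a two-letter word such as "is" from a full sentence.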

Step 3: translate to English

```python
response = client.chat.completions.create(
    model="gpt-4o-mini",
    max_completion_tokens=300,
    messages=[
        {"role": "user", "content": f"""Translate this customer transcript
from language code {language} to English: {transcript}"""}])

# Extract and print the translated text
translated_text = response.choices[0].message.content
print(translated_text)
```
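If the detected code is already `en`, the translation call adds cost without changing anything. One possible guard, sketched below with the hypothetical helper `build_translation_prompt`, builds a prompt modelled on the one in this step and returns `None` when translation can be skipped:

```python
from typing import Optional


def build_translation_prompt(language: str, transcript: str) -> Optional[str]:
    """Return a translation prompt, or None if the transcript is already English."""
    if language.strip().lower() == "en":
        return None  # nothing to translate, so the API call can be skipped
    return (
        "Translate this customer transcript "
        f"from language code {language} to English: {transcript}"
    )
```

The caller can then set `translated_text = transcript` when the helper returns `None`, and send the prompt to `client.chat.completions.create` otherwise.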

Step 4: refine the text

```python
response = client.chat.completions.create(
    model="gpt-4o-mini",
    max_completion_tokens=300,
    messages=[
        {"role": "user",
         "content": f"""You are an AI assistant that corrects transcripts by fixing
misinterpretations, names, and terminology. Please refine the following
transcript:\n\n{translated_text}"""}])

# Extract and print the corrected text
corrected_text = response.choices[0].message.content
print(corrected_text)
```
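Here the instructions and the transcript share one user message. An alternative layout, assumed here rather than taken from the course, moves the standing instructions into a `system` message, a role the Chat Completions API accepts, and keeps only the transcript in the user turn. `build_refinement_messages` is a hypothetical helper:

```python
def build_refinement_messages(text: str) -> list[dict[str, str]]:
    """Build Chat Completions messages with the instructions in a system turn."""
    instructions = (
        "You are an AI assistant that corrects transcripts by fixing "
        "misinterpretations, names, and terminology."
    )
    return [
        {"role": "system", "content": instructions},  # standing instructions
        {"role": "user", "content": f"Please refine the following transcript:\n\n{text}"},
    ]
```

Pass the result as `messages=build_refinement_messages(translated_text)` in the call above. With `max_completion_tokens=300`, a long transcript can be cut short; in that case `response.choices[0].finish_reason` is `"length"`, which is worth checking before trusting `corrected_text`.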

Recap


- Transcribed the audio

- Detected the language and translated the text into English

- Refined the text

- Called the OpenAI API four times ⭐
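The four calls can be chained into one function. The sketch below is an illustration under assumptions: `process_call`, `transcribe` and `ask` are hypothetical names, and the two callables are passed in so the pipeline can be exercised without network access. In practice `transcribe` would wrap `client.audio.transcriptions.create` and return `response.text`, while `ask` would wrap `client.chat.completions.create` and return `response.choices[0].message.content`.

```python
from typing import Callable

# Hypothetical callable types: transcribe(path) -> text; ask(prompt, max_tokens) -> reply
Transcriber = Callable[[str], str]
Asker = Callable[[str, int], str]


def process_call(audio_path: str, transcribe: Transcriber, ask: Asker) -> str:
    """Transcribe, detect the language, translate to English, then refine."""
    transcript = transcribe(audio_path)  # call 1: speech-to-text

    language = ask(  # call 2: language detection, short reply
        "Identify the language of the following text and respond only with "
        f"the language code (e.g., 'en', 'uk', 'fr'): {transcript}",
        5,
    ).strip()

    translated = ask(  # call 3: translation
        f"Translate this customer transcript from language code {language} "
        f"to English: {transcript}",
        300,
    )

    return ask(  # call 4: refinement
        "You are an AI assistant that corrects transcripts by fixing "
        "misinterpretations, names, and terminology. Please refine the "
        f"following transcript:\n\n{translated}",
        300,
    )
```

Injecting the callables keeps the control flow (one speech-to-text call followed by three chat calls) separate from client configuration, so it can be checked with simple fakes.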

Time for practice!
