Mixed precision training

Efficient AI Model Training with PyTorch

Dennis Lee

Data Engineer

Mixed precision training accelerates computation

Faster calculations with less precision

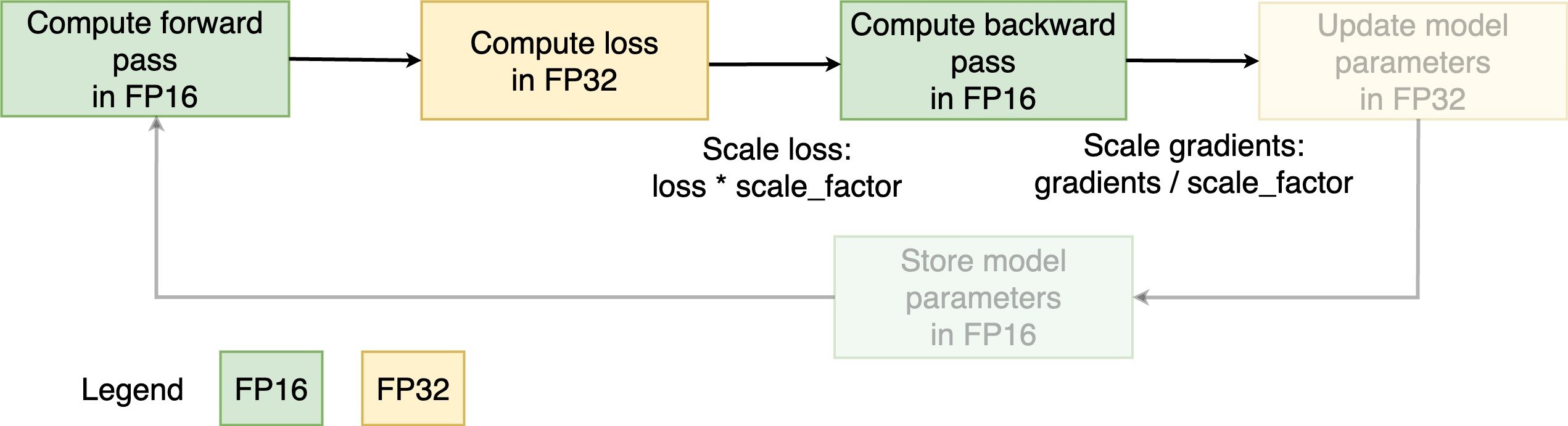

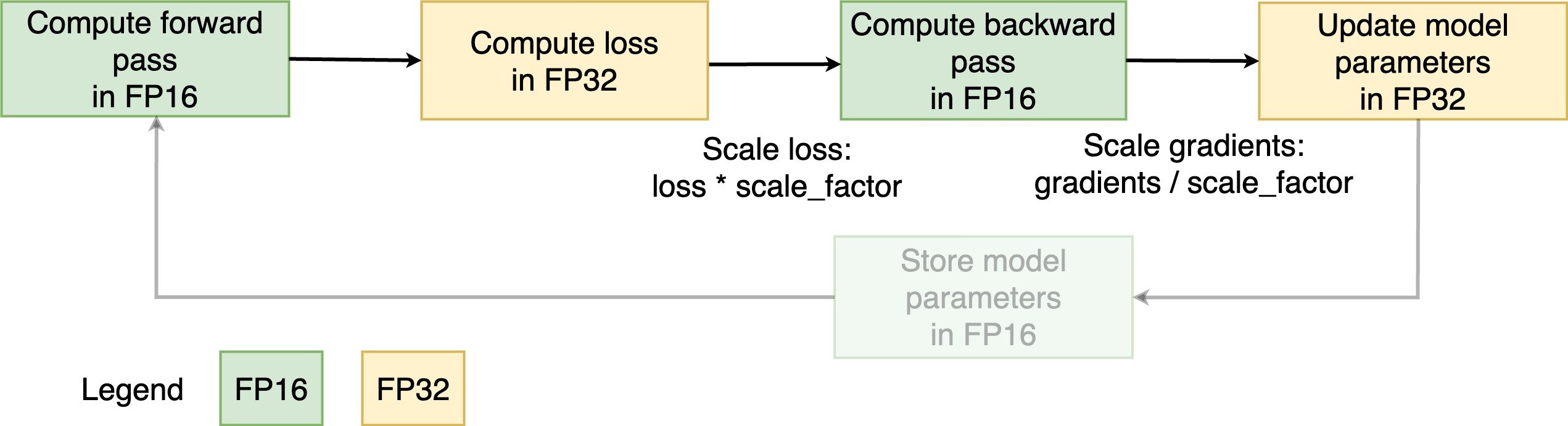

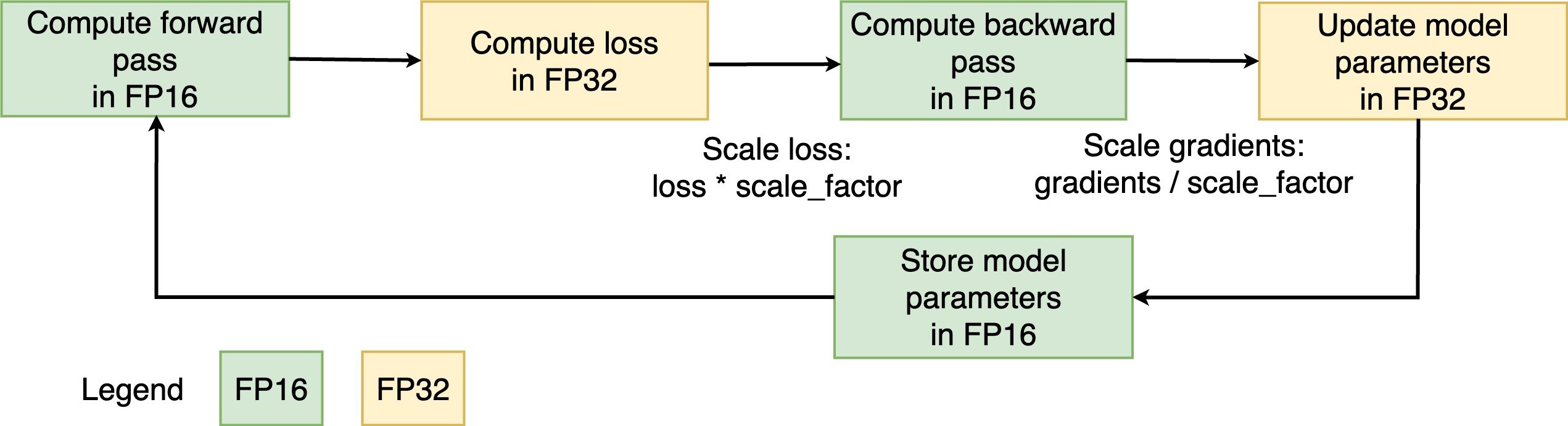

What is mixed precision training?

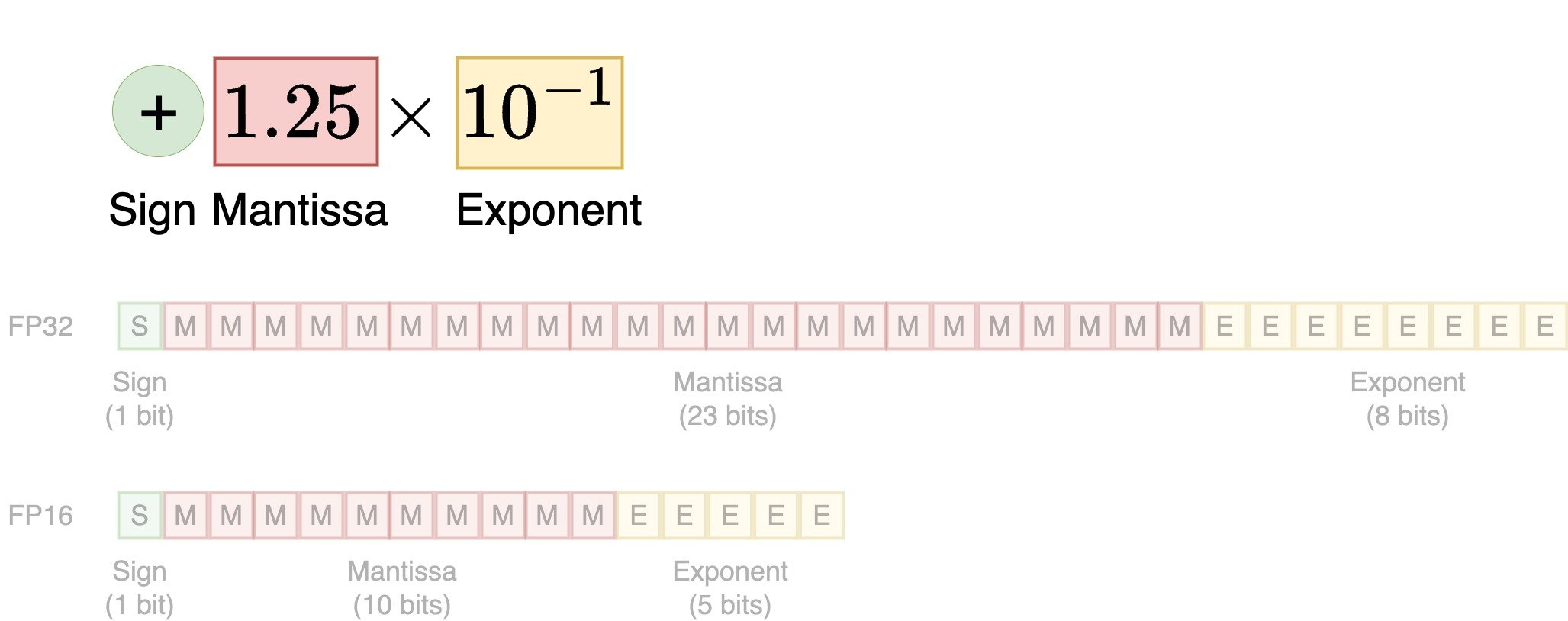

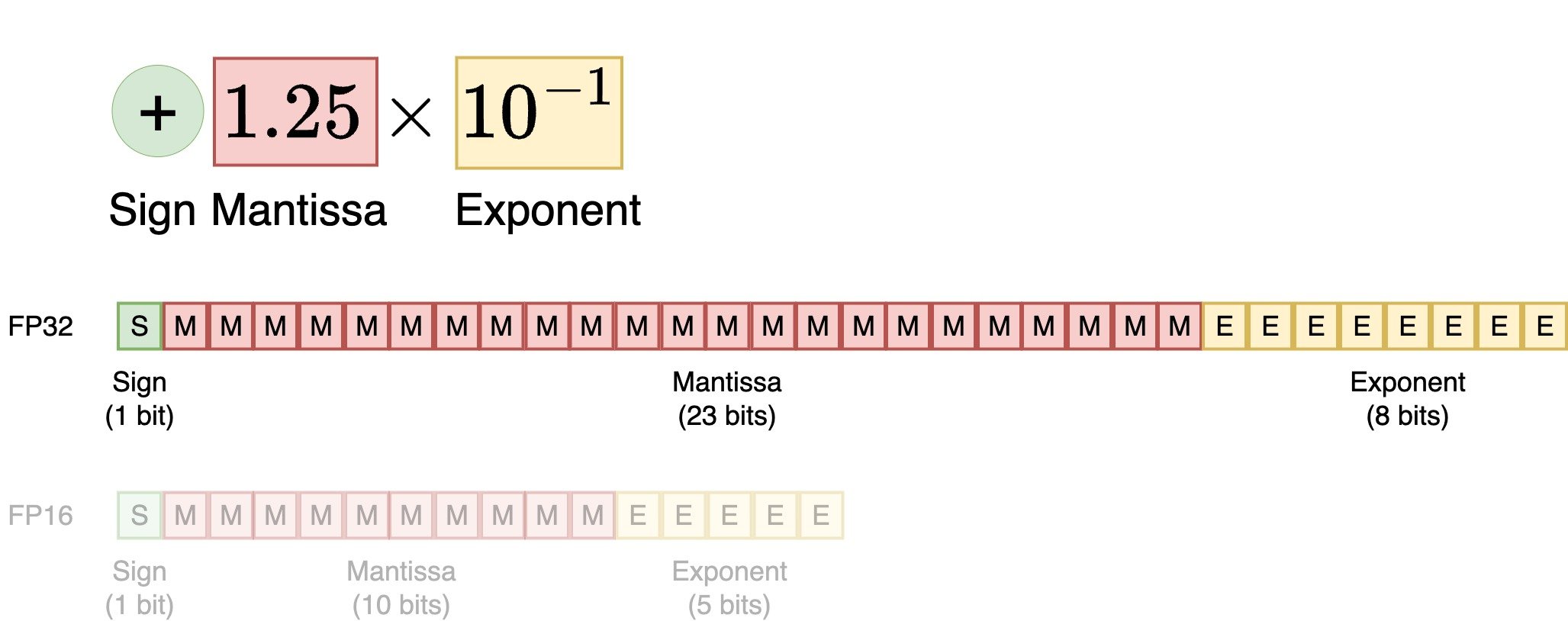

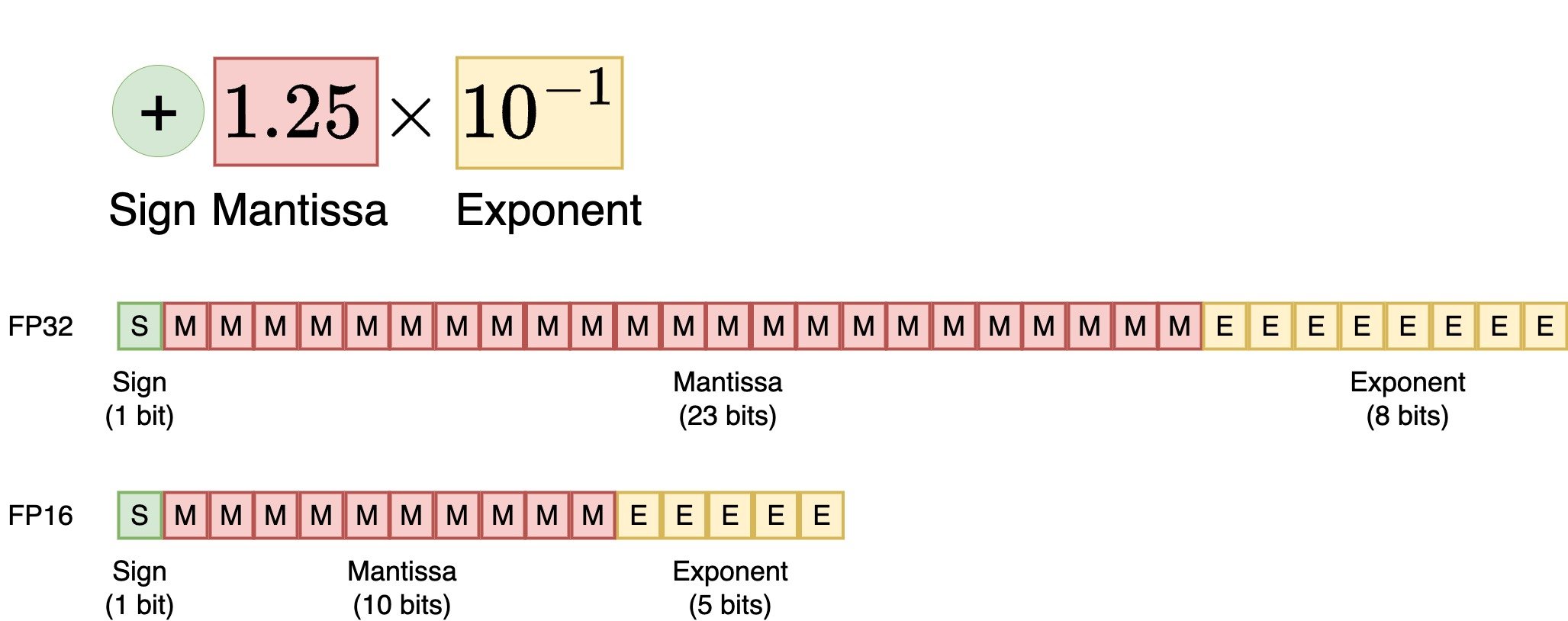

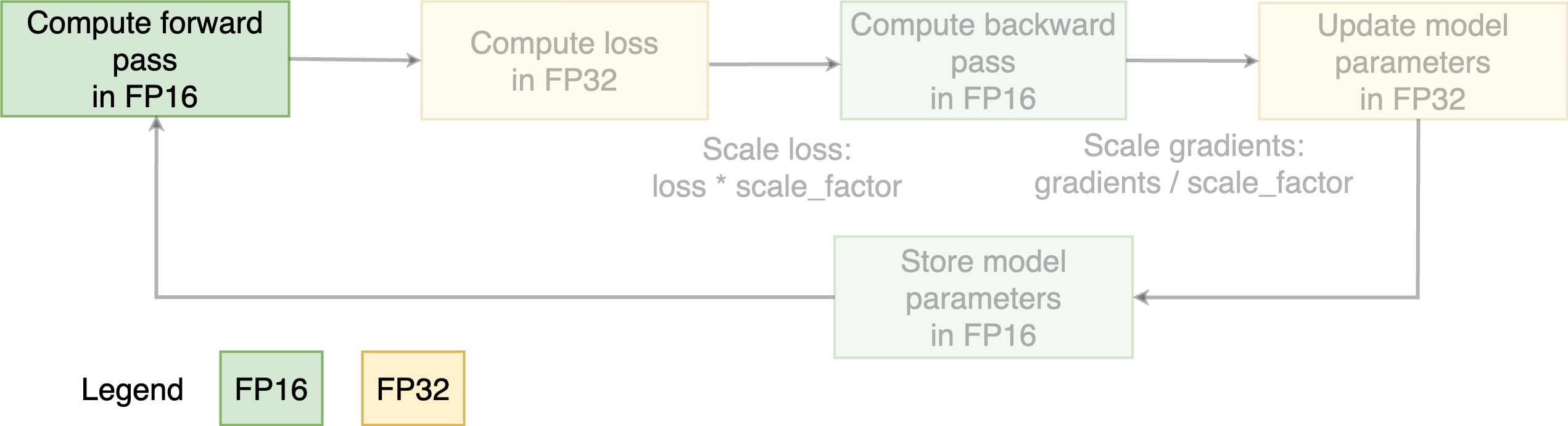

- Mixed precision training: combine 16-bit (FP16) and 32-bit (FP32) floating-point computations to speed up training
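To make the FP16 versus FP32 trade-off concrete, here is a small sketch using only Python's standard library. The helper name `round_trip` is hypothetical; the `struct` codes `e` and `f` correspond to IEEE 754 half and single precision.

```python
import struct

def round_trip(value: float, fmt: str) -> float:
    """Store a Python float in the given struct format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]

# "<e" is IEEE 754 half precision (FP16); "<f" is single precision (FP32).
fp16_bytes = struct.calcsize("<e")
fp32_bytes = struct.calcsize("<f")

x = 0.1
fp16_error = abs(round_trip(x, "<e") - x)  # rounding error when stored as FP16
fp32_error = abs(round_trip(x, "<f") - x)  # rounding error when stored as FP32

print(f"FP16: {fp16_bytes} bytes per value, error {fp16_error:.2e}")
print(f"FP32: {fp32_bytes} bytes per value, error {fp32_error:.2e}")
```

FP16 halves the storage per value but rounds more coarsely, which is the trade-off mixed precision training tries to balance.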

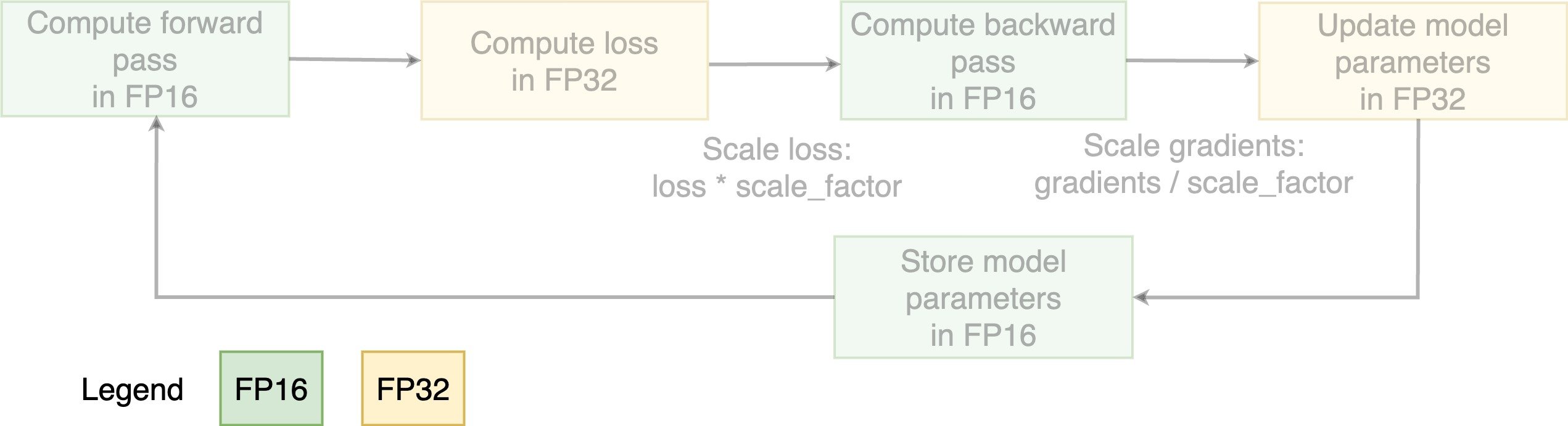

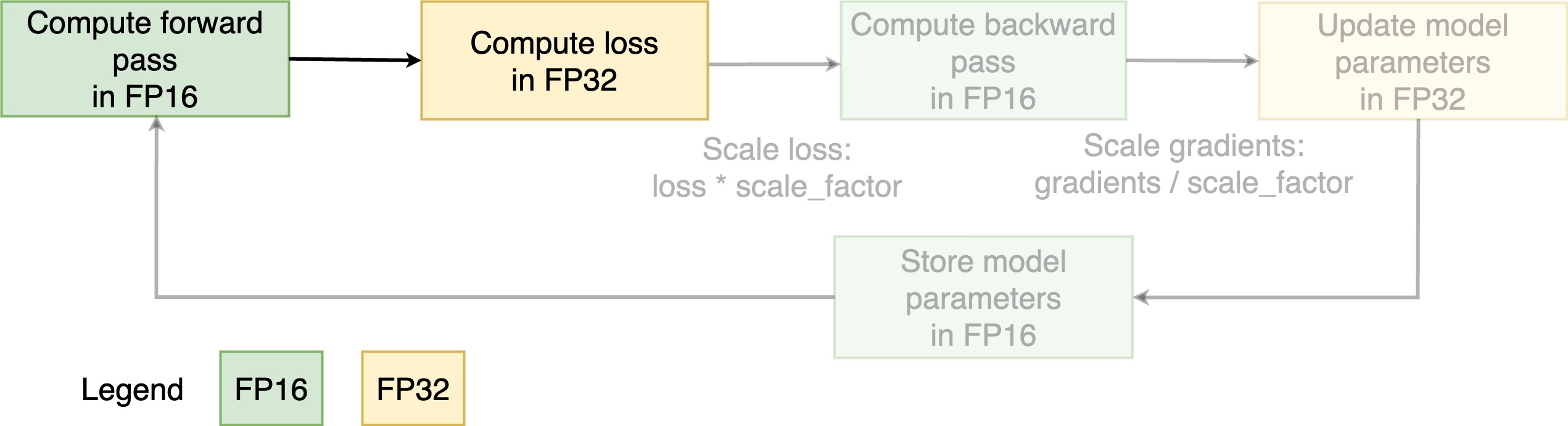

- Underflow: a number vanishes to 0 because it is too small to represent at the given precision
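Underflow can be observed directly. The sketch below emulates an FP16 cast with the standard library; the helper `to_fp16` and the sample values are illustrative assumptions, not part of the course code.

```python
import struct

def to_fp16(value: float) -> float:
    """Round a Python float to the nearest FP16 value (hypothetical helper)."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

smallest_fp16 = 2.0 ** -24   # smallest positive number FP16 can represent
tiny_gradient = 1e-8         # illustrative value below that threshold

kept = to_fp16(smallest_fp16)    # still representable
vanished = to_fp16(tiny_gradient)  # rounds to zero: underflow

print(kept, vanished)
```

Any gradient that vanishes this way contributes nothing to the weight update.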

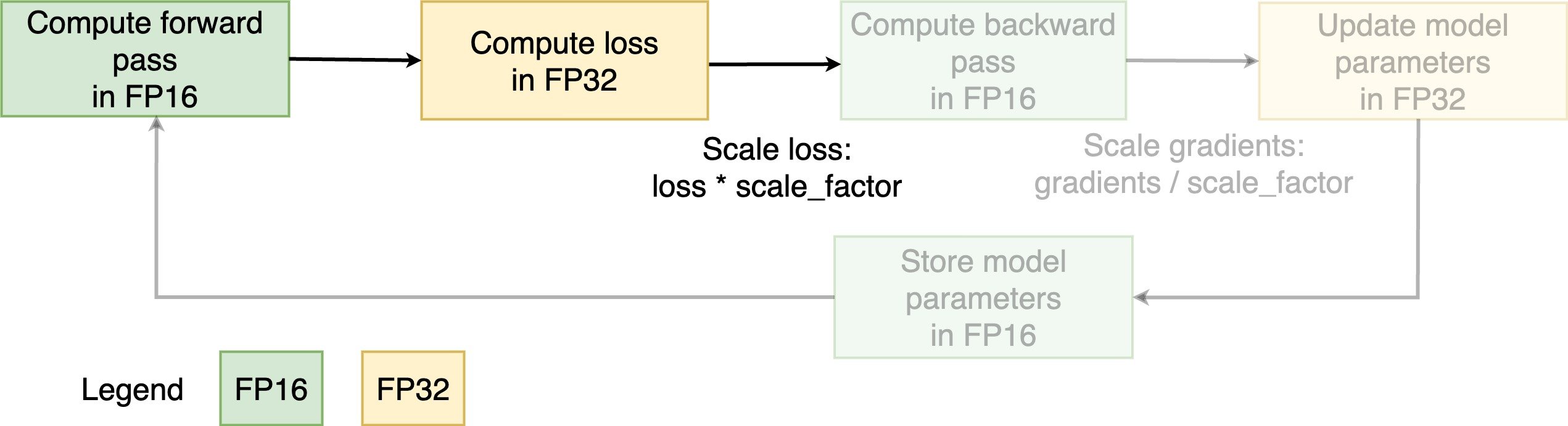

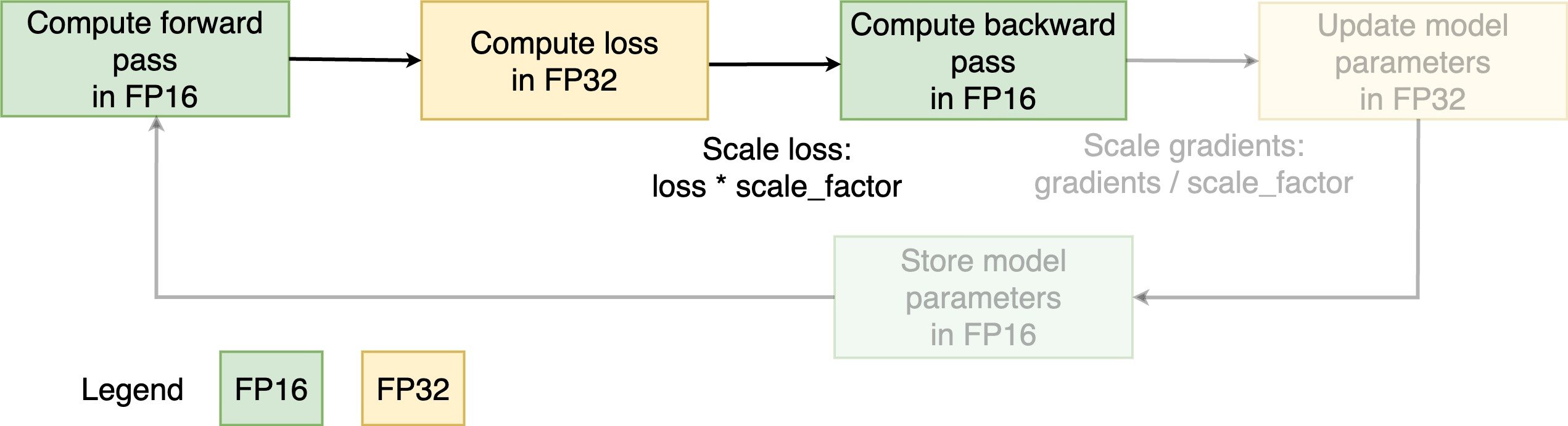

- Scale loss to prevent underflow
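A minimal sketch of why scaling helps, assuming a scale factor of 65536 and an illustrative gradient value; `to_fp16` is a hypothetical helper that emulates an FP16 cast. Because gradients are linear in the loss, multiplying the loss by a constant multiplies every gradient by the same constant.

```python
import struct

def to_fp16(value: float) -> float:
    """Round a Python float to the nearest FP16 value (hypothetical helper)."""
    return struct.unpack("<e", struct.pack("<e", value))[0]

gradient = 1e-8    # illustrative gradient, too small for FP16
scale = 65536.0    # assumed scale factor (2**16)

unscaled = to_fp16(gradient)        # underflows to zero
scaled = to_fp16(gradient * scale)  # large enough to survive in FP16
recovered = scaled / scale          # unscale in higher precision

print(unscaled, scaled, recovered)
```

The recovered value is close to the original gradient, whereas the unscaled FP16 value is lost entirely.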

PyTorch implementation

Mixed precision training with PyTorch

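    import torch

    scaler = torch.amp.GradScaler()  # scales the loss to prevent gradient underflow

    for batch in train_dataloader:
        inputs, targets = batch["input_ids"], batch["labels"]
        # Run the forward pass in FP16 (device_type as on the slide; use "cuda" on a GPU)
        with torch.autocast(device_type="cpu", dtype=torch.float16):
            outputs = model(inputs, labels=targets)
            loss = outputs.loss
        scaler.scale(loss).backward()  # backpropagate the scaled loss
        scaler.step(optimizer)         # unscale gradients, then update the weights
        scaler.update()                # adjust the scale factor for the next step
        optimizer.zero_grad()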
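The scale factor is typically adjusted during training rather than fixed. The sketch below is a simplified, hypothetical stand-in (`ToyLossScaler`) for that idea, not PyTorch's actual implementation: it shrinks the scale when gradients overflow and grows it after a run of successful steps. The default values are chosen to resemble those documented for `GradScaler`.

```python
import math

class ToyLossScaler:
    """Hypothetical, simplified dynamic loss scaler for illustration only."""

    def __init__(self, init_scale=65536.0, growth_factor=2.0,
                 backoff_factor=0.5, growth_interval=2000):
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self._good_steps = 0

    def step(self, scaled_grads):
        """Return unscaled gradients, or None if the step must be skipped."""
        if any(not math.isfinite(g) for g in scaled_grads):
            # Overflow: skip the update and shrink the scale.
            self.scale *= self.backoff_factor
            self._good_steps = 0
            return None
        grads = [g / self.scale for g in scaled_grads]
        self._good_steps += 1
        if self._good_steps == self.growth_interval:
            # Enough clean steps in a row: try a larger scale.
            self.scale *= self.growth_factor
            self._good_steps = 0
        return grads

scaler = ToyLossScaler(init_scale=8.0, growth_interval=2)
first = scaler.step([16.0, 8.0])          # finite gradients are unscaled
skipped = scaler.step([float("inf")])     # overflow skips the step
scale_after_overflow = scaler.scale       # scale was halved
```

Skipping the step on overflow keeps a single bad batch from corrupting the weights, at the cost of one lost update.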

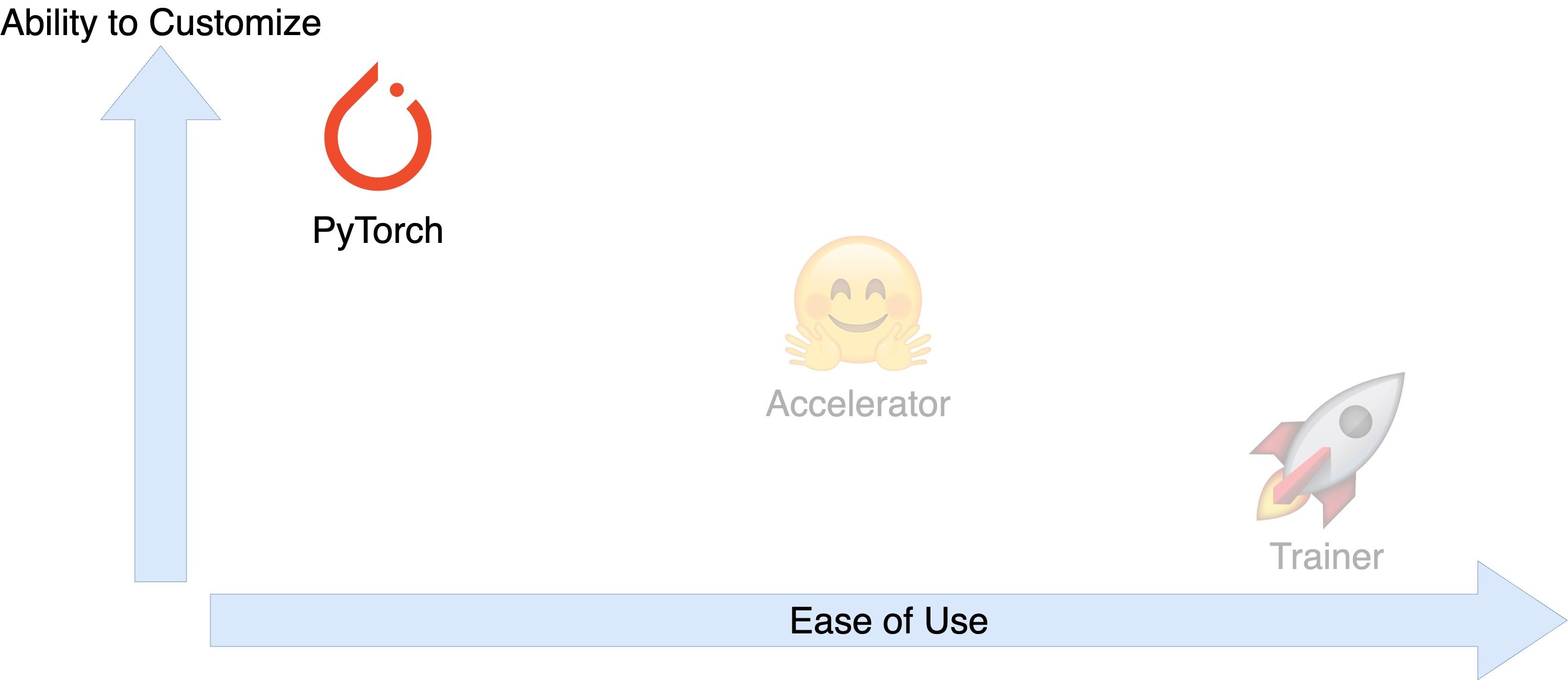

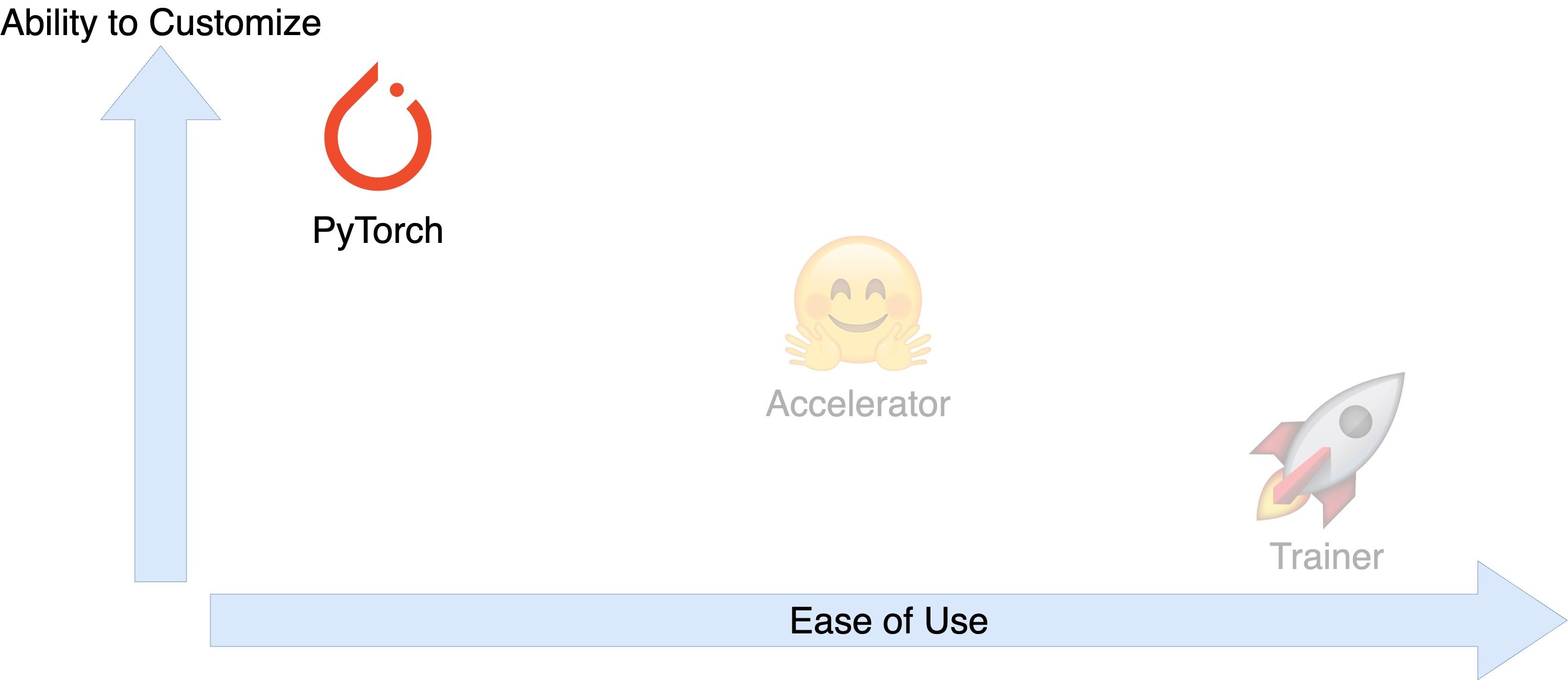

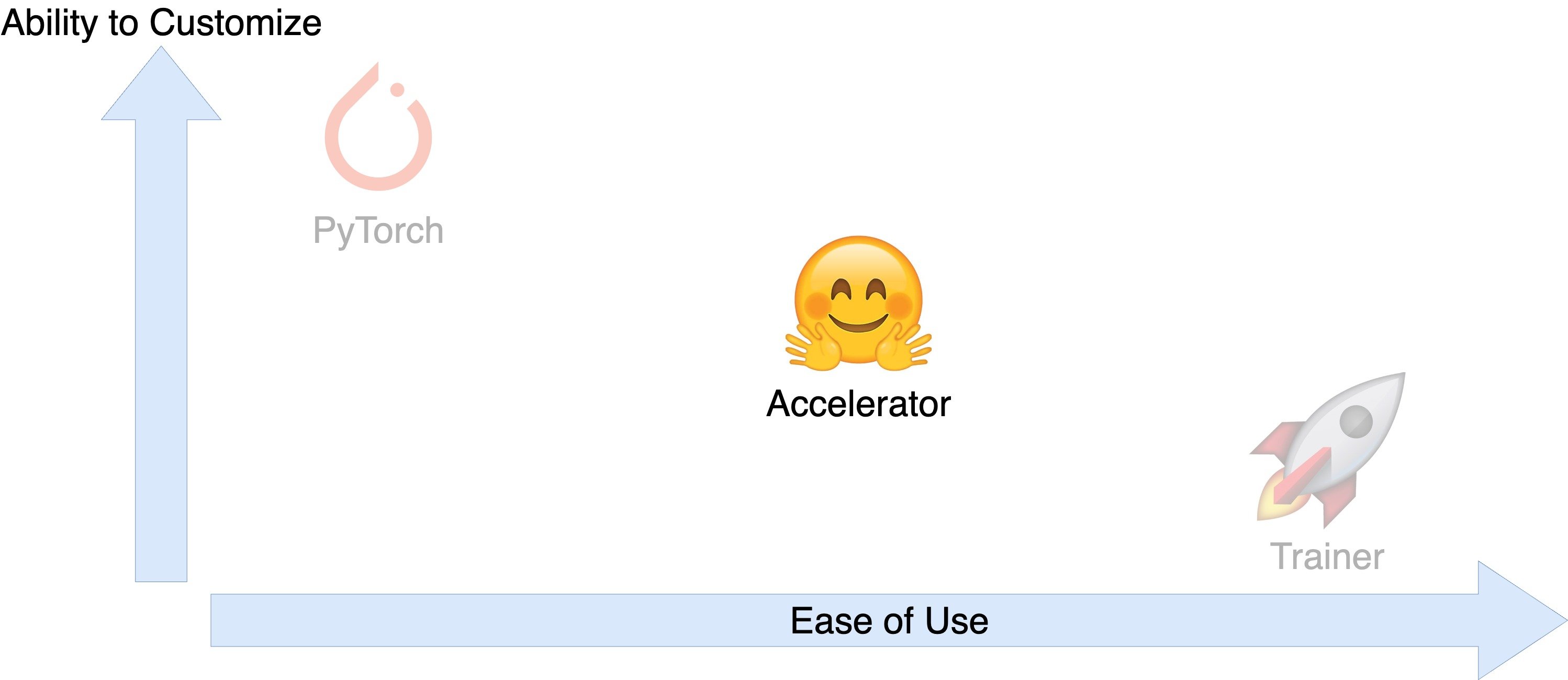

From PyTorch to Accelerator

Mixed precision training with Accelerator

    from accelerate import Accelerator

    # Accelerator handles autocasting and loss scaling internally
    accelerator = Accelerator(mixed_precision="fp16")
    model, optimizer, train_dataloader, lr_scheduler = accelerator.prepare(
        model, optimizer, train_dataloader, lr_scheduler
    )

    for batch in train_dataloader:
        inputs, targets = batch["input_ids"], batch["labels"]
        outputs = model(inputs, labels=targets)
        loss = outputs.loss
        accelerator.backward(loss)  # replaces loss.backward()
        optimizer.step()
        optimizer.zero_grad()

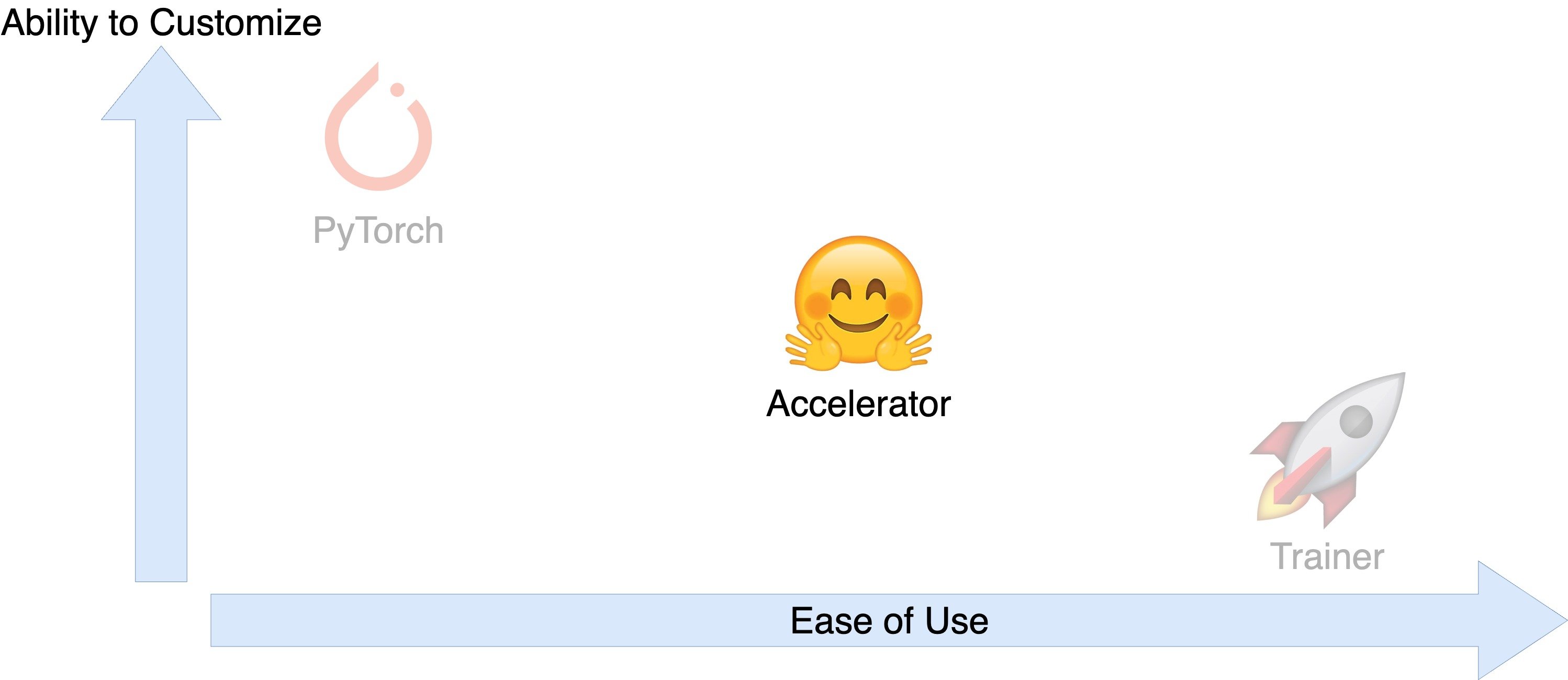

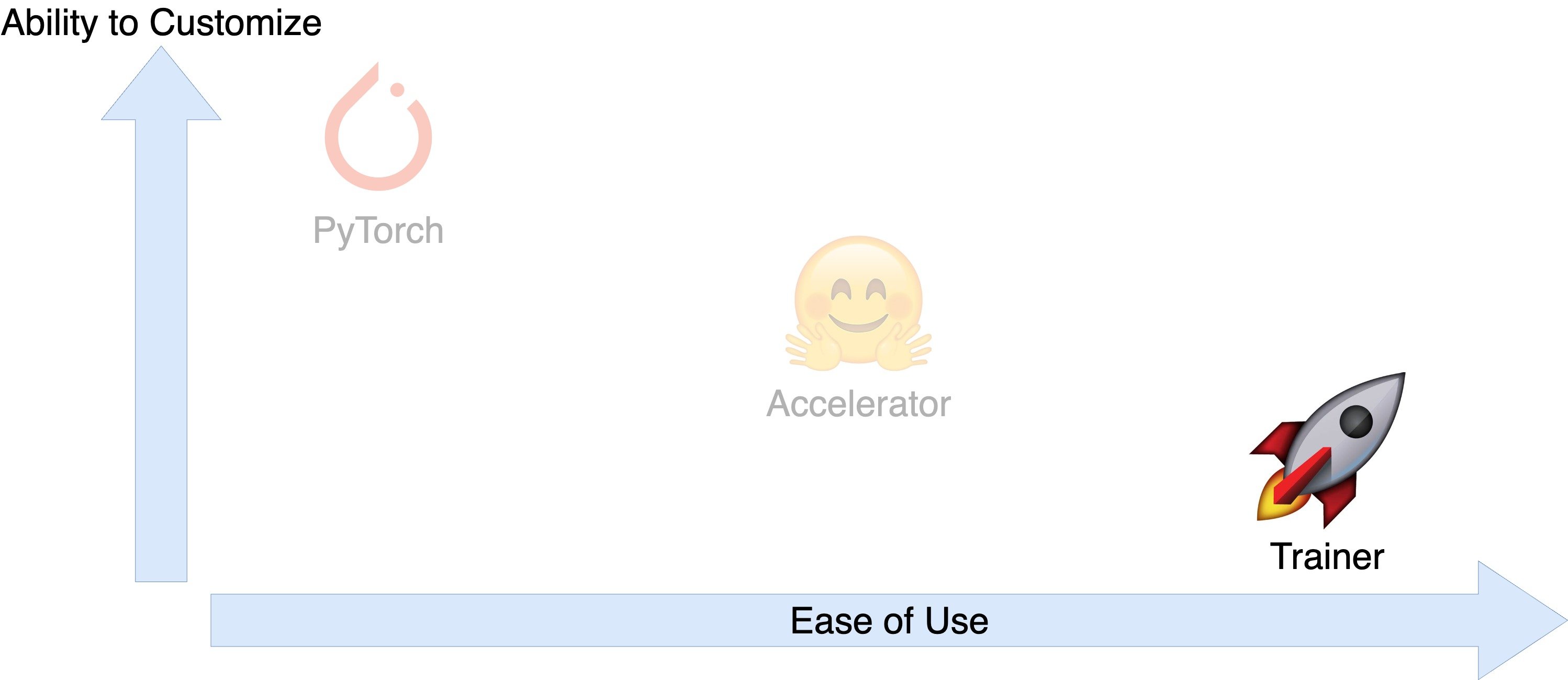

From Accelerator to Trainer

Mixed precision training with Trainer

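    from transformers import Trainer, TrainingArguments

    training_args = TrainingArguments(
        output_dir="./results",
        evaluation_strategy="epoch",  # named eval_strategy in newer transformers releases
        fp16=True,  # enable FP16 mixed precision training
    )
    trainer = Trainer(
        model=model,
        args=training_args,
        train_dataset=dataset["train"],
        eval_dataset=dataset["validation"],
        compute_metrics=compute_metrics,
    )
    trainer.train()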

Let's practice!
