Boundaries and Limitations of AI

Introduction to AI for Work

James Chapman

AI Curriculum Manager, DataCamp

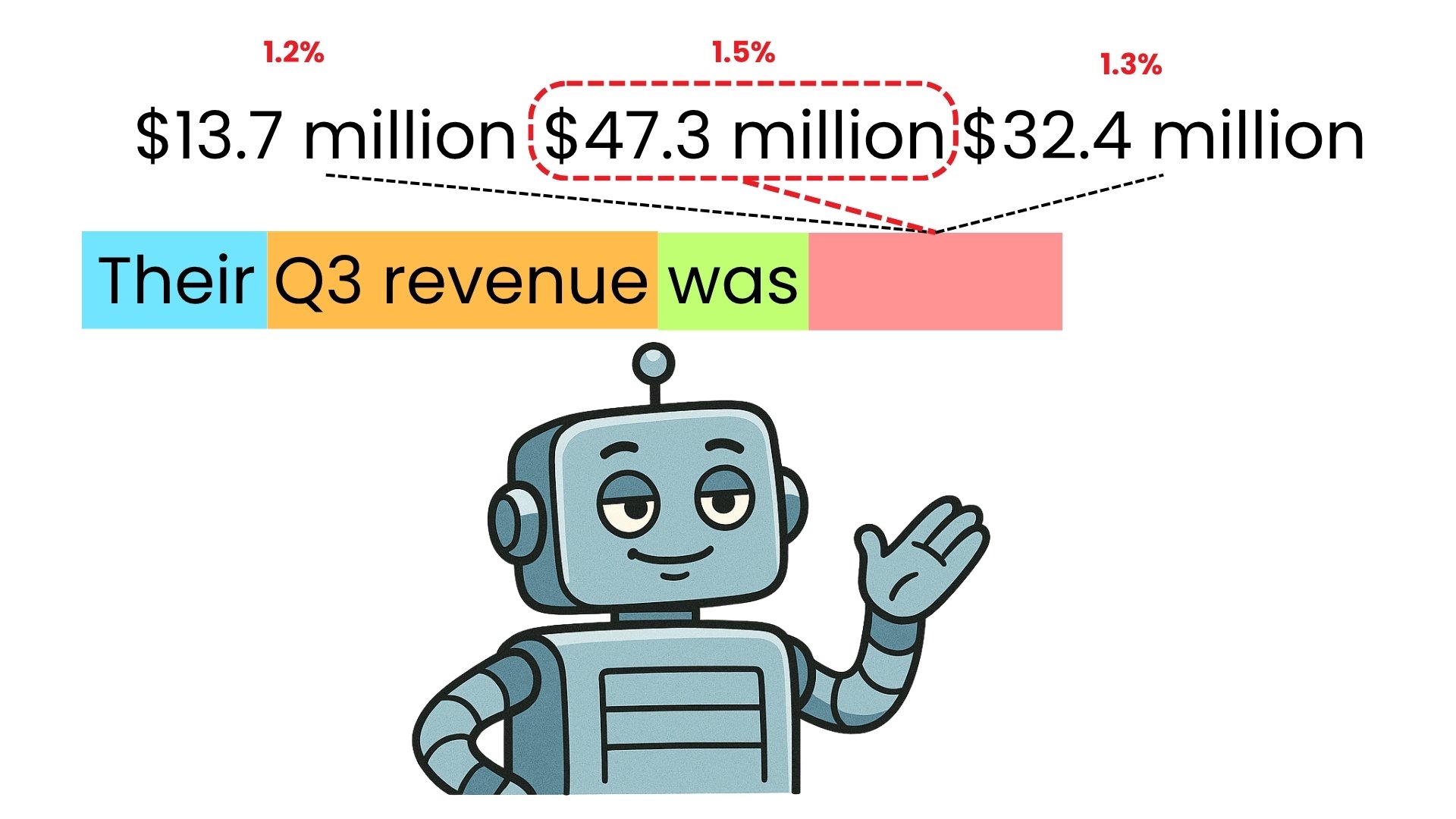

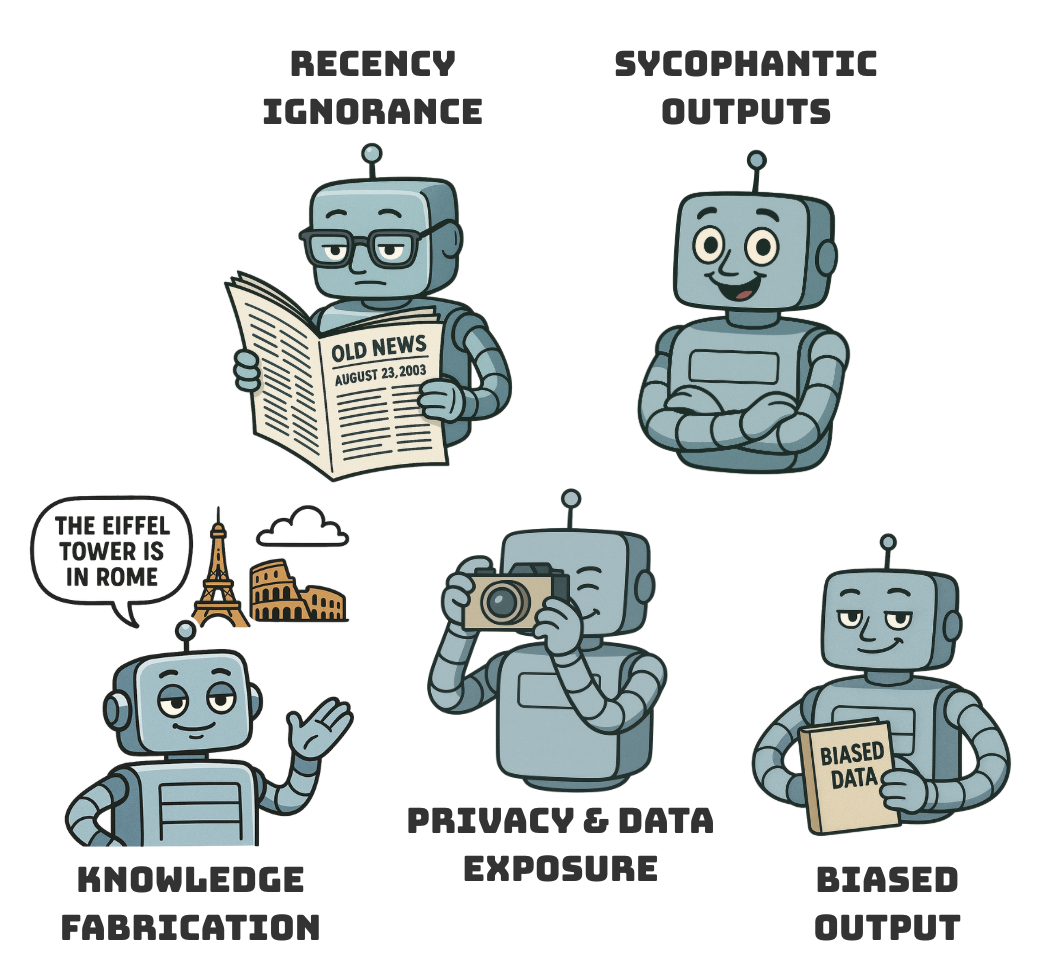

Risk #1: Knowledge Fabrication

- Also called hallucination: false information that AI presents as correct

- Common Examples:

- Incorrect statistics

- Fake citations

- Hallucinated events

Risk #2: Recency Ignorance

- Knowledge cutoff date → date limit on the model's knowledge of events

Examples:

- Outdated regulations, prices, locations, and coding syntax

Confirm time-sensitive facts against current sources

- Many AI tools also support internet search

Risk #3: Biased Outputs

- AI can reflect and amplify social biases from its training data

- Result → stereotypical or discriminatory content

- Review AI-generated content carefully!

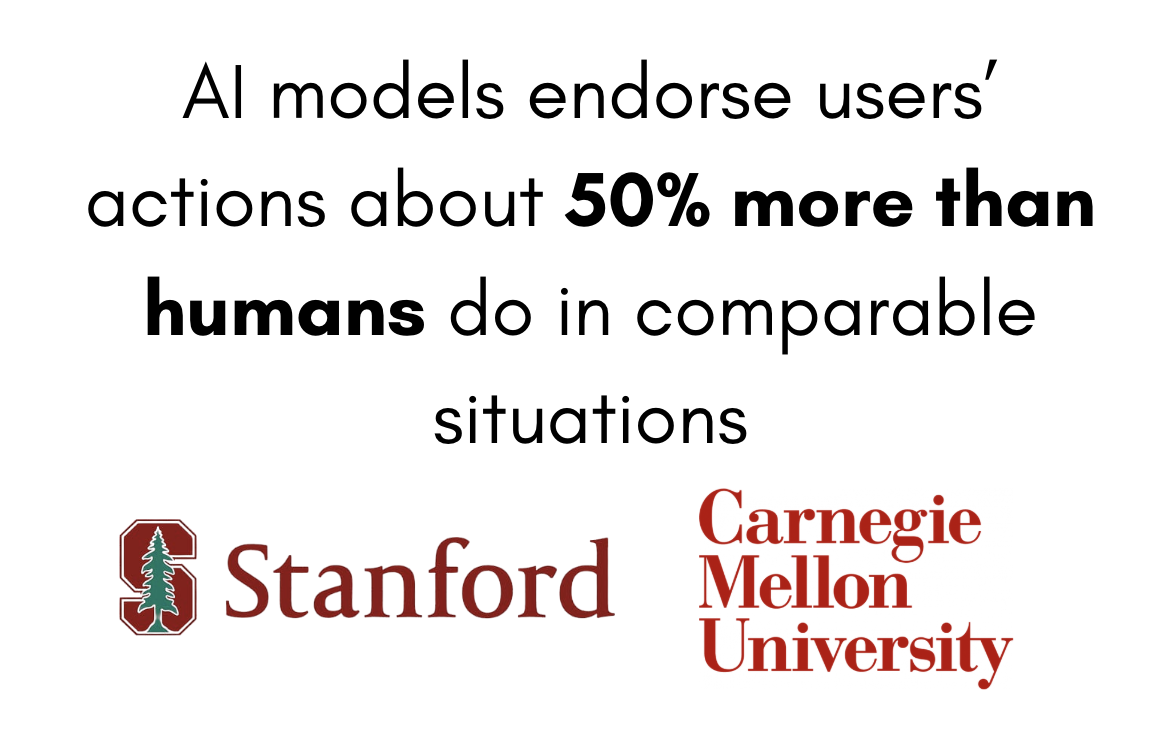

Risk #4: Sycophantic Outputs

Sycophantic → telling you what it thinks you want to hear

- Validate perspectives, support decisions, and affirm thinking

Problematic when you require a critical perspective

- By-product of how LLMs are trained on human ratings of responses

1 https://arxiv.org/abs/2510.01395

Risk #5: Privacy and Data Exposure

- Information shared with AI tools may not stay private!

- Uploading spreadsheets, documents, or client data can be risky

- Data may be stored on external servers, used for model training, or become searchable

Risks

- Violating privacy laws (e.g., GDPR)

- Losing a competitive advantage

- Damaging client trust

- Always check the data policy of the AI tool

- If in doubt: consult an IT or InfoSec expert

From Risk to Responsibility

Let's practice!
