Classification and feature engineering

Machine Learning for Time Series Data in Python

Chris Holdgraf

Fellow, Berkeley Institute for Data Science

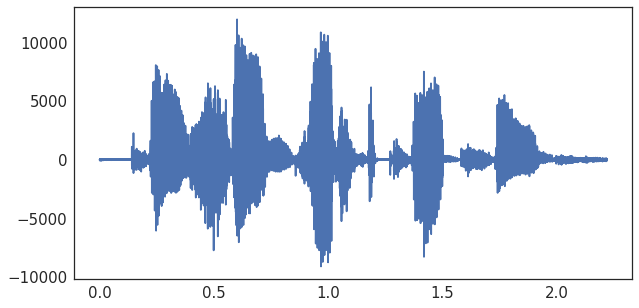

Always visualize raw data before fitting models

Visualize your timeseries data!

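import numpy as np

import matplotlib.pyplot as plt

# Assumes audio is a single 1-D recording and sfreq is its sampling rate in Hz

# Convert sample indices to time in seconds

ixs = np.arange(audio.shape[-1])

time = ixs / sfreq

fig, ax = plt.subplots()

ax.plot(time, audio)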

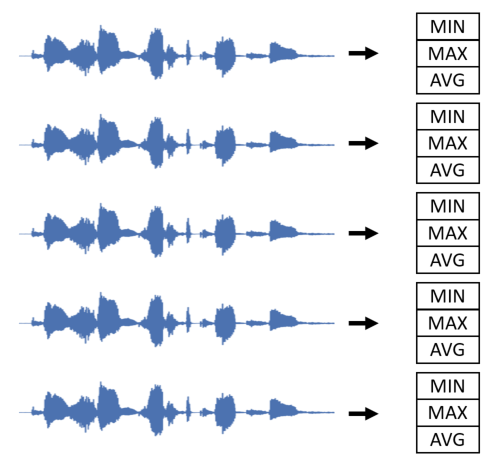
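When `audio` holds several files at once, with shape (n_files, time) as later in this section, the files can share one time axis. The following is a minimal sketch under stated assumptions: the data is synthetic noise rather than real recordings, and the sampling rate of 2205 Hz is made up for illustration.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend, so no display is needed
import matplotlib.pyplot as plt
import numpy as np

# Assumptions: synthetic noise and a hypothetical sampling frequency
rng = np.random.default_rng(0)
sfreq = 2205
audio = rng.normal(size=(3, 7000))  # (n_files, time)

# Convert sample indices to time in seconds
ixs = np.arange(audio.shape[-1])
time = ixs / sfreq

# plot() draws one line per column, so transpose to (time, n_files)
fig, ax = plt.subplots()
lines = ax.plot(time, audio.T)
ax.set(xlabel="Time (s)", ylabel="Amplitude")
```

Transposing matters here: the first dimension of the plotted array has to match the length of `time`.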

What features to use?

- Raw timeseries data is usually too noisy to use directly for classification

- We need to calculate features!

- An easy start: summarize your audio data

Calculating multiple features

print(audio.shape)

# (n_files, time)

(20, 7000)

means = np.mean(audio, axis=-1)

maxs = np.max(audio, axis=-1)

stds = np.std(audio, axis=-1)

print(means.shape)

# (n_files,)

(20,)
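The three summary statistics can be gathered into a single feature matrix. This sketch assumes random noise in place of real audio, and `summary_features` is a hypothetical helper name, not part of any library.

```python
import numpy as np


def summary_features(audio):
    """Return an (n_files, 3) array holding the mean, max, and std of each file."""
    means = np.mean(audio, axis=-1)
    maxs = np.max(audio, axis=-1)
    stds = np.std(audio, axis=-1)
    # Each statistic has shape (n_files,); stack them as columns
    return np.column_stack([means, maxs, stds])


# Assumption: random noise standing in for 20 recordings of 7000 samples each
rng = np.random.default_rng(0)
audio = rng.normal(size=(20, 7000))

features = summary_features(audio)
print(features.shape)
# (20, 3)
```

Using `axis=-1` collapses the time dimension only, so each file keeps its own row.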

Fitting a classifier with scikit-learn

- We've just collapsed a 2-D dataset (samples x time) into several 1-D features (one value per sample)

- We can combine these features and use them as inputs to a model

- If we have a label for each sample, we can use scikit-learn to create and fit a classifier

Preparing your features for scikit-learn

# Import a linear classifier

from sklearn.svm import LinearSVC

# Stack the features as columns so X has shape (n_files, n_features)

X = np.column_stack([means, maxs, stds])

# scikit-learn expects the labels as a 1-D array of shape (n_files,)

y = labels.ravel()

model = LinearSVC()

model.fit(X, y)
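For a version that runs end to end, here is a minimal sketch on synthetic data. The two classes ("quiet" and "loud" noise) are invented for illustration and do not come from the course dataset. The labels are passed as a 1-D array of shape (n_files,), the form scikit-learn classifiers expect.

```python
import numpy as np
from sklearn.svm import LinearSVC

rng = np.random.default_rng(0)

# Assumption: two hypothetical classes that differ only in amplitude
quiet = rng.normal(scale=1.0, size=(10, 7000))
loud = rng.normal(scale=3.0, size=(10, 7000))
audio = np.concatenate([quiet, loud])  # (n_files, time)
labels = np.array([0] * 10 + [1] * 10)  # (n_files,)

# One row per file, one column per feature
X = np.column_stack([
    audio.mean(axis=-1),
    audio.max(axis=-1),
    audio.std(axis=-1),
])
y = labels

model = LinearSVC()
model.fit(X, y)

print(X.shape, y.shape)
# (20, 3) (20,)
```

After fitting, `model.coef_` holds one weight per feature column, which gives a rough sense of which summary statistic the linear classifier relies on.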

Scoring your scikit-learn model

from sklearn.metrics import accuracy_score

# Predict on test data that was not used to fit the model

predictions = model.predict(X_test)

# Score our model with % correct

# Manually

percent_score = sum(predictions == labels_test) / len(labels_test)

# Using a sklearn scorer

percent_score = accuracy_score(labels_test, predictions)
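The sketch below shows one way to obtain `X_test` and `labels_test`, and checks that the manual score and `accuracy_score` agree. It assumes a synthetic feature matrix, and splitting with `train_test_split` is this sketch's choice rather than something the slides specify.

```python
import numpy as np
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.svm import LinearSVC

rng = np.random.default_rng(0)

# Assumption: a synthetic (n_files, n_features) matrix with two shifted classes
labels = np.repeat([0, 1], 20)
X = rng.normal(size=(40, 3)) + 2 * labels[:, None]

# Hold out a quarter of the files for testing
X_train, X_test, labels_train, labels_test = train_test_split(
    X, labels, test_size=0.25, random_state=0, stratify=labels
)

model = LinearSVC()
model.fit(X_train, labels_train)
predictions = model.predict(X_test)

# Fraction of correct predictions, computed two ways
manual_score = np.mean(predictions == labels_test)
sklearn_score = accuracy_score(labels_test, predictions)
```

Both values are fractions between 0 and 1; multiply by 100 to report a percentage.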

Let's practice!
