Logistic regression and regularization

Linear Classifiers in Python

Michael (Mike) Gelbart

Instructor, The University of British Columbia

Regularized logistic regression

How does regularization affect training accuracy?

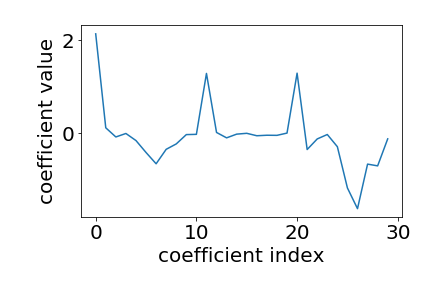

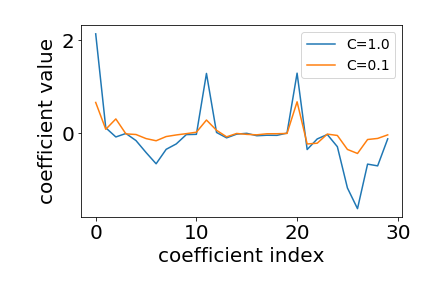

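In scikit-learn, C is the inverse of the regularization strength: C=100 means weak regularization and C=0.01 means strong regularization.

from sklearn.linear_model import LogisticRegression

lr_weak_reg = LogisticRegression(C=100)

lr_strong_reg = LogisticRegression(C=0.01)

lr_weak_reg.fit(X_train, y_train)

lr_strong_reg.fit(X_train, y_train)

lr_weak_reg.score(X_train, y_train)

1.0

lr_strong_reg.score(X_train, y_train)

0.92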
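The drop in training accuracy comes from the penalty pulling the coefficients toward zero. A minimal sketch, assuming synthetic data from `make_classification` as a stand-in for the unspecified `X_train`, `y_train`, compares the size of the learned coefficients for the two values of C used above:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in for X_train, y_train (assumption: the slides' data is not given)
X_train, y_train = make_classification(n_samples=300, n_features=10, random_state=0)

# C is the inverse of the regularization strength
lr_weak_reg = LogisticRegression(C=100, max_iter=1000).fit(X_train, y_train)
lr_strong_reg = LogisticRegression(C=0.01, max_iter=1000).fit(X_train, y_train)

# Euclidean norm of the coefficient vector: stronger regularization -> smaller coefficients
weak_norm = np.linalg.norm(lr_weak_reg.coef_)
strong_norm = np.linalg.norm(lr_strong_reg.coef_)
print(f"C=100:  ||w|| = {weak_norm:.3f}, train acc = {lr_weak_reg.score(X_train, y_train):.3f}")
print(f"C=0.01: ||w|| = {strong_norm:.3f}, train acc = {lr_strong_reg.score(X_train, y_train):.3f}")
```

The exact numbers depend on the synthetic data; the point is that the strongly regularized model has much smaller coefficients, which limits how closely it can fit the training set.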

$\text{regularized loss} = \text{original loss} + \text{penalty on large coefficients}$

- more regularization: lower training accuracy
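The formula can be written out directly. As a sketch, assuming an L2 penalty and the scaling described in the scikit-learn user guide for `LogisticRegression` (½‖w‖² plus C times the summed logistic loss, intercept not penalized), with labels recoded to −1/+1; the helpers `original_loss` and `regularized_loss` are hypothetical names for illustration:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

def original_loss(w, b, X, y_pm):
    """Summed logistic loss; y_pm holds labels coded as -1/+1."""
    margins = y_pm * (X @ w + b)
    return np.sum(np.logaddexp(0.0, -margins))  # numerically stable log(1 + exp(-margin))

def regularized_loss(w, b, X, y_pm, C=1.0):
    """0.5 * ||w||^2 + C * original loss (the intercept b is not penalized)."""
    return 0.5 * np.dot(w, w) + C * original_loss(w, b, X, y_pm)

# Sanity check: scikit-learn's fitted coefficients should (approximately) minimize this objective
X, y = make_classification(n_samples=200, n_features=5, random_state=0)
y_pm = 2 * y - 1  # map {0, 1} labels to {-1, +1}
C = 0.5
clf = LogisticRegression(C=C, tol=1e-10, max_iter=10_000).fit(X, y)
w_hat, b_hat = clf.coef_.ravel(), clf.intercept_[0]

best = regularized_loss(w_hat, b_hat, X, y_pm, C)
rng = np.random.default_rng(0)
nudged = [regularized_loss(w_hat + 0.01 * rng.standard_normal(w_hat.size), b_hat, X, y_pm, C)
          for _ in range(20)]
print(best <= min(nudged))  # True means no random nudge of w lowered the objective
```

Note that C multiplies the data term rather than the penalty, which is why a smaller C means relatively more regularization.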

How does regularization affect test accuracy?

lr_weak_reg.score(X_test, y_test)

0.86

lr_strong_reg.score(X_test, y_test)

0.88


- more regularization: often higher test accuracy, since it reduces overfitting; too much regularization can underfit and lower test accuracy
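The trade-off is easiest to see by sweeping C and scoring both splits. A sketch, assuming synthetic data with many uninformative features and a random train/test split (the slides' data is not given), so the numbers will differ from the ones above:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic data: 50 features, only 5 informative, which invites overfitting
X, y = make_classification(n_samples=200, n_features=50, n_informative=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

results = {}
for C in [0.001, 0.01, 0.1, 1, 10, 100]:
    lr = LogisticRegression(C=C, max_iter=5000).fit(X_train, y_train)
    # (training accuracy, test accuracy) for this regularization strength
    results[C] = (lr.score(X_train, y_train), lr.score(X_test, y_test))
    print(f"C={C:<6} train={results[C][0]:.3f} test={results[C][1]:.3f}")
```

Training accuracy typically rises as C grows (weaker regularization), while test accuracy tends to peak somewhere in the middle. In practice C is chosen on a validation set or by cross-validation, not on the test set.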

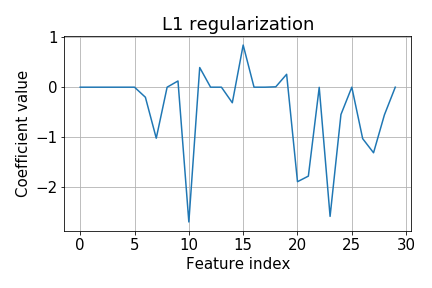

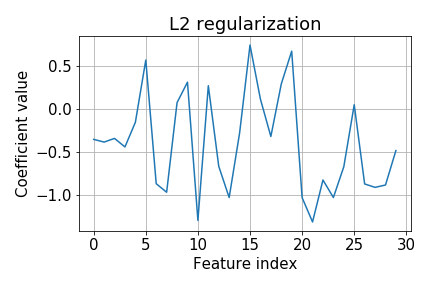

L1 vs. L2 regularization

- Lasso = linear regression with L1 regularization

- Ridge = linear regression with L2 regularization

- For other models, such as logistic regression, we just say L1 regularization, L2 regularization, etc.
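One practical difference between the two penalties is sparsity. A sketch, assuming synthetic regression data from `make_regression` and an illustrative `alpha=1.0` for both models, counts coefficients that are exactly zero:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge

# 20 features, only 3 of which actually influence the target
X, y = make_regression(n_samples=200, n_features=20, n_informative=3, noise=5.0, random_state=0)

lasso = Lasso(alpha=1.0).fit(X, y)  # linear regression + L1 penalty
ridge = Ridge(alpha=1.0).fit(X, y)  # linear regression + L2 penalty

# L1 tends to set many coefficients exactly to zero; L2 only shrinks them
print("Lasso zero coefficients:", np.sum(lasso.coef_ == 0))
print("Ridge zero coefficients:", np.sum(ridge.coef_ == 0))
```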

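import matplotlib.pyplot as plt

lr_L1 = LogisticRegression(solver='liblinear', penalty='l1') # the default solver does not support L1; 'liblinear' does

lr_L2 = LogisticRegression() # penalty='l2' by default

lr_L1.fit(X_train, y_train)

lr_L2.fit(X_train, y_train)

plt.plot(lr_L1.coef_.flatten(), label='L1') # one point per feature coefficient

plt.plot(lr_L2.coef_.flatten(), label='L2')

plt.legend()

plt.show()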
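Besides plotting the coefficients, the sparsity can be checked numerically. A sketch, assuming synthetic data in place of the slides' `X_train`, `y_train` and an illustrative `C=0.05` (chosen so the L1 effect is clearly visible), counts exactly-zero coefficients for each penalty:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in: 20 features, only 3 informative, no redundant ones
X_train, y_train = make_classification(n_samples=300, n_features=20, n_informative=3,
                                       n_redundant=0, random_state=0)

# Same regularization strength for both; liblinear supports the L1 penalty
lr_L1 = LogisticRegression(solver='liblinear', penalty='l1', C=0.05).fit(X_train, y_train)
lr_L2 = LogisticRegression(C=0.05).fit(X_train, y_train)  # penalty='l2' by default

n_zero_L1 = np.sum(lr_L1.coef_ == 0)
n_zero_L2 = np.sum(lr_L2.coef_ == 0)
print("L1 zero coefficients:", n_zero_L1, "of", lr_L1.coef_.size)
print("L2 zero coefficients:", n_zero_L2, "of", lr_L2.coef_.size)
```

With L1, many of the uninformative features typically get a coefficient of exactly zero, so the model doubles as a feature selector; L2 shrinks coefficients but leaves them nonzero.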

L2 vs. L1 regularization
