Dropping Data

Feature Engineering with PySpark

John Hogue

Lead Data Scientist, General Mills

Where can data go bad?

- Recorded wrong

- Unique events

- Formatted incorrectly

- Duplications

- Missing

- Not relevant

Dropping Columns

df.select(['NO', 'UNITNUMBER', 'CLASS']).show()

+----+----------+-----+

| NO|UNITNUMBER|CLASS|

+----+----------+-----+

| 1| null| SF|

| 156| A8| SF|

| 157| 207| SF|

| 158| 701G| SF|

| 159| 36| SF|

Multiple fields are not needed for our analysis

'NO'auto-generated record number'UNITNUMBER'irrelevant data'CLASS'all constant

Dropping Columns

drop(*cols)

*cols– a column name to drop or a list of column names to drop.- Returns a new DataFrame that drops the specified

# List of columns to drop cols_to_drop = ['NO', 'UNITNUMBER', 'CLASS']# Drop the columns df = df.drop(*cols_to_drop)

Text Filtering

where(condition)- condition – a Column of

types.BooleanTypeor a string of SQL expression. - Filters dataframe where the condition is true

- condition – a Column of

like(other)- other – a SQL LIKE pattern

- Returns a boolean Column

~- The NOT condition

df = df.where(~df['POTENTIALSHORTSALE'].like('Not Disclosed'))

Outlier Filtering

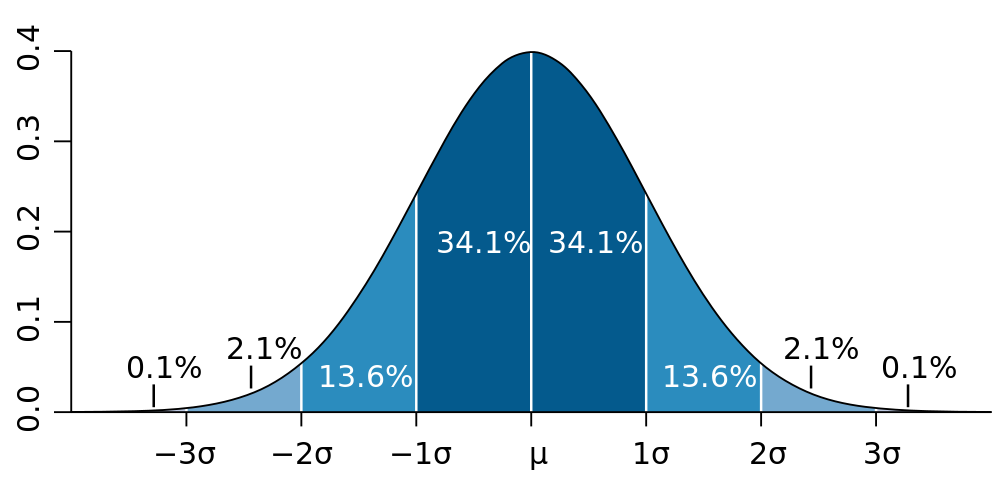

Filter data to within three standard deviations (3σ) of the mean (μ)

Value Filtering Example

# Calculate values used for filtering std_val = df.agg({'SALESCLOSEPRICE': 'stddev'}).collect()[0][0] mean_val = df.agg({'SALESCLOSEPRICE': 'mean'}).collect()[0][0]# Create three standard deviation (μ ± 3σ) upper and lower bounds for data hi_bound = mean_val + (3 * std_val) low_bound = mean_val - (3 * std_val)# Use where() to filter the DataFrame between values df = df.where((df['LISTPRICE'] < hi_bound) & (df['LISTPRICE'] > low_bound))

Dropping NA's or NULLs

DataFrame.dropna()

how: ‘any’ or ‘all’. If ‘any’, drop a record if it contains any nulls. If ‘all’, drop a record only if all its values are null.thresh: int, default None If specified, drop records that have less than thresh non-null values. This overwrites the how parameter.subset: optional list of column names to consider.

Dropping NA's or NULLs

# Drop any records with NULL values df = df.dropna()# drop records if both LISTPRICE and SALESCLOSEPRICE are NULL df = df.dropna(how='all', subset['LISTPRICE', 'SALESCLOSEPRICE '])# Drop records where at least two columns have NULL values df = df.dropna(thresh=2)

Dropping Duplicates

What is a duplicate?

- Two or more records contains all the same information

- After dropping columns or joining datasets, check for duplicates

dropDuplicates()

- Can be run across entire DataFrame or a list of columns

- In PySpark there is no order for which record is removed

# Entire DataFrame df.dropDuplicates()# Check only a column list df.dropDuplicates(['streetaddress'])

Let's practice!

Feature Engineering with PySpark