Getting More Data

Feature Engineering with PySpark

John Hogue

Lead Data Scientist, General Mills

Thoughts on External Data Sets

PROS

- Add important predictors

- Supplement/replace values

- Cheap or easy to obtain

CONS

- May bog down the analysis

- Easy to induce data leakage

- Requires becoming a subject matter expert on the new data set

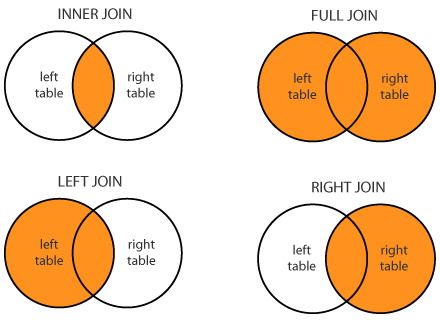

About Joins

Orienting our data directions

- Left: our starting data set

- Right: the new data set to incorporate
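To make the left/right orientation concrete, here is a minimal plain-Python sketch of a left join. It is an illustration of the semantics, not the PySpark API: every row of the left (starting) data set is kept, and columns from the right (new) data set are filled with None where no key matches. The function `left_join` and the `sales`/`holidays` rows are hypothetical example names and data.

```python
def left_join(left, right, left_key, right_key):
    """Keep every left row; attach matching right rows or None-filled columns."""
    # Index the right data set by its join key (one key may match several rows).
    index = {}
    for row in right:
        index.setdefault(row[right_key], []).append(row)
    right_cols = {col for row in right for col in row}
    result = []
    for lrow in left:
        matches = index.get(lrow[left_key])
        if matches:
            for rrow in matches:
                # Right-side values overwrite same-named left columns here
                # (unlike PySpark, which keeps both columns).
                result.append({**lrow, **rrow})
        else:
            # No match: the left row survives, right-side columns become None.
            result.append({**lrow, **dict.fromkeys(right_cols)})
    return result

# Hypothetical data: left = starting data set, right = new data set.
sales = [
    {"id": 1, "OFFMARKETDATE": "2012-01-02"},
    {"id": 2, "OFFMARKETDATE": "2012-01-03"},
]
holidays = [{"dt": "2012-01-02", "nm": "New Year Day"}]

joined = left_join(sales, holidays, "OFFMARKETDATE", "dt")
```

Swapping the arguments (holidays on the left) would instead keep every holiday row and drop the unmatched sale, which is why it matters which data set you treat as "left".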

PySpark DataFrame Joins

DataFrame.join(
    other,     # Other DataFrame to merge
    on=None,   # The keys to join on
    how=None)  # Type of join to perform (default is 'inner')
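The `how` argument decides which unmatched rows survive the join. As a rough plain-Python analogue (not how Spark implements joins), the hypothetical `join` function below supports only `'inner'`, the default as in PySpark, and `'left'`, so the difference in row counts is easy to see on tiny made-up data.

```python
def join(left, right, left_key, right_key, how="inner"):
    """Plain-Python analogue of a keyed join supporting 'inner' and 'left'."""
    if how not in ("inner", "left"):
        raise ValueError(f"unsupported join type: {how!r}")
    right_cols = {col for row in right for col in row}
    out = []
    for lrow in left:
        # Simple nested-loop match; fine for a sketch, slow for large data.
        matches = [r for r in right if r[right_key] == lrow[left_key]]
        for rrow in matches:
            out.append({**lrow, **rrow})
        if not matches and how == "left":
            # A left join keeps unmatched left rows with empty right-side columns.
            out.append({**lrow, **dict.fromkeys(right_cols)})
    return out

left = [{"k": 1}, {"k": 2}, {"k": 3}]
right = [{"key": 1, "v": "a"}, {"key": 3, "v": "c"}]

inner_rows = join(left, right, "k", "key")            # default how="inner"
left_rows = join(left, right, "k", "key", how="left")
```

The inner join returns two rows (k = 1 and 3); the left join returns all three, with `v` set to None for k = 2.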

PySpark Join Example

# Inspect dataframe head
hdf.show(2)

+----------+--------------------+
|        dt|                  nm|
+----------+--------------------+
|2012-01-02|        New Year Day|
|2012-01-16|Martin Luther Kin...|
+----------+--------------------+
only showing top 2 rows

# Specify join condition
cond = [df['OFFMARKETDATE'] == hdf['dt']]

# Left join hdf onto df (how must be passed by keyword after on=)
df = df.join(hdf, on=cond, how='left')

# How many sales occurred on bank holidays?
df.where(~df['nm'].isNull()).count()

0
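Counting rows where `nm` is not null after a left join answers "how many left rows found a match", assuming `nm` is never null in `hdf` and each `dt` appears once. The same number can be checked without joining by testing key membership; the plain-Python sketch below does that with made-up dates (`count_matches` is a hypothetical helper). It also shows one general pitfall worth ruling out when a count comes back 0: keys stored as different types, here strings versus `date` objects, never compare equal.

```python
from datetime import date

def count_matches(left_keys, right_keys):
    """Count left rows whose key appears among the right-side keys."""
    lookup = set(right_keys)
    return sum(1 for k in left_keys if k in lookup)

# Hypothetical data: off-market dates and holiday dates.
off_market = [date(2012, 1, 2), date(2012, 1, 3), date(2012, 1, 16)]
holiday_dates = [date(2012, 1, 2), date(2012, 1, 16)]

n = count_matches(off_market, holiday_dates)  # two dates overlap

# Same calendar days, but as strings: no key compares equal to a date object.
n_mismatched = count_matches(off_market, ["2012-01-02", "2012-01-16"])
```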

Spark SQL Join

- Apply SQL to your dataframe

# Register the dataframes as temp views
df.createOrReplaceTempView("df")
hdf.createOrReplaceTempView("hdf")

# Write a SQL Statement
sql_df = spark.sql("""
SELECT
    *
FROM df
LEFT JOIN hdf
ON df.OFFMARKETDATE = hdf.dt
""")

Let's Join Some Data!
