Time Features

Feature Engineering with PySpark

John Hogue

Lead Data Scientist, General Mills

The Cyclical Nature of Things

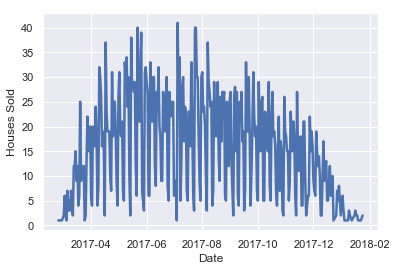

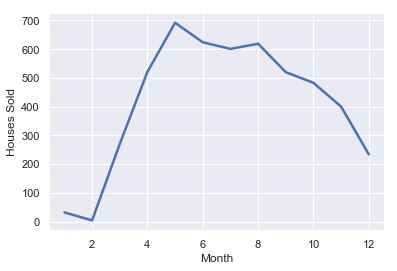

Choosing the Right Level

Choosing the Right Level

Treating Date Fields as Dates...

from pyspark.sql.functions import to_date

# Cast the data type to Date

df = df.withColumn('LISTDATE', to_date('LISTDATE'))

# Inspect the field

df[['LISTDATE']].show(2)

+----------+

| LISTDATE|

+----------+

|2017-07-14|

|2017-10-08|

+----------+

only showing top 2 rows

Time Components

from pyspark.sql.functions import year, month

# Create a new column of year number

df = df.withColumn('LIST_YEAR', year('LISTDATE'))

# Create a new column of month number

df = df.withColumn('LIST_MONTH', month('LISTDATE'))

from pyspark.sql.functions import dayofmonth, weekofyear

# Create new columns of the day number within the month

df = df.withColumn('LIST_DAYOFMONTH', dayofmonth('LISTDATE'))

# Create new columns of the week number within the year

df = df.withColumn('LIST_WEEKOFYEAR', weekofyear('LISTDATE'))

Basic Time Based Metrics

from pyspark.sql.functions import datediff

# Calculate difference between two date fields

df.withColumn('DAYSONMARKET', datediff('OFFMARKETDATE', 'LISTDATE'))

Lagging Features

window()

Returns a record based off a group of records

lag(col, count=1)

Returns the value that is offset by rows before the current row

Lagging Features, the PySpark Way

from pyspark.sql.functions import lag from pyspark.sql.window import Window# Create Window w = Window().orderBy(m_df['DATE'])# Create lagged column m_df = m_df.withColumn('MORTGAGE-1wk', lag('MORTGAGE', count=1).over(w))# Inspect results m_df.show(3)

+----------+------------+----------------+

| DATE| MORTGAGE| MORTGAGE-1wk|

+----------+------------+----------------+

|2013-10-10| 4.23| null|

|2013-10-17| 4.28| 4.23|

|2013-10-24| 4.13| 4.28|

+----------+------------+----------------+

only showing top 3 rows

It's TIME to practice!

Feature Engineering with PySpark