Generalization Error

Machine Learning with Tree-Based Models in Python

Elie Kawerk

Data Scientist

Supervised Learning - Under the Hood

- Supervised Learning: $y = f(x)$, where $f$ is unknown.

Goals of Supervised Learning

Find a model $\hat{f}$ that best approximates $f$: $\hat{f} \approx f$

$\hat{f}$ can be a Logistic Regression, a Decision Tree, a Neural Network, ...

Discard noise as much as possible.

End goal: $\hat{f}$ should achieve a low predictive error on unseen datasets.

Difficulties in Approximating $f$

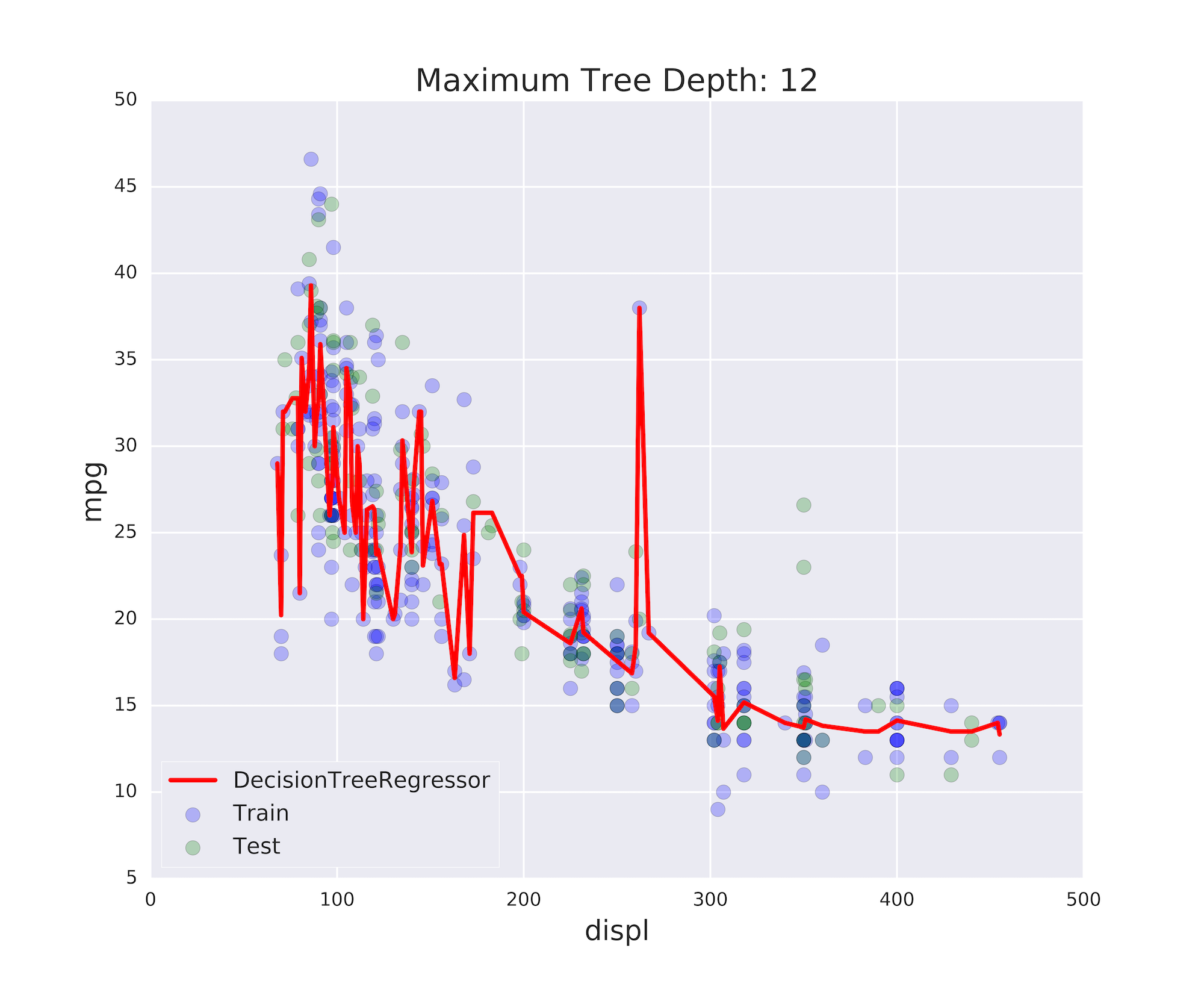

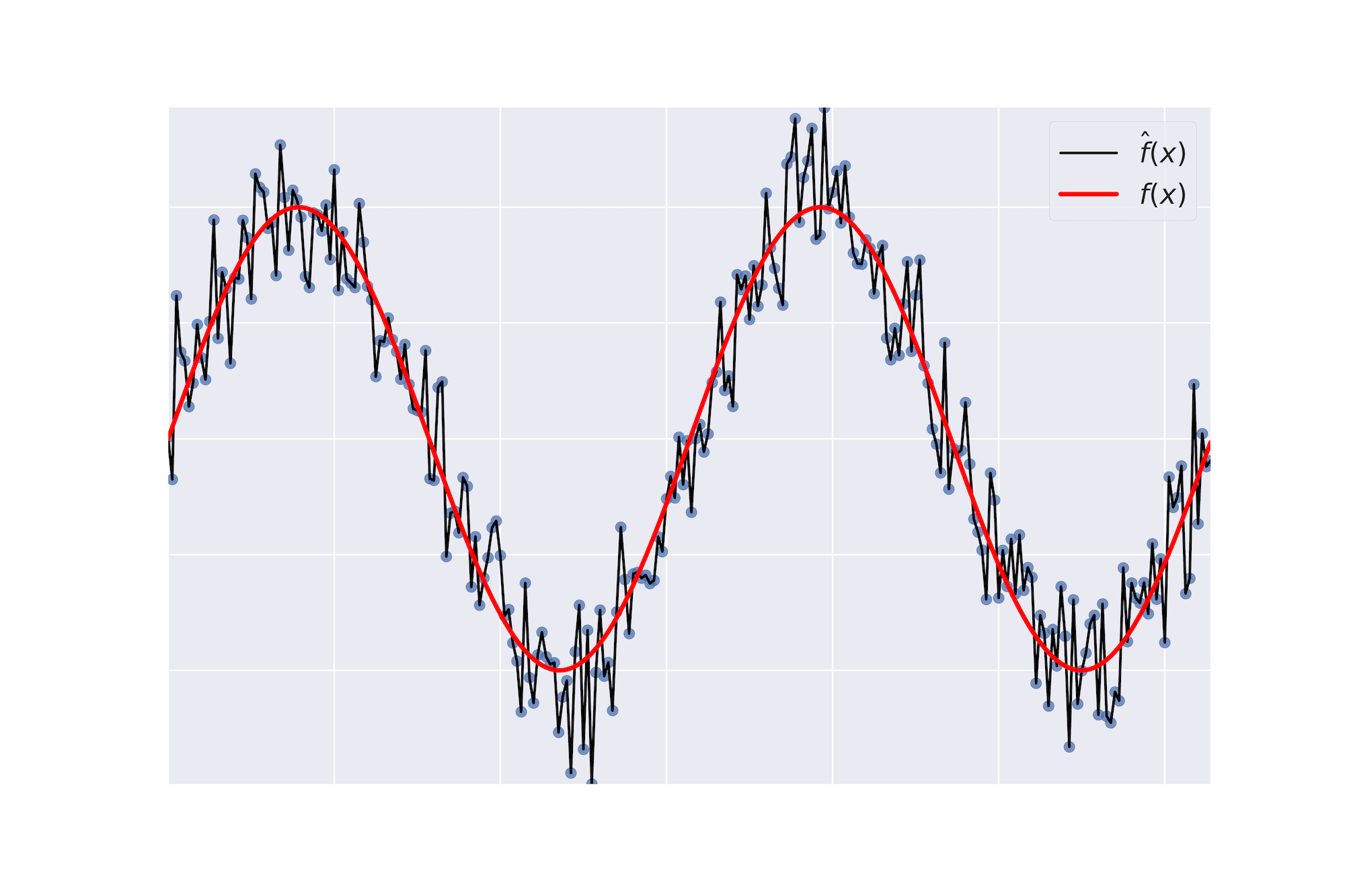

Overfitting:

$\hat{f}(x)$ fits the training set noise.

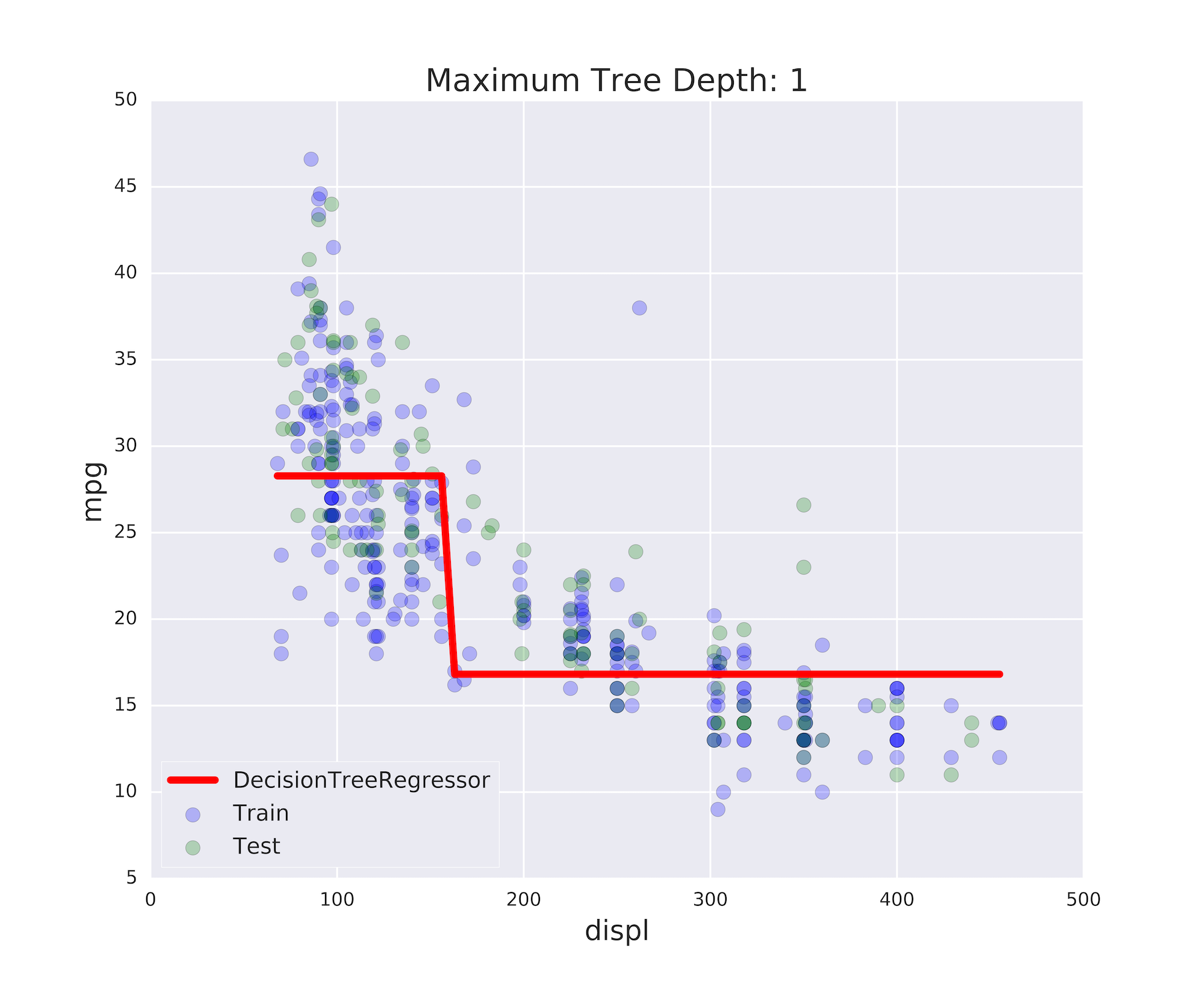

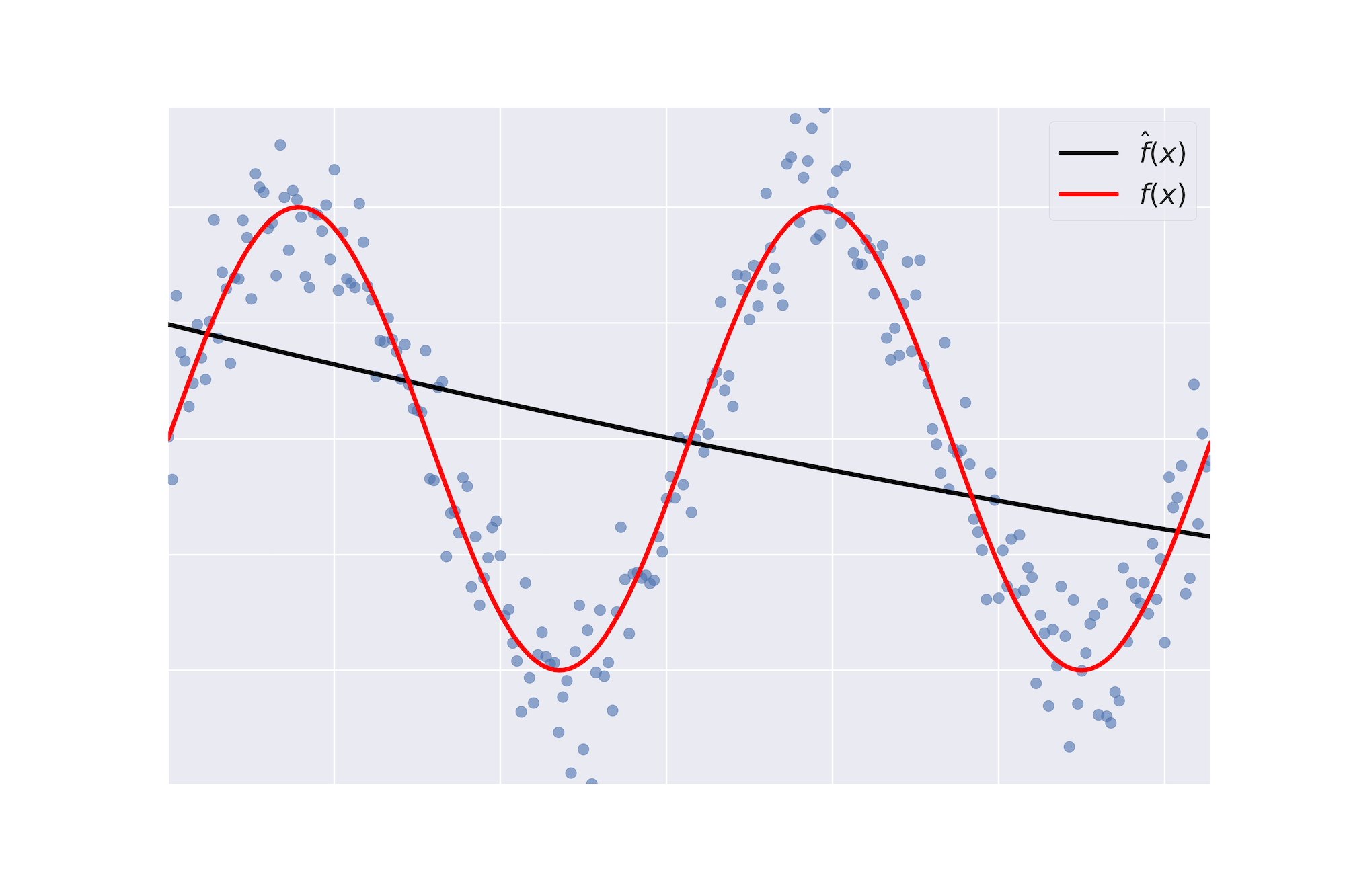

Underfitting:

$\hat{f}$ is not flexible enough to approximate $f$.

Overfitting

Underfitting

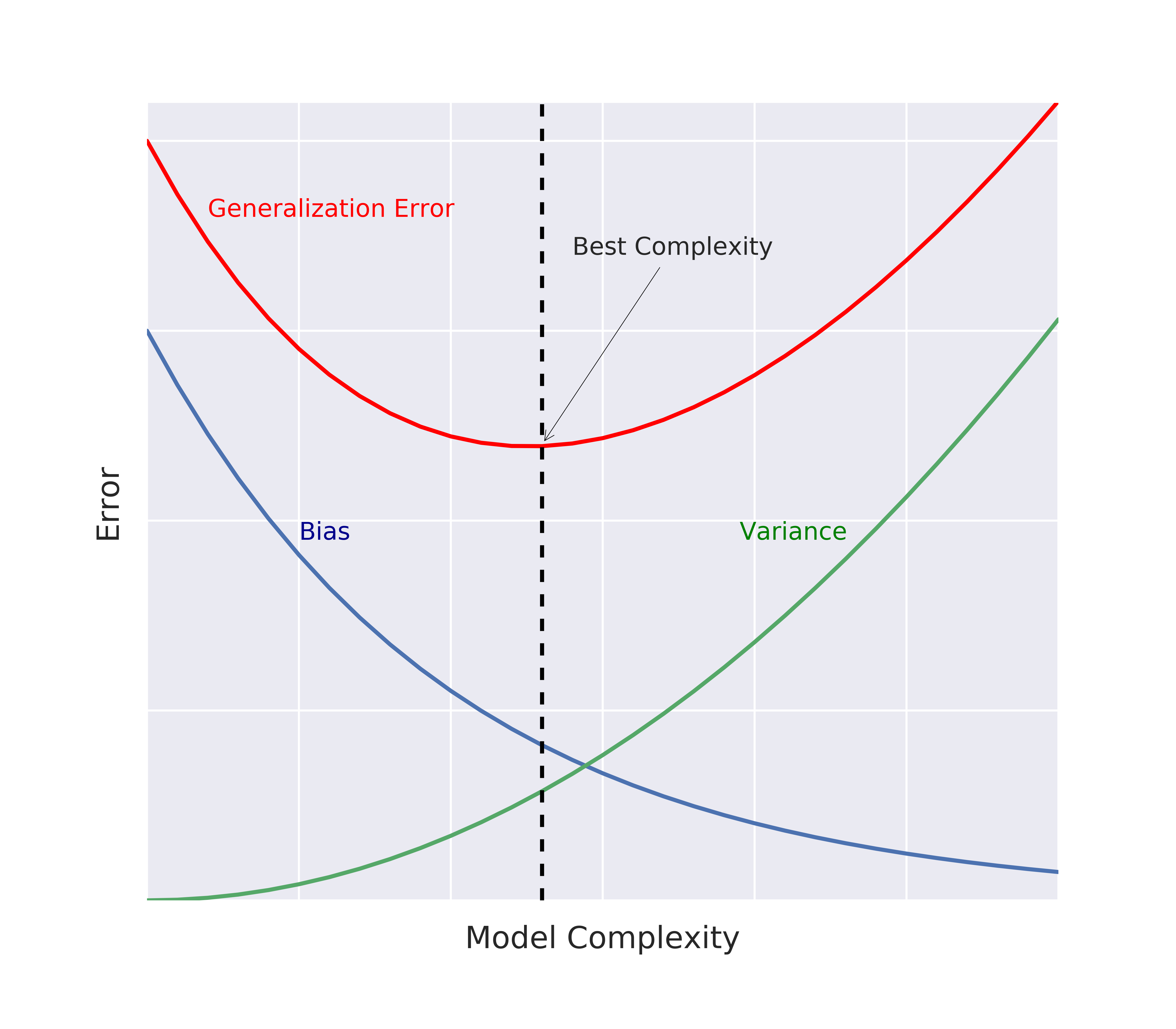

Generalization Error

Generalization Error of $\hat{f}$: measures how well $\hat{f}$ generalizes to unseen data.

It can be decomposed as follows:

Generalization Error of $\hat{f}$ $= \text{bias}^2 + \text{variance} + \text{irreducible error}$
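
As a hedged illustration for the squared-error case: assume the observed target is $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has mean zero and variance $\sigma^2$ and is independent of the training set. The noise model and the symbols $\varepsilon$ and $\sigma^2$ are assumptions for this sketch, not part of the original slides. Taking the expectation over training sets and noise at a fixed $x$, the three terms read:

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible error}}
$$

The last term does not depend on $\hat{f}$, which is why no choice of model can remove it.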

Bias

- Bias: error term that tells you how much, on average, $\hat{f}$ differs from $f$. High-bias models tend to underfit.

Variance

- Variance: error term that tells you how much $\hat{f}$ varies across different training sets. High-variance models tend to overfit.
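
The two definitions above can be checked numerically. The following is a minimal sketch, not the course's own code: it assumes a synthetic target `f(x) = sin(2πx)`, Gaussian noise, and polynomial fits as stand-ins for models of low and high flexibility. The helper name `estimate_bias2_variance` and all its parameters are hypothetical. It refits the model on many freshly sampled training sets, then measures how far the average prediction is from `f` (bias squared) and how much predictions scatter around that average (variance).

```python
import numpy as np

rng = np.random.default_rng(0)


def f(x):
    """Assumed true function (synthetic; unknown in a real problem)."""
    return np.sin(2 * np.pi * x)


def estimate_bias2_variance(degree, n_sets=200, n_train=30, noise_sd=0.3):
    """Estimate bias^2 and variance of a degree-`degree` polynomial fit."""
    x_test = np.linspace(0.05, 0.95, 50)
    preds = np.empty((n_sets, x_test.size))
    for i in range(n_sets):
        # Draw a new noisy training set from the same distribution.
        x_train = rng.uniform(0, 1, n_train)
        y_train = f(x_train) + rng.normal(0, noise_sd, n_train)
        # Least-squares polynomial fit (the domain is rescaled internally).
        model = np.polynomial.Polynomial.fit(x_train, y_train, degree)
        preds[i] = model(x_test)
    mean_pred = preds.mean(axis=0)
    # Bias^2: squared gap between the average prediction and f.
    bias2 = np.mean((mean_pred - f(x_test)) ** 2)
    # Variance: spread of predictions across training sets.
    variance = np.mean(preds.var(axis=0))
    return bias2, variance


for degree in (1, 3, 9):
    b2, var = estimate_bias2_variance(degree)
    print(f"degree={degree}: bias^2={b2:.3f}, variance={var:.3f}")
```

Under these assumptions, the straight line (degree 1) should show the larger bias term, while the degree-9 polynomial should show the larger variance term.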

Model Complexity

Model Complexity: sets the flexibility of $\hat{f}$.

Examples: maximum tree depth, minimum samples per leaf, ...

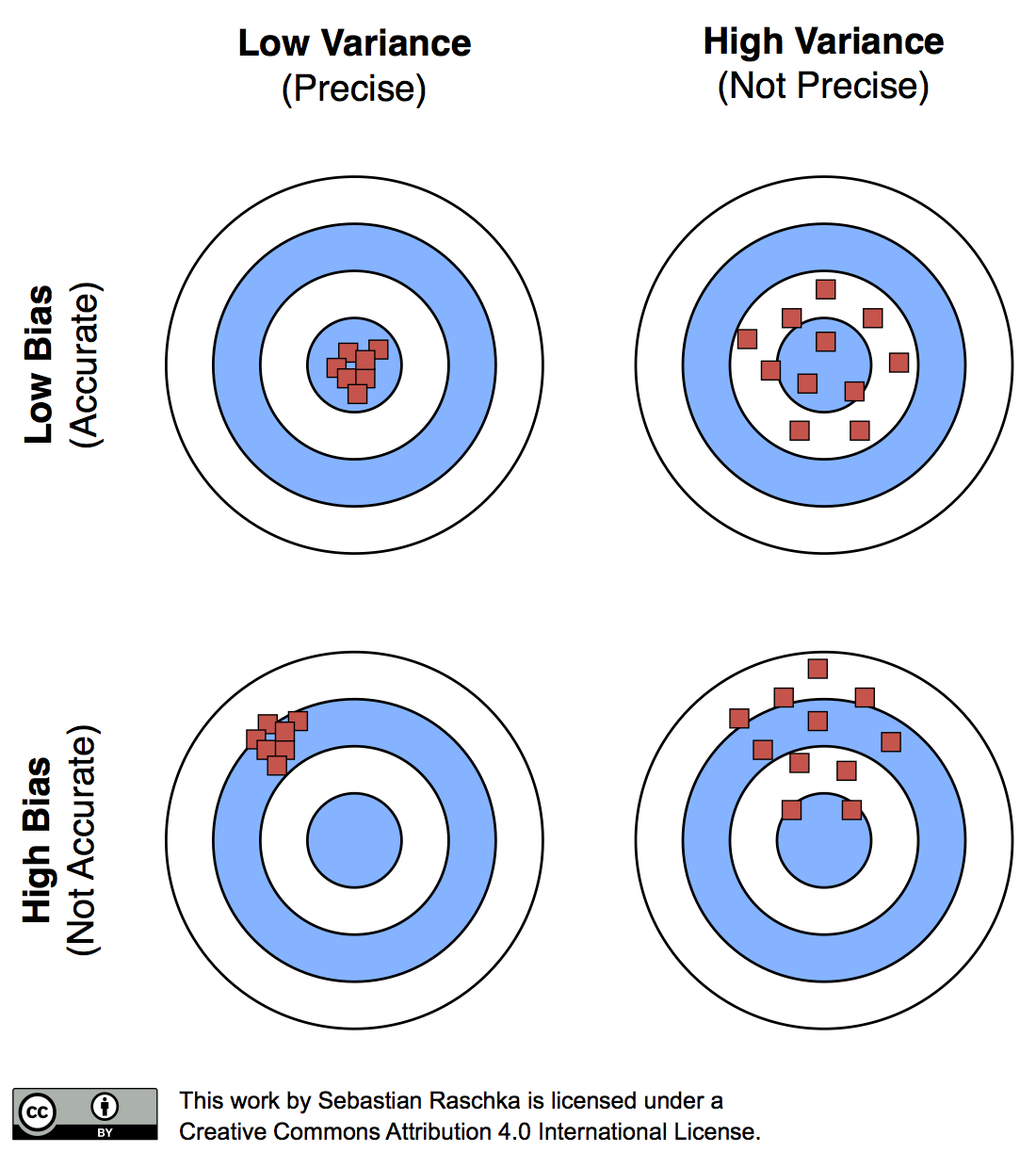
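
To make the complexity knobs named above concrete, here is a small sketch using scikit-learn's `DecisionTreeRegressor` with its `max_depth` and `min_samples_leaf` parameters. The data set is synthetic and the helper `train_test_mse` is hypothetical; both are assumptions for illustration only. Comparing the training error with the error on a held-out test set gives a rough view of underfitting and overfitting.

```python
import numpy as np
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic data (assumption): a noisy sine curve with one feature.
rng = np.random.default_rng(1)
X = rng.uniform(0, 1, size=(400, 1))
y = np.sin(2 * np.pi * X[:, 0]) + rng.normal(0, 0.3, size=400)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=1
)


def train_test_mse(max_depth, min_samples_leaf=1):
    """Fit a tree with the given complexity; return (train MSE, test MSE)."""
    tree = DecisionTreeRegressor(
        max_depth=max_depth,
        min_samples_leaf=min_samples_leaf,
        random_state=1,
    )
    tree.fit(X_train, y_train)
    train_mse = mean_squared_error(y_train, tree.predict(X_train))
    test_mse = mean_squared_error(y_test, tree.predict(X_test))
    return train_mse, test_mse


# max_depth=None lets the tree grow until every leaf is pure.
for depth in (1, 4, None):
    tr, te = train_test_mse(depth)
    print(f"max_depth={depth}: train MSE={tr:.3f}, test MSE={te:.3f}")

# Requiring more samples per leaf restrains an otherwise unlimited tree.
tr, te = train_test_mse(None, min_samples_leaf=20)
print(f"min_samples_leaf=20: train MSE={tr:.3f}, test MSE={te:.3f}")
```

A training error that keeps falling while the test error stops improving is the usual sign that added complexity is fitting noise.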

Bias-Variance Tradeoff

Bias-Variance Tradeoff: A Visual Explanation

Let's practice!
