Hyperparameter tuning in caret

Hyperparameter Tuning in R

Dr. Shirin Elsinghorst

Senior Data Scientist

Voter dataset from US 2016 election

- Split into training and test sets
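The slides do not show how the split was made. The sketch below is one common way to do it with caret's `createDataPartition()`. It assumes a hypothetical full data frame `voters_data` (simulated here with only three of the 42 columns) and an assumed 80/20 split ratio. Because `createDataPartition()` samples within each outcome class, the rare "Did not vote" class keeps roughly the same share in both sets.

```r
library(caret)

# Hypothetical stand-in for the full voter data (simulated, not the real survey):
# a skewed binary outcome plus two survey-style integer predictors.
set.seed(42)
n <- 1000
voters_data <- data.frame(
  turnout16_2016 = factor(sample(c("Did not vote", "Voted"), n,
                                 replace = TRUE, prob = c(0.05, 0.95))),
  RIGGED_SYSTEM_1_2016 = sample(1:4, n, replace = TRUE),
  track_2016 = sample(1:3, n, replace = TRUE)
)

# Stratified split on the outcome; p = 0.8 is an assumed ratio.
train_idx <- createDataPartition(voters_data$turnout16_2016, p = 0.8, list = FALSE)
voters_train_data <- voters_data[train_idx, ]
voters_test_data  <- voters_data[-train_idx, ]

# Class proportions should be similar in both sets.
prop.table(table(voters_train_data$turnout16_2016))
prop.table(table(voters_test_data$turnout16_2016))
```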

library(tidyverse)

glimpse(voters_train_data)

Observations: 6,692

Variables: 42

$ turnout16_2016 <chr> "Did not vote", "Did not vote", "Did not vote", "Did not vote", ...

$ RIGGED_SYSTEM_1_2016 <int> 2, 2, 3, 2, 2, 3, 3, 1, 2, 3, 4, 4, 4, 3, 1, 2, 2, 2, 3, 2, 1, 2, 3, 2, 1, ...

$ RIGGED_SYSTEM_2_2016 <int> 3, 3, 2, 2, 3, 3, 2, 2, 1, 2, 4, 2, 3, 2, 3, 4, 3, 2, 2, 2, 4, 1, 2, 2, 3, ...

$ RIGGED_SYSTEM_3_2016 <int> 1, 1, 3, 1, 1, 1, 2, 1, 1, 2, 1, 2, 1, 2, 1, 1, 1, 2, 2, 3, 1, 3, 2, 1, 1, ...

$ RIGGED_SYSTEM_4_2016 <int> 2, 1, 2, 2, 2, 2, 2, 2, 1, 3, 3, 1, 3, 3, 1, 3, 3, 2, 1, 1, 1, 2, 1, 2, 2, ...

$ RIGGED_SYSTEM_5_2016 <int> 1, 2, 2, 2, 2, 3, 1, 1, 2, 3, 2, 2, 1, 3, 1, 1, 2, 2, 1, 2, 1, 2, 2, 2, 1, ...

$ RIGGED_SYSTEM_6_2016 <int> 1, 1, 2, 1, 2, 2, 2, 1, 2, 2, 1, 3, 1, 3, 1, 1, 1, 2, 1, 1, 1, 2, 2, 2, 1, ...

$ track_2016 <int> 2, 2, 2, 1, 2, 2, 2, 2, 2, 1, 2, 1, 2, 1, 1, 2, 2, 3, 2, 2, 2, 2, 3, 2, 2, ...

...

Let's train another model with caret

- Stochastic Gradient Boosting

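library(caret)
library(tictoc)

# 3-fold cross-validation, repeated 5 times
fitControl <- trainControl(method = "repeatedcv", number = 3, repeats = 5)

tic()          # start the timer
set.seed(42)   # make the resampling reproducible
gbm_model_voters <- train(turnout16_2016 ~ .,
                          data = voters_train_data,
                          method = "gbm",
                          trControl = fitControl,
                          verbose = FALSE)  # passed on to gbm: silence its training log
toc()          # print the elapsed time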

32.934 sec elapsed
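Without a `tuneGrid`, caret builds its own candidate grid. To our understanding, caret's default for `method = "gbm"` (with the default `tuneLength = 3`) crosses `interaction.depth` 1 to 3 with `n.trees` 50, 100 and 150, and holds `shrinkage` and `n.minobsinnode` fixed, which matches the first row and the "held constant" lines in the printout. The sketch below rebuilds that grid and uses `createMultiFolds()` on a simulated outcome `y` (a hypothetical stand-in for `turnout16_2016`) to show how many resamples `number = 3, repeats = 5` produces.

```r
library(caret)

# Assumed reconstruction of caret's default gbm grid for tuneLength = 3.
default_grid <- expand.grid(interaction.depth = 1:3,
                            n.trees = c(50, 100, 150),
                            shrinkage = 0.1,
                            n.minobsinnode = 10)
nrow(default_grid)  # 9 candidate settings

# Simulated, skewed outcome (hypothetical data, not the voter survey).
set.seed(42)
y <- factor(sample(c("Did not vote", "Voted"), 300,
                   replace = TRUE, prob = c(0.05, 0.95)))

# 3 folds x 5 repeats: the same scheme as trainControl(number = 3, repeats = 5).
folds <- createMultiFolds(y, k = 3, times = 5)
length(folds)       # 15 resamples, each candidate is scored on all of them
```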


gbm_model_voters

Stochastic Gradient Boosting

...

Resampling results across tuning parameters:

interaction.depth n.trees Accuracy Kappa

1 50 0.9604603 -0.0001774346

...

Tuning parameter 'shrinkage' was held constant at a value of 0.1

Tuning parameter 'n.minobsinnode' was held constant at a value of 10

Accuracy was used to select the optimal model using the largest value.

The final values used for the model were n.trees = 50, interaction.depth = 1, shrinkage = 0.1 and n.minobsinnode = 10.
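An accuracy of about 0.96 paired with a Kappa near 0 is consistent with a model that almost always predicts the majority class: Kappa corrects accuracy for the agreement expected by chance. The worked example below uses made-up counts for illustration only (96 of 100 people voted, and every prediction is "Voted") and computes Cohen's Kappa by hand in base R.

```r
# Made-up counts for illustration: 96 voters, 4 non-voters,
# and a "model" that predicts "Voted" for everyone.
truth <- factor(c(rep("Voted", 96), rep("Did not vote", 4)),
                levels = c("Did not vote", "Voted"))
pred  <- factor(rep("Voted", 100), levels = levels(truth))

cm <- table(pred, truth)
n  <- sum(cm)

accuracy   <- sum(diag(cm)) / n                     # observed agreement p_o
expected   <- sum(rowSums(cm) * colSums(cm)) / n^2  # chance agreement p_e
kappa_stat <- (accuracy - expected) / (1 - expected)

accuracy    # 0.96
kappa_stat  # 0
```

High accuracy alone therefore says little on this outcome; Kappa shows that the model adds almost nothing over always guessing "Voted".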

Cartesian grid search with caret

- Define a Cartesian grid of hyperparameters:

# every combination of the listed values (3 x 3 = 9 settings)
man_grid <- expand.grid(n.trees = c(100, 200, 250),
                        interaction.depth = c(1, 4, 6),
                        shrinkage = 0.1,
                        n.minobsinnode = 10)

fitControl <- trainControl(method = "repeatedcv", number = 3, repeats = 5)

tic()
set.seed(42)
gbm_model_voters_grid <- train(turnout16_2016 ~ .,
                               data = voters_train_data,
                               method = "gbm",
                               trControl = fitControl,
                               verbose = FALSE,
                               tuneGrid = man_grid)  # evaluate every row of man_grid
toc()

85.745 sec elapsed
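A quick count helps explain the longer run. `man_grid` holds nine settings, the same number as caret's default gbm grid (to our understanding, 3 depths x 3 tree counts), so the roughly 2.6-fold increase in time (85.7 s vs 32.9 s) most likely comes from the larger `n.trees` values and deeper trees rather than from more combinations. The arithmetic below gives an upper bound on the fits; caret can score several `n.trees` values from one gbm fit, so the real number may be lower.

```r
# Grid from the slide.
man_grid <- expand.grid(n.trees = c(100, 200, 250),
                        interaction.depth = c(1, 4, 6),
                        shrinkage = 0.1,
                        n.minobsinnode = 10)

n_candidates <- nrow(man_grid)  # 9 settings
n_resamples  <- 3 * 5           # 3 folds x 5 repeats

# Upper bound: every setting on every resample, plus one final refit
# on the full training set with the winning setting.
total_fits <- n_candidates * n_resamples + 1
total_fits                      # 136
```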


gbm_model_voters_grid

Stochastic Gradient Boosting

...

Resampling results across tuning parameters:

interaction.depth n.trees Accuracy Kappa

1 100 0.9603108 0.000912769

...

Tuning parameter 'shrinkage' was held constant at a value of 0.1

Tuning parameter 'n.minobsinnode' was held constant at a value of 10

Accuracy was used to select the optimal model using the largest value.

The final values used for the model were n.trees = 100, interaction.depth = 1, shrinkage = 0.1 and n.minobsinnode = 10.

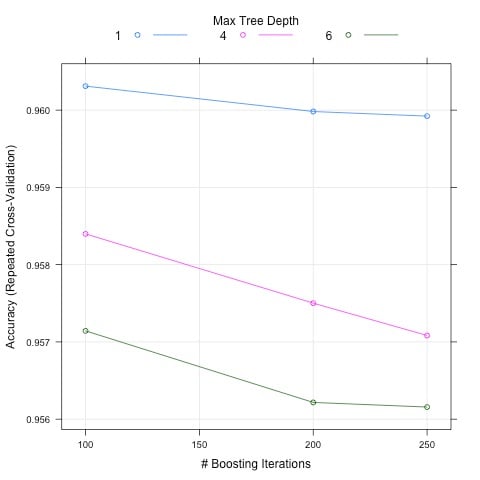

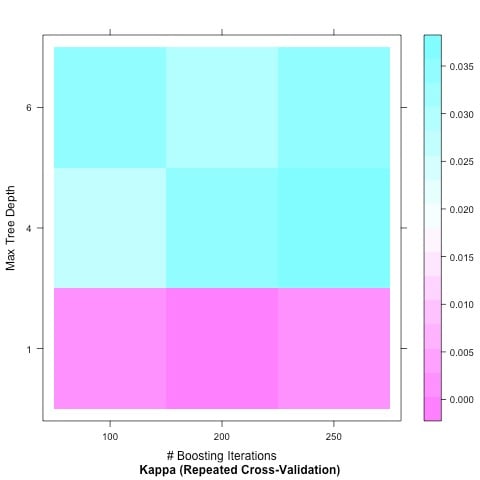

Plot hyperparameter models

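# Default plot: resampled Accuracy (the selection metric) across the grid
plot(gbm_model_voters_grid)

# Heat map of Kappa over the n.trees x interaction.depth grid
plot(gbm_model_voters_grid,
     metric = "Kappa",
     plotType = "level")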

Test it out for yourself!
