Introduction to the MovieLens dataset

Building Recommendation Engines with PySpark

Jamen Long

Data Scientist at Nike

MovieLens dataset

F. Maxwell Harper and Joseph A. Konstan. 2015

The MovieLens Datasets: History and Context.

ACM Transitions on Interactive Intelligent Systems (TiiS) 5, 4, Article 19 (December 2015), 19 Pages.

DOI=http://dx.doi.org/10.1145/2827872

MovieLens summary stats

F. Maxwell Harper and Joseph A. Konstan. 2015

The MovieLens Datasets: History and Context.

ACM Transitions on Interactive Intelligent Systems (TiiS) 5, 4, Article 19 (December 2015), 19 Pages.

DOI=http://dx.doi.org/10.1145/2827872

Ratings: 20,000,000+

Users: 138,493

Movies: 27,278

Explore the data

df.show()

df.columns()

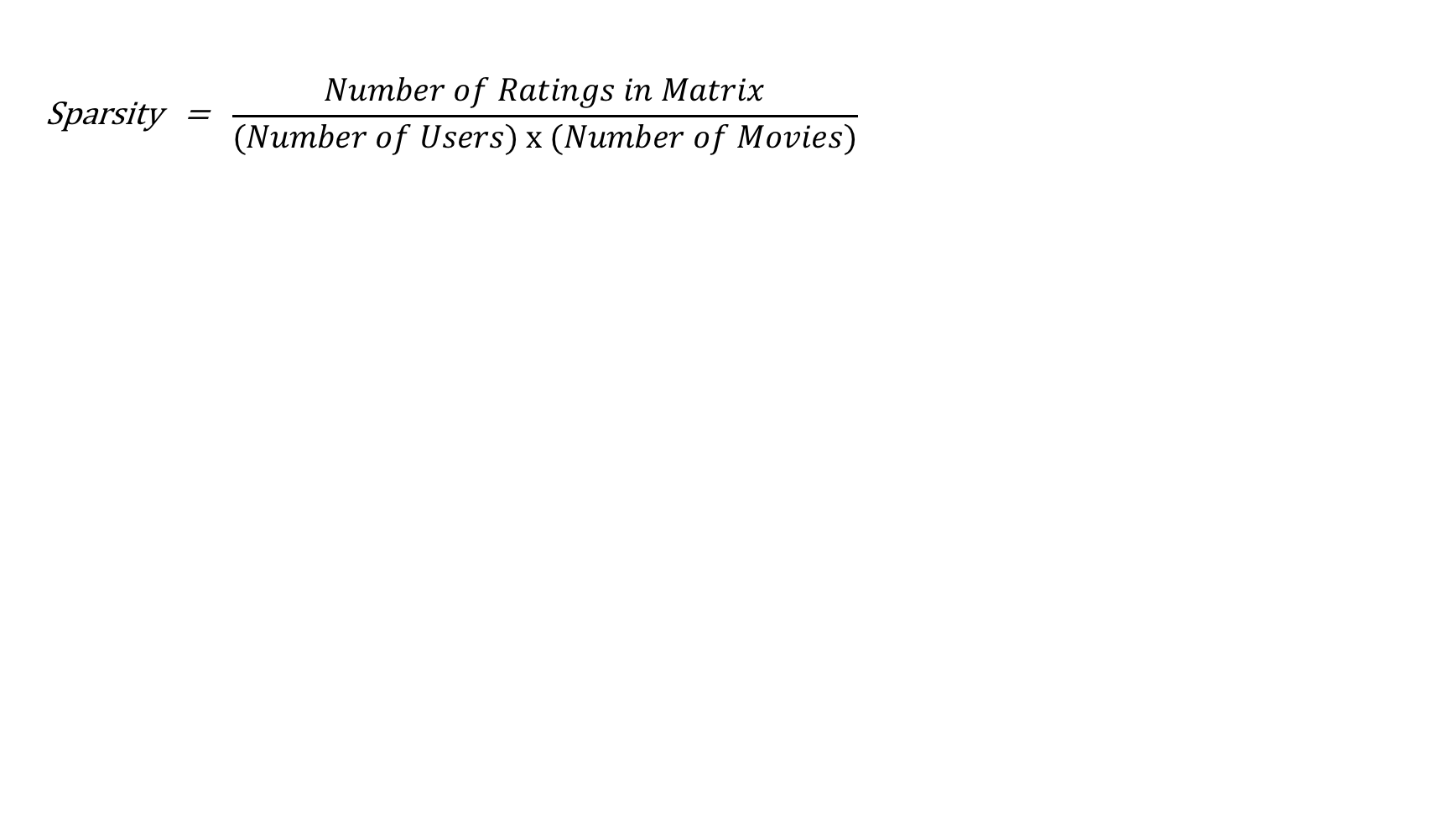

MovieLens sparsity

Sparsity: numerator

# Number of ratings in matrix

numerator = ratings.count()

Sparsity: users and movies

# Distinct users and movies

users = ratings.select("userId").distinct().count()

movies = ratings.select("movieId").distinct().count()

Sparsity: denominator

# Number of ratings in matrix numerator = ratings.count() # Distinct users and movies users = ratings.select("userId").distinct().count() movies = ratings.select("movieId").distinct().count()# Number of ratings matrix could contain if no empty cells denominator = users * movies

Sparsity

# Number of ratings in matrix

numerator = ratings.count()

# Distinct users and movies

users = ratings.select("userId").distinct().count()

movies = ratings.select("movieId").distinct().count()

# Number of ratings matrix could contain if no empty cells

denominator = users * movies

#Calculating sparsity

sparsity = 1 - (numerator*1.0 / denominator)

print ("Sparsity: "), sparsity

Sparsity: .998

The .distinct() method

ratings.select("userId").distinct().count()

671

GroupBy method

# Group by userId

ratings.groupBy("userId")

GroupBy method

# Num of song plays by userId

ratings.groupBy("userId").count().show()

+------+-----+

|userId|count|

+------+-----+

| 148| 76|

| 243| 12|

| 31| 232|

| 137| 16|

| 251| 19|

| 85| 752|

| 65| 737|

| 255| 9|

| 53| 190|

| 133| 302|

| 296| 74|

| 78| 301|

| 108| 136|

| 155| 3|

| 193| 174|

| 101| 1|

+------+-----+

GroupBy method min

from pyspark.sql.functions import min, max, avg

# Min num of song plays by userId

msd.groupBy("userId").count()

.select(min("count")).show()

+----------+

|min(count)|

+----------+

| 1|

+----------+

GroupBy method max

# Max num of song plays by userId

ratings.groupBy("userId").count()

.select(max("count")).show()

+----------+

|max(count)|

+----------+

| 1162|

+----------+

GroupBy method avg

# Avg num of song plays by userId

ratings.groupBy("userId").count()

.select(avg("count")).show()

+----------+

|avg(count)|

+----------+

| 233.34579|

+----------+

Filter method

# Removes users with less than 20 ratings

ratings.groupBy("userId").count().filter(col("count") >= 20).show()

+------+-----+

|userId|count|

+------+-----+

| 148| 76|

| 31| 232|

| 85| 752|

| 65| 737|

| 53| 190|

| 133| 302|

| 296| 74|

| 78| 301|

| 108| 136|

| 193| 174|

+------+-----+

Let's practice!

Building Recommendation Engines with PySpark