Scaling and performance

Advanced NLP with spaCy

Ines Montani

spaCy core developer

Processing large volumes of text

- Use the `nlp.pipe` method
- Processes texts as a stream, yields `Doc` objects
- Much faster than calling `nlp` on each text

BAD:

docs = [nlp(text) for text in LOTS_OF_TEXTS]

GOOD:

docs = list(nlp.pipe(LOTS_OF_TEXTS))
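A minimal sketch of the two approaches side by side. It assumes a blank English pipeline (`spacy.blank("en")`, tokenizer only) so that no trained model is needed, and the `LOTS_OF_TEXTS` contents and the `batch_size` value are made up for illustration.

```python
import spacy

# Assumption: a blank English pipeline (tokenizer only), so no model download is needed.
nlp = spacy.blank("en")

# Hypothetical stand-in for a large corpus.
LOTS_OF_TEXTS = [f"This is text number {i}." for i in range(1000)]

# One nlp() call per text.
docs_slow = [nlp(text) for text in LOTS_OF_TEXTS]

# nlp.pipe streams the texts in batches; batch_size is optional.
docs_fast = list(nlp.pipe(LOTS_OF_TEXTS, batch_size=256))

# Both approaches produce the same tokens; only the processing strategy differs.
same_tokens = all(
    [t.text for t in a] == [t.text for t in b]
    for a, b in zip(docs_slow, docs_fast)
)
print(len(docs_fast), same_tokens)
```

With a tokenizer-only pipeline the speed difference is small; the gain from `nlp.pipe` is expected to matter more when trained components run on each batch.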

Passing in context (1)

- Setting `as_tuples=True` on `nlp.pipe` lets you pass in `(text, context)` tuples
- Yields `(doc, context)` tuples
- Useful for associating metadata with the `doc`

data = [
    ('This is a text', {'id': 1, 'page_number': 15}),
    ('And another text', {'id': 2, 'page_number': 16}),
]

for doc, context in nlp.pipe(data, as_tuples=True):
    print(doc.text, context['page_number'])

This is a text 15

And another text 16

Passing in context (2)

from spacy.tokens import Doc

Doc.set_extension('id', default=None)
Doc.set_extension('page_number', default=None)

data = [
    ('This is a text', {'id': 1, 'page_number': 15}),
    ('And another text', {'id': 2, 'page_number': 16}),
]

for doc, context in nlp.pipe(data, as_tuples=True):
    doc._.id = context['id']
    doc._.page_number = context['page_number']
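To make the pattern concrete, here is a self-contained sketch that also reads the metadata back from the custom attributes. It assumes a blank English pipeline, and it passes `force=True` to `Doc.set_extension` (not in the slide) only so the snippet can be re-run without a registration error.

```python
import spacy
from spacy.tokens import Doc

# Assumption: a blank English pipeline is enough to illustrate the pattern.
nlp = spacy.blank("en")

# force=True (an addition for this sketch) avoids an error if the
# extensions were already registered in the same session.
Doc.set_extension("id", default=None, force=True)
Doc.set_extension("page_number", default=None, force=True)

data = [
    ("This is a text", {"id": 1, "page_number": 15}),
    ("And another text", {"id": 2, "page_number": 16}),
]

docs = []
for doc, context in nlp.pipe(data, as_tuples=True):
    doc._.id = context["id"]
    doc._.page_number = context["page_number"]
    docs.append(doc)

# The metadata now travels with each Doc.
pages = [(doc.text, doc._.id, doc._.page_number) for doc in docs]
print(pages)
```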

Using only the tokenizer

- Don't run the whole pipeline when you only need a tokenized `Doc`!

Using only the tokenizer (2)

- Use `nlp.make_doc` to turn a text into a `Doc` object

BAD:

doc = nlp("Hello world!")

GOOD:

doc = nlp.make_doc("Hello world!")
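As a rough check of what `nlp.make_doc` returns, the sketch below compares its tokens with those from a full `nlp` call. It assumes a blank English pipeline, which has no components after the tokenizer, so both calls should yield the same tokens here; with a trained pipeline, only the `nlp` call would add tags, parses, and entities.

```python
import spacy

# Assumption: a blank English pipeline (tokenizer only, no other components).
nlp = spacy.blank("en")

text = "Hello world!"

# Full call: tokenizer plus every pipeline component (none in a blank pipeline).
doc_full = nlp(text)

# Tokenizer only: skips the pipeline components entirely.
doc_tok = nlp.make_doc(text)

tokens = [token.text for token in doc_tok]
print(tokens)
```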

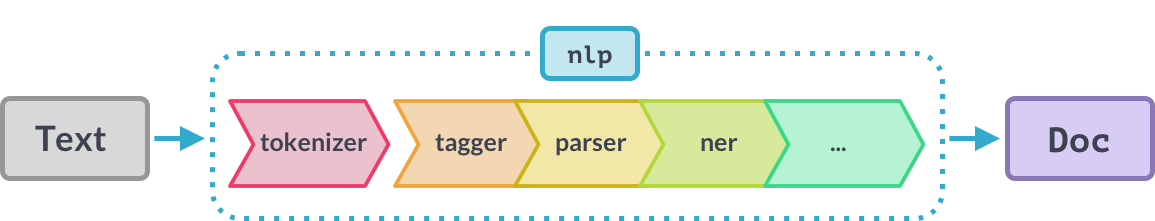

Disabling pipeline components

- Use `nlp.disable_pipes` to temporarily disable one or more pipes

# Disable tagger and parser
with nlp.disable_pipes('tagger', 'parser'):
    # Process the text and print the entities
    doc = nlp(text)
    print(doc.ents)

- Restores them after the `with` block
- Only runs the remaining components
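The disable-and-restore behavior can be observed through `nlp.pipe_names`. This sketch assumes spaCy v3, where `nlp.add_pipe` takes a component name as a string, and it uses the rule-based `sentencizer` in place of the tagger and parser so that no trained model is required. In v3, `nlp.disable_pipes` still works but is deprecated in favor of `nlp.select_pipes(disable=[...])`.

```python
import spacy

# Assumption: spaCy v3 and a blank English pipeline with one rule-based component.
nlp = spacy.blank("en")
nlp.add_pipe("sentencizer")

before = list(nlp.pipe_names)

with nlp.disable_pipes("sentencizer"):
    # Inside the block the disabled component is not run.
    during = list(nlp.pipe_names)
    doc = nlp("First sentence. Second sentence.")

# After the block the component is restored.
after = list(nlp.pipe_names)
print(before, during, after)
```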

Let's practice!
