Model fine-tuning using human feedback

Introduction to LLMs in Python

Iván Palomares Carrascosa, PhD

Senior Data Science & AI Manager

Why human feedback in LLMs

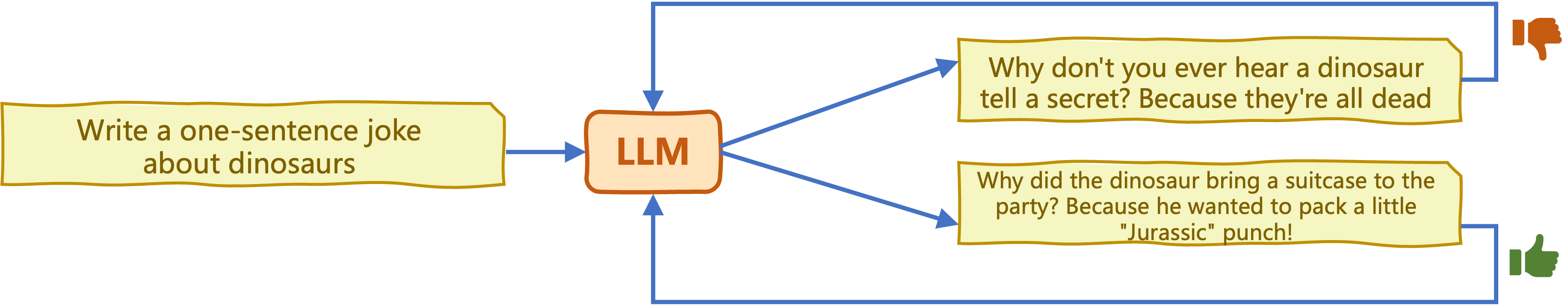

"What makes an LLM good?", "What is the LLM user looking for?"

- Objective, subjective and context-dependent criteria

- Truthfulness, originality, fine-grained detail vs. concise responses, etc.

- Objective metrics cannot fully capture subjective quality in LLM outputs

- Use human feedback as a guide (loss function) to optimize LLM outputs

Reinforcement Learning from Human Feedback (RLHF)

Reinforcement Learning (RL): an agent learns to make decisions from feedback (rewards), adapting its behavior to maximize cumulative reward over time

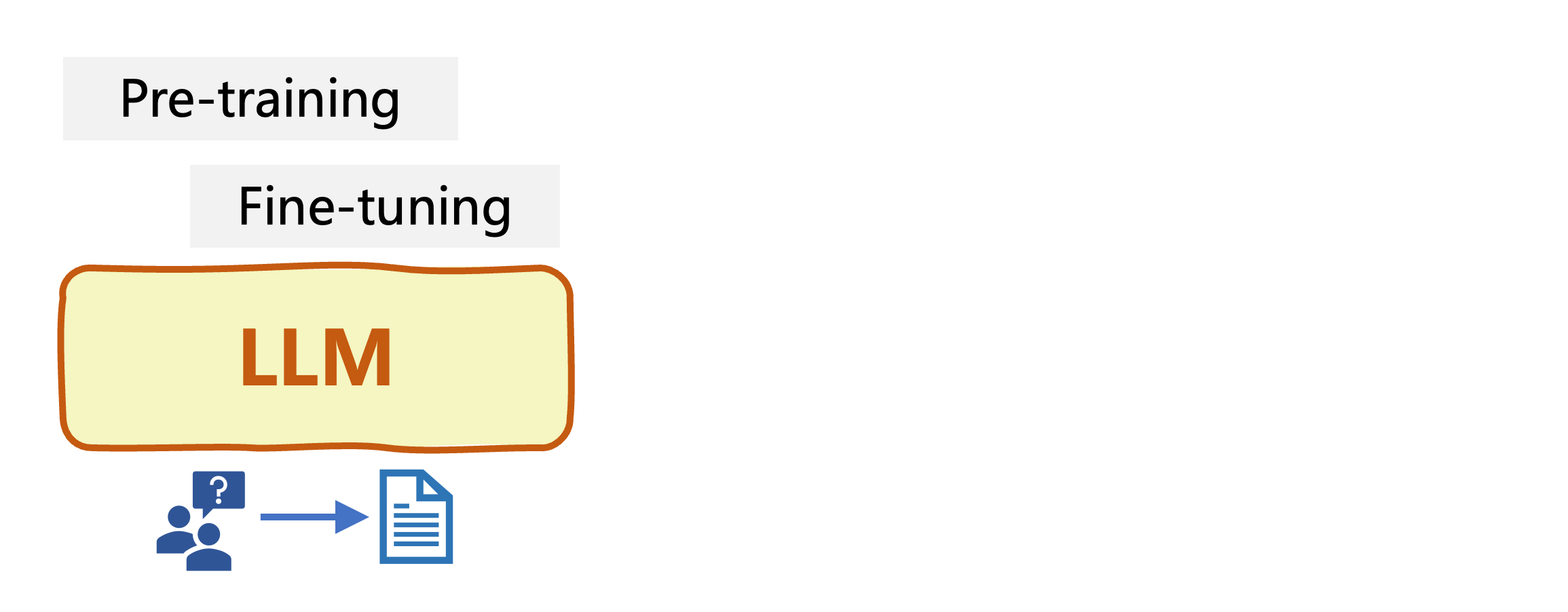

- Initial LLM

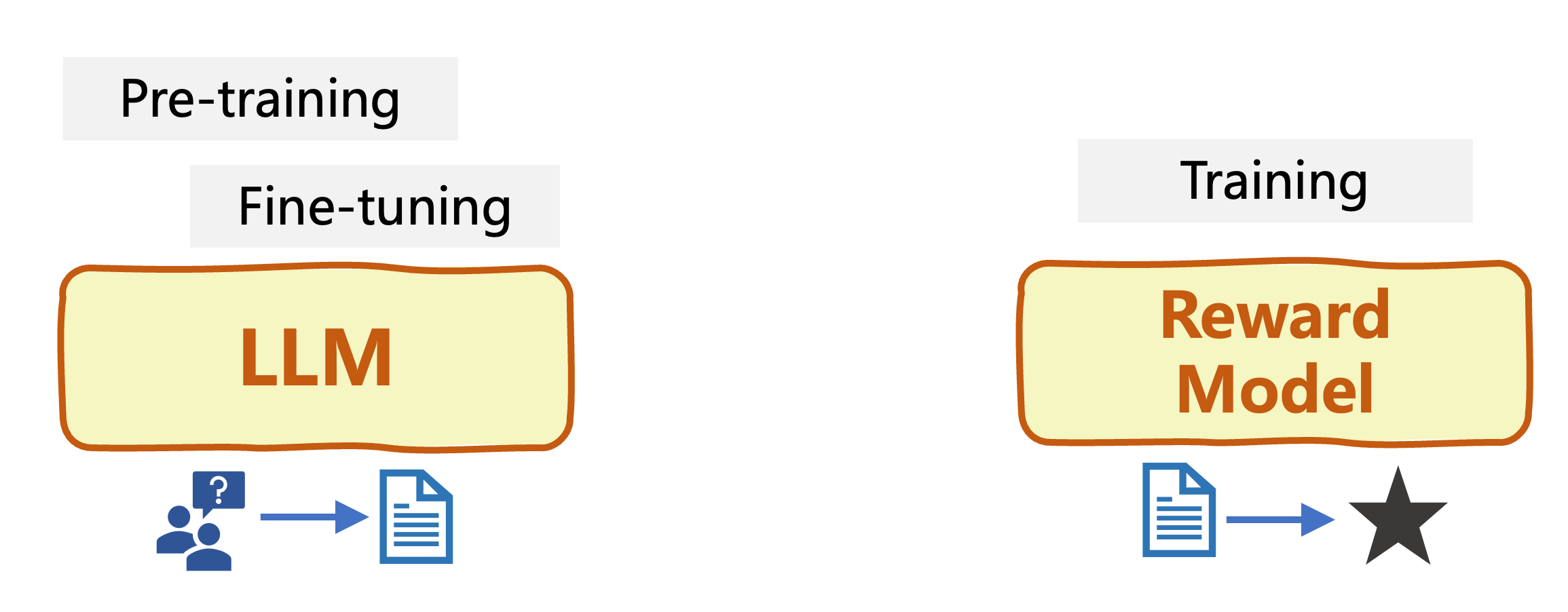

- Train a Reward Model (RM)

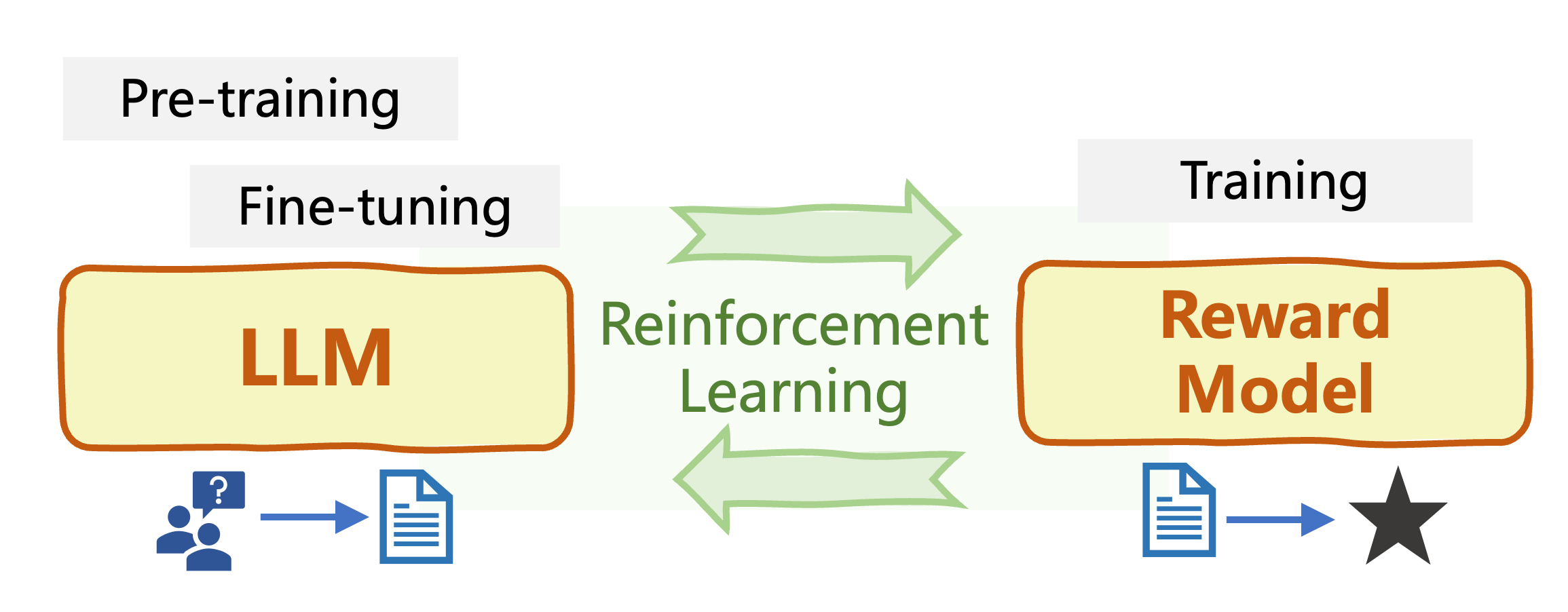

- Optimize (fine-tune) the LLM with an RL algorithm (e.g. PPO), using the trained RM to supply rewards
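The three steps above can be illustrated with a toy sketch. Everything here is an assumption for illustration: the "LLM" is just a softmax policy over three canned responses, `toy_reward_model` is a hand-written stand-in for a trained RM, and the update rule is plain REINFORCE rather than PPO, chosen only because it fits in a few lines.

```python
import math
import random

# Hypothetical candidate responses the toy "LLM" can choose from.
RESPONSES = ["short answer", "detailed answer", "off-topic answer"]

def toy_reward_model(response):
    """Stand-in for a trained reward model (made-up scores, not real data)."""
    scores = {"short answer": 0.2, "detailed answer": 1.0, "off-topic answer": -1.0}
    return scores[response]

def softmax(logits):
    """Convert logits into a probability distribution."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_policy(steps=2000, lr=0.1, seed=0):
    """Fine-tune the toy policy with REINFORCE, using the reward model as feedback."""
    rng = random.Random(seed)
    logits = [0.0, 0.0, 0.0]  # step 1: the "initial LLM" is a uniform policy
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(len(RESPONSES)), weights=probs)[0]
        reward = toy_reward_model(RESPONSES[action])  # step 2: RM scores the output
        # Step 3: policy-gradient update. For a softmax policy, the gradient of
        # log pi(action) w.r.t. logit i is (1 if i == action else 0) - probs[i].
        for i in range(len(logits)):
            grad = (1.0 if i == action else 0.0) - probs[i]
            logits[i] += lr * reward * grad
    return softmax(logits)

final_probs = train_policy()
for response, prob in zip(RESPONSES, final_probs):
    print(f"{response}: {prob:.3f}")
```

Over training, probability mass shifts toward the response the reward model scores highest, which is the behavior RLHF aims for at LLM scale.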

Building a reward model

- Pre-trained LLM that generates text

- Collect samples of LLM input-output pairs

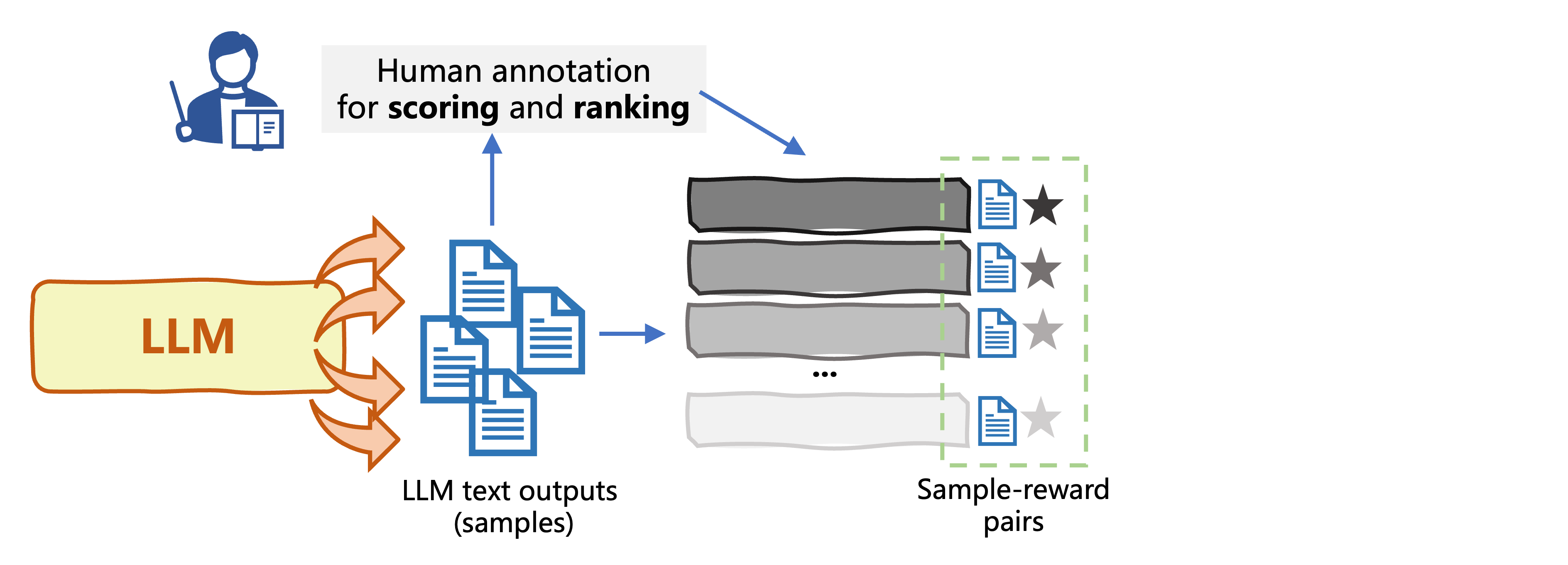

- Generate dataset to train a reward model: human preferences

- Training instances are sample-reward pairs

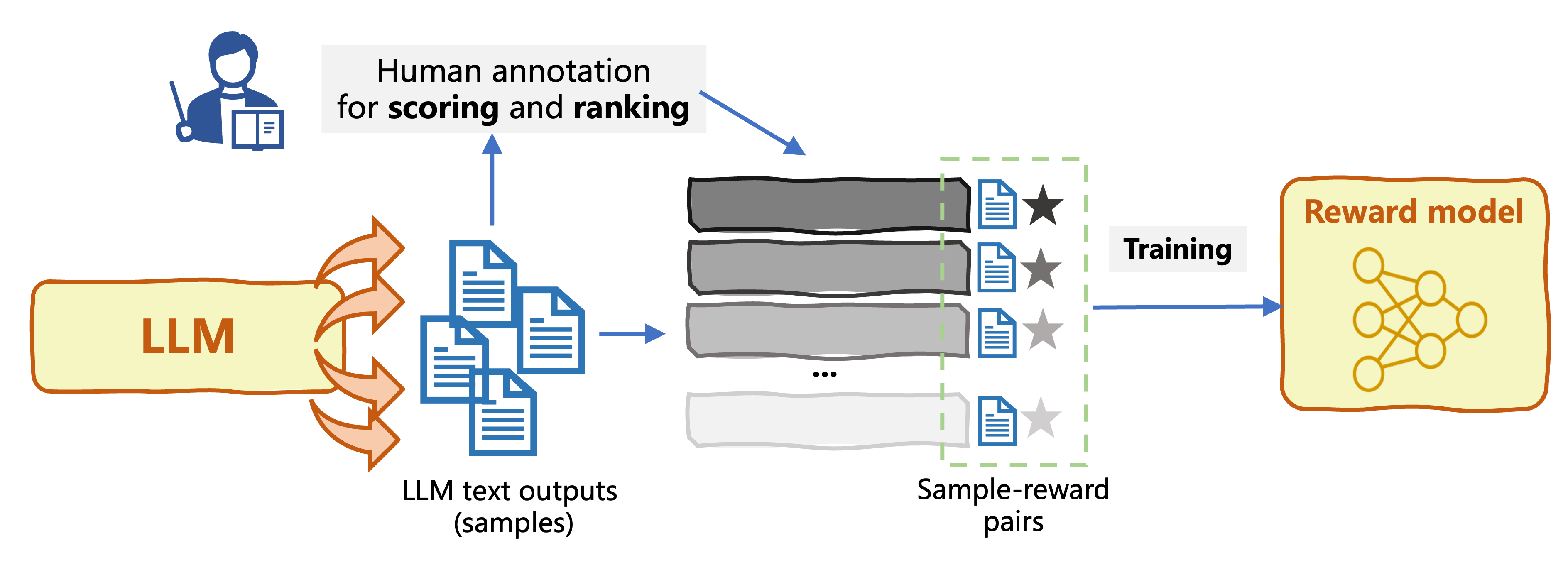
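As a rough sketch of what sample-reward pairs might look like in code: the `RewardSample` class, the 1-5 rating scale, and the example ratings below are all hypothetical, and averaging annotator scores is only one of several possible ways to turn human preferences into a reward.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass(frozen=True)
class RewardSample:
    """One training instance for a reward model (hypothetical structure)."""
    prompt: str
    response: str
    reward: float

# Made-up annotator ratings on an assumed 1-5 scale (illustrative only).
raw_ratings = [
    ("Explain RLHF briefly.",
     "RLHF fine-tunes an LLM using human preference signals.",
     [5, 4, 5]),
    ("Explain RLHF briefly.",
     "I like turtles.",
     [1, 1, 2]),
]

def to_reward(ratings, low=1, high=5):
    """Map the mean rating onto the [0, 1] interval."""
    return (mean(ratings) - low) / (high - low)

# Each LLM input-output sample is paired with a scalar reward.
dataset = [RewardSample(p, r, to_reward(scores)) for p, r, scores in raw_ratings]

for sample in dataset:
    print(f"{sample.reward:.2f}  {sample.response}")
```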

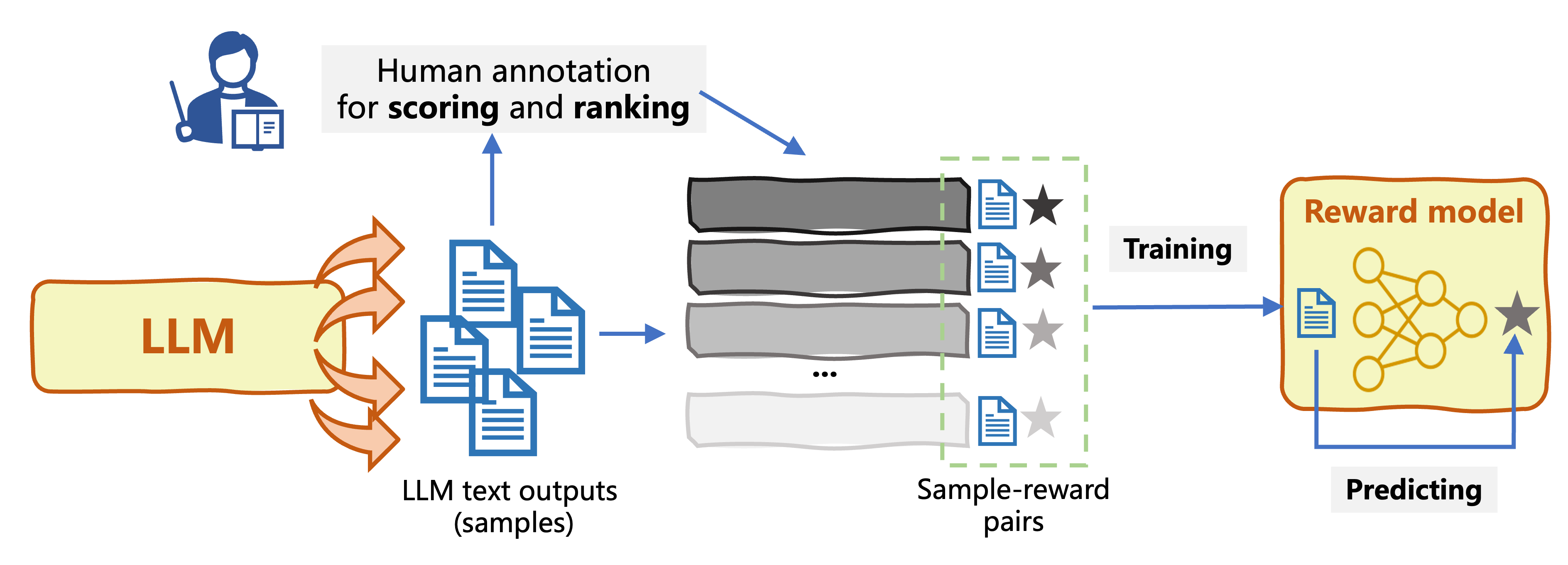

- Train Reward Model (RM) capable of predicting rewards for LLM input-outputs

- The trained RM is used by an RL algorithm to fine-tune the original LLM
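To make the idea of a model that predicts rewards concrete, here is a deliberately tiny sketch. It assumes a bag-of-words linear regressor trained with SGD on made-up sample-reward pairs; a real RM would typically be a fine-tuned transformer, and all names and data below are hypothetical.

```python
from collections import Counter

# Made-up (response text, reward) pairs standing in for human-labelled data.
train_data = [
    ("clear accurate helpful answer", 1.0),
    ("accurate and detailed answer", 0.9),
    ("rude unhelpful reply", 0.1),
    ("off topic rude rambling", 0.0),
]

def featurize(text):
    """Bag-of-words features: token -> count."""
    return Counter(text.lower().split())

def predict(weights, bias, text):
    """Predicted reward: bias plus the weighted sum of token counts."""
    return bias + sum(weights.get(tok, 0.0) * cnt
                      for tok, cnt in featurize(text).items())

def train_reward_model(data, epochs=200, lr=0.05):
    """Fit the linear model with SGD on squared error."""
    weights, bias = {}, 0.0
    for _ in range(epochs):
        for text, target in data:
            error = predict(weights, bias, text) - target
            bias -= lr * error
            for tok, cnt in featurize(text).items():
                weights[tok] = weights.get(tok, 0.0) - lr * error * cnt
    return weights, bias

weights, bias = train_reward_model(train_data)

# Score two unseen responses; an RL algorithm would use such scores as rewards.
good = predict(weights, bias, "helpful accurate answer")
bad = predict(weights, bias, "rude rambling reply")
print(f"good: {good:.2f}, bad: {bad:.2f}")
```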

TRL: Transformer Reinforcement Learning

TRL: a library to train transformer-based LLMs using a variety of RL approaches

Proximal Policy Optimization (PPO): optimizes the LLM on `<prompt, response, reward>` triplets

- `AutoModelForCausalLMWithValueHead`: incorporates a value head for RL scenarios

- `model_ref`: reference model, e.g. the loaded pre-trained model before optimizing

- `respond_to_batch`: similar purpose as `model.generate()`, adapted to RL

- Set up a `PPOTrainer` instance

PPO set-up example:

    # Uses the classic TRL PPO interface (PPOTrainer.step); newer TRL releases changed it
    import torch
    from transformers import AutoTokenizer
    from trl import PPOTrainer, PPOConfig, create_reference_model, AutoModelForCausalLMWithValueHead
    from trl.core import respond_to_batch

    # Policy model with a value head, plus a frozen reference copy
    model = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')
    model_ref = create_reference_model(model)
    tokenizer = AutoTokenizer.from_pretrained('gpt2')
    if tokenizer.pad_token is None:
        tokenizer.add_special_tokens({'pad_token': '[PAD]'})

    # Encode a prompt and sample a response from the current policy
    prompt = "My plan today is to "
    input_ids = tokenizer.encode(prompt, return_tensors="pt")
    response = respond_to_batch(model, input_ids)

    # Run one PPO step on a single <prompt, response, reward> triplet
    ppo_config = PPOConfig(batch_size=1, mini_batch_size=1)
    ppo_trainer = PPOTrainer(ppo_config, model, model_ref, tokenizer)
    reward = [torch.tensor(1.0)]
    train_stats = ppo_trainer.step([input_ids[0]], [response[0]], reward)
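For background on what PPO optimizes, the sketch below computes the clipped surrogate objective at the core of the algorithm. It is a simplified, pure-Python illustration and not TRL's implementation: the function name and inputs are assumptions, and a full trainer typically combines this term with others (such as a value loss and a KL penalty against the reference model) that are omitted here.

```python
import math

def ppo_clipped_objective(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective over a batch of sampled actions.

    logp_new / logp_old: log-probabilities of each action under the
    current and the pre-update policy; advantages: advantage estimates.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a) / pi_old(a)
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)

# Unchanged policy: ratio is 1, so the objective equals the advantage.
same_policy = ppo_clipped_objective([0.0], [0.0], [2.0])

# Large policy shift with a positive advantage: the gain is capped at 1 + clip_eps.
capped = ppo_clipped_objective([1.0], [0.0], [1.0])

print(same_policy, capped)
```

Clipping removes the incentive to move the policy far from the one that generated the samples in a single update, which is why a reference to the pre-update probabilities is needed.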

Let's practice!
